Apache Spark is a powerful open-source engine designed for large-scale data processing and analytics.

Its ability to handle massive datasets with speed and efficiency makes it a crucial tool in fields like data science, machine learning, and business intelligence.

By learning Apache Spark, you can gain the skills to process terabytes of data, build data pipelines, and develop advanced analytics solutions, opening doors to exciting career opportunities in today’s data-driven world.

Finding the right Apache Spark course can be challenging, with a vast array of options available online.

You’re looking for a comprehensive and engaging course that covers the core concepts of Spark, provides practical experience, and equips you with the skills needed to tackle real-world data challenges.

Based on our analysis, the best Apache Spark course overall is Udemy’s Apache Spark with Scala - Hands On with Big Data!

This course provides a comprehensive introduction to Spark, covering core concepts like RDDs, SparkSQL, DataFrames, and even advanced topics like machine learning with MLlib and Spark Streaming.

The hands-on approach with real-world examples makes it ideal for both beginners and those with some experience seeking to deepen their Spark expertise.

While this is our top recommendation, we understand that you might be looking for courses tailored to specific programming languages or learning preferences.

We’ve reviewed a variety of other Apache Spark courses to provide you with diverse options.

Continue reading to explore our curated list of courses and find the perfect fit for your Apache Spark learning journey.

Apache Spark with Scala - Hands On with Big Data!

Provider: Udemy

This Udemy course will teach you how to build data-driven applications using Apache Spark.

You’ll begin by setting up your development environment using IntelliJ and SBT, then write a simple Scala program in Spark.

Through a crash course, you’ll master Scala’s syntax and structure, including flow control, functions, and data structures.

You’ll then delve into Resilient Distributed Datasets (RDDs), the building blocks of Spark programming.

You’ll discover how to use RDDs to manipulate and analyze data, using real-world examples like counting movie ratings or finding the average number of friends by age in a social network.
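To make the friends-by-age exercise concrete, here is a minimal plain-Python sketch of the aggregation logic behind it. It is not the course's code and does not use Spark: the sample records and the function name `average_friends_by_age` are made up for illustration. In Spark, the same idea is usually expressed on key/value RDDs by keeping a running sum and count per key, then dividing.

```python
from collections import defaultdict

# Hypothetical (age, number_of_friends) records, invented for this sketch.
records = [(33, 385), (33, 2), (55, 221), (40, 465), (55, 79)]


def average_friends_by_age(rows):
    """Compute the mean friend count per age using a per-key (sum, count) pair."""
    totals = defaultdict(lambda: (0, 0))  # age -> (sum of friends, row count)
    for age, friends in rows:
        running_sum, running_count = totals[age]
        totals[age] = (running_sum + friends, running_count + 1)
    # Divide each sum by its count to get the average for that age.
    return {age: s / c for age, (s, c) in totals.items()}


averages = average_friends_by_age(records)
print(averages)
```

Keeping a (sum, count) pair per key, rather than a list of all values, is what lets this kind of aggregation be combined piece by piece across machines.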

The course introduces you to SparkSQL, DataFrames, and Datasets, Spark’s higher-level APIs for working with structured data.

You’ll learn to use SQL queries to analyze data and solve complex problems.

You’ll then explore how to run Spark on a cluster using Amazon EMR and Hadoop YARN, gaining the skills to use Spark in a production environment.

You’ll learn how to package your scripts and troubleshoot Spark jobs on a cluster.

The course doesn’t shy away from advanced topics.

You’ll dive into machine learning with Spark MLlib, using algorithms like collaborative filtering and linear regression on large datasets.

You’ll also explore Spark Streaming, learning how to process real-time data streams for tasks like monitoring Twitter hashtags and analyzing log files.

You’ll even work with GraphX, a library for analyzing and traversing graphs using Spark.

Apache Spark 2.0 with Java -Learn Spark from a Big Data Guru

Provider: Udemy

This Apache Spark course teaches you how to use Spark, an engine for processing large datasets.

You begin by setting up your development environment with Java and either Eclipse or IntelliJ IDEA.

You even learn how to install Git and run your first Spark job, troubleshooting any problems along the way, especially if you’re using Windows.

Next, you’ll discover Resilient Distributed Datasets (RDDs).

You’ll learn to create RDDs and use transformations like map, filter, and flatMap to manipulate data.

You’ll also practice actions like reduce and collect.
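As a rough illustration of what these operations do, the sketch below mimics them in plain Python on an in-memory list. It is an analogy under stated assumptions, not Spark code and not taken from the course: the sample `lines` are made up, and `list(...)` stands in for an action such as collect that forces evaluation.

```python
from functools import reduce
from itertools import chain

# Hypothetical input, invented for this sketch.
lines = ["spark makes big data simple", "big data needs big tools"]

# flatMap analogue: each line yields several words, flattened into one sequence.
words = list(chain.from_iterable(line.split() for line in lines))

# map analogue: exactly one output (the word length) per input word.
lengths = list(map(len, words))

# filter analogue: keep only the words longer than four characters.
long_words = list(filter(lambda w: len(w) > 4, words))

# reduce analogue: combine all elements into a single value.
total_chars = reduce(lambda a, b: a + b, lengths)

print(long_words, total_chars)
```

In Spark, the transformations are lazy and only run when an action asks for a result, which is loosely similar to how Python's `map` and `filter` produce nothing until something consumes them.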

Understanding concepts like caching and persistence will help you improve your Spark applications’ performance.

As you progress, you’ll explore how Spark works and its main parts.

You’ll learn about Spark SQL, a powerful tool for analyzing structured data.

Through hands-on exercises like the house price problem, you’ll gain practical experience using Spark SQL.

You’ll even discover Datasets, a typed, higher-level alternative to RDDs, and learn how to convert between the two.

Finally, you’ll learn to run your Spark applications on a cluster, a group of networked machines that work together.

You’ll discover how to package your application and use tools like spark-submit to run it on the cluster.

The course also teaches you how to use Amazon EMR to run your applications on a large cluster, giving you valuable skills for handling massive datasets.

Distributed Computing with Spark SQL

Provider: Coursera

This course teaches you how to use Spark SQL to work with large datasets.

You’ll start by learning why distributed computing is important and how Spark DataFrames help manage all that information.

The course uses Databricks, a platform that makes working with Spark easier.

You’ll learn to write Spark SQL queries in notebooks, which are like digital documents where you can write and run code.

You’ll then go deeper into how Spark works, learning about caching, which can speed up repeated queries by keeping data in memory, and shuffle partitions, which control how data is split up when Spark redistributes it across machines for operations such as joins and aggregations.

You’ll even explore the Spark UI, a visual tool that helps you understand your Spark jobs.

The course also covers how to connect Spark to different data sources like files, databases, and APIs.

You’ll work with different file formats, including JSON, and learn how to structure your data using schemas.

Finally, the course will teach you about data pipelines and the differences between data lakes and data warehouses.

You’ll discover Delta Lake, a powerful tool for managing data, and learn how to use its features to create a “lakehouse” architecture.

You’ll even learn how to use Delta Lake to build data pipelines to process and analyze your data.

Spark, Hadoop, and Snowflake for Data Engineering

Provider: Coursera

The course kicks off with Hadoop, a long-established framework for distributed storage and processing, before moving on to Spark, a fast in-memory data processing engine.

You’ll quickly get hands-on with Resilient Distributed Datasets (RDDs), Spark’s core data abstraction, and learn to use PySpark, Spark’s Python API.

You’ll learn Spark SQL, Spark’s module for querying structured data with SQL, through hands-on demos and practice exercises.

Once you’ve conquered Spark and Hadoop, you’ll transition to Snowflake, a cloud-based data warehousing platform.

You’ll become proficient in navigating its web interface, creating tables, writing data, and accessing it seamlessly using the Python connector.

The course then introduces Databricks, a cloud-based platform for Apache Spark, where you’ll explore advanced features for machine learning and data engineering.

Finally, you’ll delve into MLOps, a set of practices for building and deploying machine learning models, using tools like MLflow. You’ll also explore DevOps and DataOps principles for automating and streamlining data engineering workflows.

You’ll learn how to build robust cloud pipelines using AWS Step Functions, Lambda, and other powerful tools.

Apache Spark 3 - Spark Programming in Python for Beginners

Provider: Udemy

This course on Apache Spark 3 is designed to take you from beginner to proficient in using Python to work with Spark.

You’ll start with the basics, building a strong understanding of Big Data and how solutions like Apache Spark address the challenges of processing massive datasets.

You’ll learn about Hadoop, a cornerstone of Big Data processing, and its evolution, and discover how Data Lakes offer a modern approach to storing and managing vast amounts of data.

The course guides you through setting up your Spark environment.

You’ll learn how to configure Spark both locally on your computer and in the cloud using platforms like Databricks.

You’ll create your first Spark applications, working with Spark DataFrames, which are powerful structures for organizing and manipulating data within Spark.

You’ll write queries using Spark SQL, Spark’s module for querying structured data with SQL.

The heart of the course dives into the architecture and execution model of Spark.

You’ll discover how Spark distributes your code across a cluster of machines, allowing it to process huge datasets efficiently.

You’ll explore different execution modes, understanding how to run your Spark applications in various environments.

The course breaks down the Spark programming model, teaching you how to structure your Spark projects, manage Spark sessions, and build complete Spark applications.

You’ll explore Spark’s APIs, including RDDs and the Structured API, gaining the skills to work with data in different formats like CSV, JSON, and Parquet.

You’ll learn how to connect to various data sources, define your own data schemas, and even work with Spark SQL tables.

The course covers data transformation techniques, showing you how to manipulate data within DataFrames, create user-defined functions, and perform aggregations to extract meaningful insights from your data.

Finally, you’ll put all your skills into practice with a capstone project.

This project challenges you to build a real-world Spark application, requiring you to apply your knowledge of data transformation, integration with tools like Kafka, and resource estimation.

You’ll even learn how to manage your project using source control and set up a CI/CD pipeline, essential skills for any aspiring Spark developer.

Taming Big Data with Apache Spark and Python - Hands On!

Provider: Udemy

This course begins with setting up your Spark development environment on your Windows computer.

You will install the required software, including Anaconda, a JDK, and Apache Spark itself.

You will then test your setup by running a simple Spark script that analyzes movie ratings from the MovieLens dataset.

You will then explore the fundamentals of Spark, including Resilient Distributed Datasets (RDDs).

You will learn how to perform transformations and actions on data using RDDs, work with key/value pairs, filter RDDs, and practice these concepts through hands-on exercises.

For example, you’ll learn how to find the minimum and maximum temperatures from a weather dataset.

This course then introduces SparkSQL, DataFrames, and Datasets, showing you how to efficiently handle structured data and execute SQL-style queries on DataFrames using Spark and Python.

You will also learn how to run Spark on a cluster using Amazon’s Elastic MapReduce (EMR) service and work with Spark’s machine learning library, MLlib, applying machine learning algorithms to large datasets.

Finally, you will be introduced to Spark Streaming and Structured Streaming, learning how to process streaming data in real time.

You’ll finish the course with a solid understanding of Spark, ready to tackle your own big data challenges.

You’ll have the skills to manipulate data, perform analysis, and even apply machine learning using Spark.

NoSQL, Big Data, and Spark Foundations Specialization

Provider: Coursera

The specialization begins by introducing you to NoSQL databases and their advantages over traditional databases.

You’ll work with popular NoSQL systems like MongoDB and Cassandra, mastering database creation, data querying, and management of permissions.

You’ll also explore cloud databases such as IBM Cloudant and learn the difference between ACID and BASE consistency models, which helps you choose the right database for your project.

The specialization then immerses you in the world of Big Data using Apache Hadoop and its components like HDFS, MapReduce, and Hive.

You’ll gain practical experience in processing large datasets and learn how to use tools like Docker, Kubernetes, and Python for real-world applications.

You’ll then dive into Apache Spark, mastering its components like DataFrames and SparkSQL for efficient Big Data processing.

Finally, you’ll explore machine learning with Spark, learning supervised and unsupervised learning techniques to build and deploy machine learning models using SparkML.

You’ll get to perform regression, classification, and clustering tasks, gaining hands-on experience in building data engineering and machine learning pipelines.

The specialization even introduces you to Generative AI, a cutting-edge area of machine learning, equipping you with valuable skills for a data engineering career.

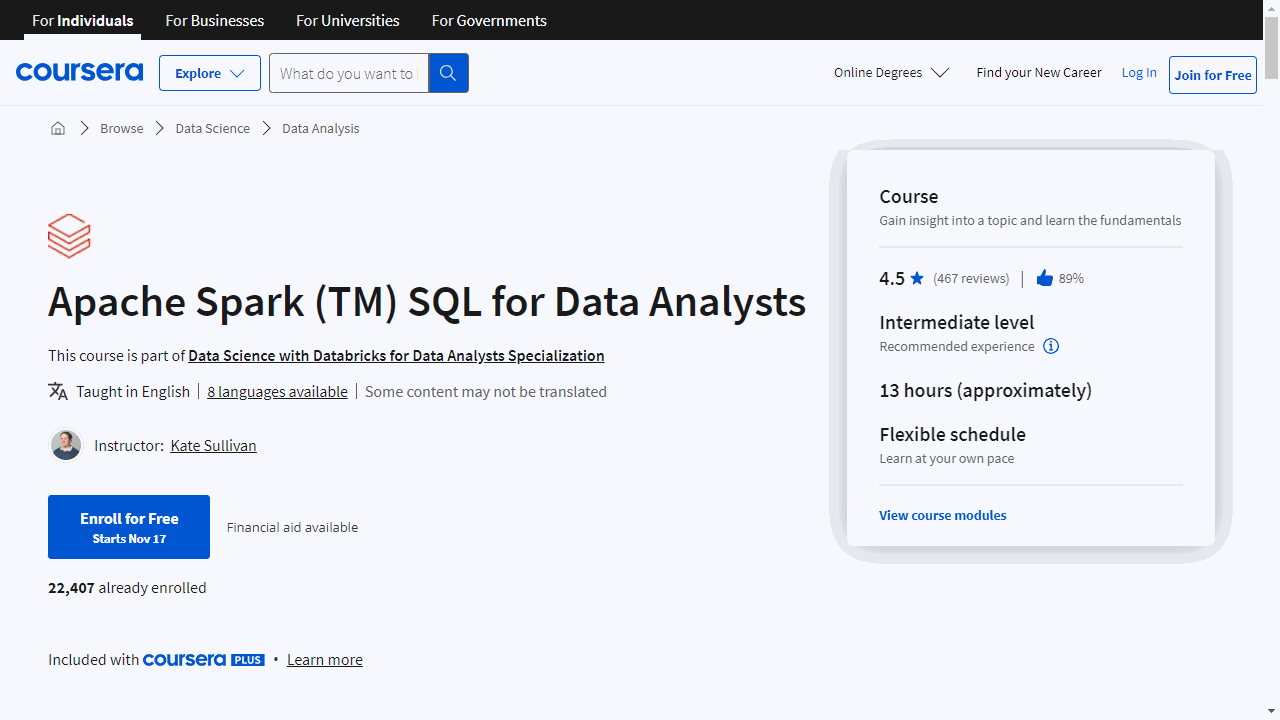

Apache Spark (TM) SQL for Data Analysts

Provider: Coursera

This Apache Spark SQL course on Coursera equips you with the skills needed to analyze big data.

You will begin by understanding the difficulties of working with big data and how Apache Spark provides solutions.

The course guides you in setting up your environment on Databricks Community Edition, a user-friendly platform for working with Spark.

You will master Spark SQL, Apache Spark’s module for querying and analyzing structured data with SQL.

You will learn to write queries, explore data through visualizations, and conduct exploratory data analysis.

The course then teaches you how to optimize your Spark SQL queries for faster processing using techniques like caching and selective data loading.

You will then move on to more complex data management tasks.

You will learn how to handle nested data, a common challenge in real-world datasets, and reshape it into a usable form through data munging.

The course delves into strategies for managing this data, including how to partition tables for quicker access.

You will learn how to aggregate and summarize data to gain insights for better decision-making.

Finally, the course introduces Delta Lake, a sophisticated technology that merges the strengths of data lakes and data warehouses, enabling you to manage data with improved consistency and reliability.
