Finding a good Azure Data Factory course on Udemy can be a real challenge, especially with so many options available.

You want a course that’s comprehensive, engaging, and taught by experienced instructors.

Most importantly, you need to make sure it aligns with your learning style and goals.

We’ve carefully reviewed the best Azure Data Factory courses on Udemy and narrowed them down to our top picks.

For the best overall Azure Data Factory course, we recommend Azure Data Factory : from Zero to Hero of Azure Data Factory.

This course offers a comprehensive and practical approach, taking you from the fundamentals to advanced techniques.

The author covers a wide range of topics, including pipelines, activities, linked services, datasets, and triggers, all while providing real-world examples to solidify your understanding.

If this isn’t quite what you’re looking for, don’t worry.

We have plenty of other great recommendations for beginners, intermediate learners, and experts.

We also have courses focused on specific aspects of Azure Data Factory like Continuous Integration and Continuous Delivery, as well as courses that delve into the integration with Synapse Analytics.

Keep reading for a complete list of our top picks and detailed reviews for each course!

Azure Data Factory For Data Engineers - Project on Covid19

Best Udemy course for beginners who want to build a real-world data pipeline with Azure Data Factory.

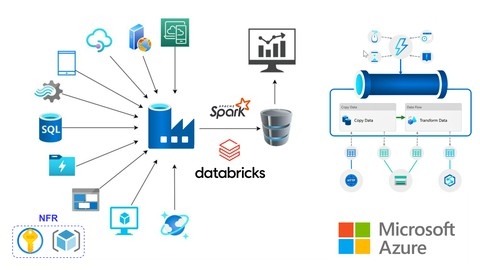

This Azure Data Factory course takes a hands-on approach to building a real-world data pipeline using COVID-19 data as its core project.

You’ll start by mastering the fundamental components of Azure Data Factory (Linked Services, Datasets, and Pipelines) and learn how to extract, transform, and load (ETL) data from various sources, including Azure Blob Storage and HTTP endpoints.

Next, you’ll dive into Data Flows, Azure Data Factory’s visual tool for cleaning, reshaping, and preparing your data for analysis.

Through transformations like Filter, Select, and Pivot, you’ll learn how to manipulate your COVID-19 data, uncovering valuable insights that would otherwise be hidden.

The course extends beyond basic ETL, introducing you to advanced analytics with HDInsight and Databricks.

You’ll process data using Hive scripts and explore data analysis in the cloud, building a solid foundation for working with big data and integrating Azure Data Factory with analytics platforms.

But this course isn’t just about building pipelines; it’s also about making them production-ready.

You’ll learn how to monitor and alert on pipeline performance using Azure Monitor and Log Analytics, and implement Continuous Integration and Continuous Delivery (CI/CD) with Azure DevOps for automated deployments and increased reliability.

Throughout the course, you’ll work with tools like Power BI for reporting and Git for code management.

You’ll also delve into the complexities of managing access to Data Lake Storage, exploring solutions using both Managed Identities and Key Vault, enhancing security and control within your data infrastructure.

This comprehensive course offers a practical and in-depth journey into the world of Azure Data Factory.

From building your first data pipeline to implementing advanced analytics and production-ready deployments, you’ll gain the skills and knowledge to confidently tackle real-world data challenges using Azure Data Factory.

Mastering Azure Data Factory: From Basics to Advanced Level

Best Udemy course for beginners looking to gain hands-on experience with Azure Data Factory fundamentals.

You’ll start by gaining a solid understanding of cloud computing fundamentals and setting up your Azure environment.

The course then dives deep into Azure Data Factory, covering its core building blocks: datasets, linked services, and pipelines.

You’ll learn to copy data efficiently across various scenarios, orchestrate pipelines with Control Flow activities, and transform data with Data Flows.

You’ll gain hands-on experience with activities like Lookup, Get Metadata, Filter, and For Each, and explore the capabilities of data flow transformations such as Source, Join, Select, Sort, Exists, Derived Column, and many more.

The course emphasizes practical application, guiding you through the process of parameterizing your Data Factory components for reusability and flexibility.

You’ll also learn how to monitor your pipelines effectively, both visually and using Azure Monitor, ensuring optimal performance and data flow.

The real-world scenarios addressed in the course are particularly valuable.

You’ll delve into techniques like incremental data load, essential for managing large data sets efficiently.
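To make the idea of an incremental load concrete, here is a minimal Python sketch of the common "watermark" pattern: only rows modified since the last successful run are picked up. This is an illustration under stated assumptions, not the course’s own implementation; the `modified_at` field, the `incremental_load` function, and the sample rows are all hypothetical.

```python
from datetime import datetime


def incremental_load(source_rows, last_watermark):
    """Return rows changed after last_watermark, plus the new watermark.

    Hypothetical helper: 'modified_at' is an assumed column name.
    """
    new_rows = [r for r in source_rows if r["modified_at"] > last_watermark]
    # If nothing changed, keep the old watermark so the next run starts from it.
    new_watermark = max(
        (r["modified_at"] for r in new_rows), default=last_watermark
    )
    return new_rows, new_watermark


# Hypothetical source table with a last-modified timestamp per row.
source = [
    {"id": 1, "modified_at": datetime(2024, 1, 1)},
    {"id": 2, "modified_at": datetime(2024, 1, 5)},
    {"id": 3, "modified_at": datetime(2024, 1, 9)},
]

rows, watermark = incremental_load(source, datetime(2024, 1, 3))
print([r["id"] for r in rows])

# A second run with the stored watermark finds nothing new to copy.
rows_again, watermark_again = incremental_load(source, watermark)
print(len(rows_again))
```

In a real pipeline the watermark would typically be persisted between runs (for example in a control table) rather than held in a variable.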

You’ll also explore the implementation of Slowly Changing Dimensions (SCDs), a crucial concept for handling data changes in a data warehouse environment.

The included labs, featuring provided artifacts, offer hands-on practice with these concepts, solidifying your understanding.

While the course provides a solid foundation in Azure Data Factory, it’s worth noting that it’s primarily focused on the fundamentals.

If you’re looking for an advanced course that dives deeper into specific aspects of Data Factory, such as advanced data transformation techniques or complex data integration scenarios, this might not be the ideal choice.

Still, it provides a strong foundation for building data pipelines and can serve as a springboard for further exploration of advanced Azure Data Factory concepts.

Azure Data Factory for Beginners - Build Data Ingestion

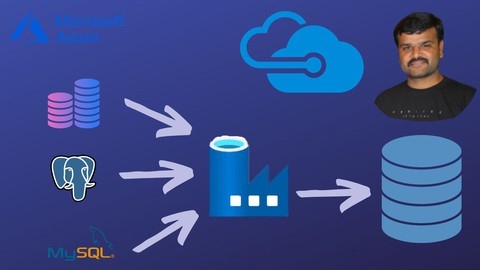

Best Udemy course for beginners and intermediate professionals wanting to master Azure Data Factory engineering skills.

This comprehensive course empowers you to become a skilled Azure Data Factory engineer, guiding you from beginner to proficient.

You’ll embark on a journey covering core data factory concepts alongside a valuable bonus section on infrastructure as code using Bicep.

Starting with the fundamentals, you’ll set up your environment and build your first data pipeline using the Azure Data Factory interface.

You’ll learn to create and manage various resources, including data factory instances and storage accounts, and to use Data Lake Storage Gen2 for efficient data storage.

The course dives deep into metadata-driven ingestion, teaching you how to create Active Directory users, establish security permissions, and design a metadata database using Azure Data Studio.

You’ll gain hands-on experience with creating tables, stored procedures, and optimizing your data management practices.

Moving into event-driven ingestion, you’ll discover how to implement triggers based on events within Azure Storage.

You’ll learn to build datasets and pipelines and to use Event Grid for automated data movement, while exploring advanced techniques like dynamic datasets and orchestration pipelines to create robust and efficient data ingestion solutions.

The course concludes with a valuable bonus section on provisioning infrastructure with Bicep.

You’ll dive into Git, Azure DevOps, and Bicep, mastering the art of infrastructure as code.

You’ll learn to build YAML pipelines, compile Bicep files, and deploy infrastructure resources like Log Analytics and Data Factory using Bicep modules.

This section provides a practical foundation for automating your infrastructure provisioning and ensuring consistency across deployments.

The inclusion of a Bicep module provides a valuable advantage for those looking to master infrastructure automation within the Azure ecosystem.

While the course is suitable for beginners, its advanced sections will also be beneficial for experienced data engineers seeking to expand their skillset.

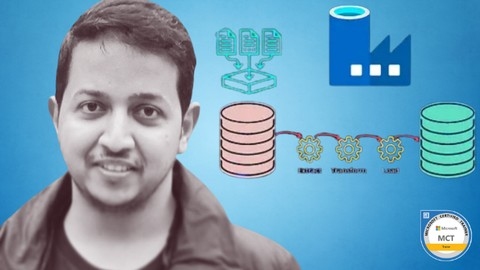

[8 Course BUNDLE]: DP-203: Data Engineering on MS Azure

Best Udemy course for beginners looking to master Azure Data Factory and related technologies.

This bundle is a structured journey that starts with the fundamentals of cloud computing, ensuring a solid foundation before diving into the intricacies of Azure SQL Database.

You’ll learn how to create and manage these databases, and the labs on elastic pools, active geo replication, and failover groups provide hands-on experience with crucial real-world scenarios.

The heart of the course lies in Azure Data Factory v2, a powerful tool for building data pipelines.

This section equips you with the skills to efficiently copy, transform, and monitor data flows.

The labs provide a wealth of practical experience, covering activities like copying data, retrieving metadata, filtering, and appending, as well as advanced features like data flows, parameters, and monitoring.

What sets this bundle apart is its emphasis on real-world use cases.

You’ll delve into implementing incremental data loads and slowly changing dimensions (SCD), applying your newly acquired knowledge to solve common challenges encountered in data management.

Beyond Data Factory, the bundle offers modules on essential Azure services like Data Lake Storage Gen2, Synapse Analytics, Cosmos DB, and Stream Analytics.

You’ll gain an understanding of these services and how they integrate with Data Factory, providing a holistic view of the Azure data platform.

For those interested in cloud-based data engineering and machine learning, the Azure Databricks module is a valuable addition.

You’ll learn to create and manage workspaces and utilize Databricks to process and analyze data effectively.

And finally, you’ll discover Power BI, a powerful business intelligence tool.

This section will equip you with the skills to connect to data sources, build compelling reports, and share your insights with stakeholders.

The bundle also includes practice tests for the DP-203, DP-200, and DP-201 exams, making it a useful resource for aspiring Azure data engineers.

The combination of theoretical knowledge, practical labs, and real-world use cases provides a solid foundation for building a successful career in Azure data management.

Azure Data Factory | Data Engineering on Azure and Fabric

Best Udemy course for data engineers seeking comprehensive Azure Data Factory training with a focus on Fabric.

This comprehensive course is a journey into the world of Azure Big Data Analytics, equipping you with the skills to design, build, and deploy robust data pipelines.

You’ll start with a solid foundation in Azure storage, learning to work with diverse file formats like JSON and Parquet.

The real meat of the course lies in Azure Data Factory (ADF), where you’ll master the art of data movement and transformation.

You’ll gain hands-on experience with powerful tools like Copy Activity and Data Flow, enabling you to handle various data types, cleanse data, and perform advanced operations like joins and aggregations.

Imagine transforming raw data into actionable insights!

But this course doesn’t stop at the technical aspects.

It guides you through scheduling and chaining pipelines to automate your data processes, introducing you to triggers like tumbling windows and storage events.

This allows you to set up pipelines that execute on a schedule or react to real-time data changes.
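As a rough illustration of what a tumbling window is, the Python sketch below splits a time range into fixed-size, contiguous, non-overlapping intervals, which is the kind of slice a tumbling window trigger hands to each pipeline run. It is a conceptual sketch only and does not call any Azure API; the `tumbling_windows` function and the sample dates are hypothetical.

```python
from datetime import datetime, timedelta


def tumbling_windows(start, end, size):
    """Yield (window_start, window_end) pairs covering [start, end).

    Windows are fixed-size, contiguous, and never overlap.
    A trailing partial window is not emitted.
    """
    current = start
    while current + size <= end:
        yield current, current + size
        current += size


windows = list(
    tumbling_windows(
        datetime(2024, 1, 1, 0, 0),
        datetime(2024, 1, 1, 3, 0),
        timedelta(hours=1),
    )
)

for window_start, window_end in windows:
    # Each pipeline run would process only the data inside its own window.
    print(window_start.isoformat(), "->", window_end.isoformat())
```

Because each window ends exactly where the next begins, every record falls into one and only one run, which is what makes this trigger type a natural fit for time-sliced loads.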

Security is paramount, and you’ll learn to control access to your data using Azure Key Vault and managed identities.

This ensures that your data remains safe and secure.

The course delves into the exciting realm of Microsoft Fabric, introducing you to OneLake and Fabric Capacities.

You’ll set up a Fabric trial and explore the power of Dataflow Gen2 for data pipeline creation.

Finally, the course takes you through a complete CI/CD workflow using Azure DevOps, seamlessly integrating your ADF pipelines with your development processes.

You’ll gain a deep understanding of ADF, Azure storage, and data transformation, making you a highly valuable asset to any data engineering team.

Azure Data Factory Training–Continuous Integration/Delivery

Best Udemy course for data engineers seeking comprehensive Azure Data Factory training with a focus on Continuous Integration and Continuous Delivery.

This course provides a comprehensive exploration of Azure Data Factory, with a strong emphasis on establishing a robust Continuous Integration and Continuous Delivery (CI/CD) pipeline for your data workflows.

You’ll delve into the fundamentals of Azure Data Factory, laying a solid foundation for more advanced concepts.

Expect to learn how to leverage parameters and variables for dynamic pipelines, master the creation of self-hosted integration runtimes, and explore the capabilities of data flow activities.

The course guides you through complex data transformations, including Slowly Changing Dimensions (SCD) Type 1, using control flow activities such as Lookup, ForEach, and Wait.

Beyond core functionality, you’ll discover how to seamlessly integrate Azure Data Factory with a suite of essential tools, including Power BI, GitHub, Azure DevOps, and Azure Key Vault.

These integrations equip you to build a secure and reliable CI/CD process for your data pipelines.

You’ll also gain proficiency in utilizing triggers to automate pipeline execution based on specific events.

The course further enhances its practical value by incorporating hands-on projects that mirror real-world scenarios.

For example, you’ll learn how to incrementally copy new and changed files, implement full and incremental loads for single tables, and configure custom email notifications using Azure Logic Apps.

By the course’s completion, you’ll possess the skills to build, deploy, and maintain data pipelines using Azure Data Factory, along with best practices for implementing effective CI/CD strategies.

The course’s strength lies in its breadth of coverage and its focus on practical applications.

That said, the pace of the material varies, and you may need to supplement your learning with additional resources for deeper dives into specific topics.

Azure Data Factory : from Zero to Hero of Azure Data Factory

Best Udemy course for data engineers and analysts seeking comprehensive Azure Data Factory training.

This course offers a comprehensive exploration of Azure Data Factory (ADF), guiding you from foundational concepts to advanced techniques.

You’ll begin by getting acquainted with the fundamentals of cloud computing and setting up your own Azure environment.

The course then delves into the building blocks of ADF, including pipelines, activities, linked services, datasets, and triggers.

You’ll learn to copy data from Blob to ADLS, manage multiple files, and apply sophisticated filters.

The curriculum covers advanced data manipulation techniques, such as implementing parameterized components, leveraging variables, and mastering control flow activities like “Filter,” “For each,” “Lookup,” and “Wait.”

You’ll even tackle practical use cases, like bulk copying files with lookup tables and saving pipeline run details for audit purposes.

Get ready for a deep dive into Mapping Data Flows, where you’ll master source data validation, schema drift handling, and error row management.

You’ll explore a wide range of transformations, including Select, Filter, Conditional Split, Union, Exists, Sort, Rank, Aggregate, Join, Pivot, Lookup, Derived Column, New Branch, Surrogate Key, Flatten, Alter Row, and Assert.

The course also covers advanced concepts like expression language, dynamic queries, and triggers.

The course doesn’t stop at theory.

You’ll gain practical skills through real-world scenarios, including implementing fact and dimension tables, understanding slowly changing dimensions (SCD), and mastering SCD Type 1 and Type 2 implementations.
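For readers new to slowly changing dimensions, the difference between Type 1 and Type 2 can be sketched in a few lines of Python. Type 1 overwrites the old value, while Type 2 closes the current row and adds a new version so history is preserved. This is a simplified illustration, not how the course builds it in ADF; the function names, the `city` attribute, and the row layout are hypothetical.

```python
from datetime import date


def apply_scd1(dimension, key, new_attrs):
    """Type 1: overwrite the attributes in place; no history is kept."""
    dimension[key] = dict(new_attrs)
    return dimension


def apply_scd2(history, key, new_attrs, effective):
    """Type 2: expire the current row and append a new current version."""
    for row in history:
        if row["key"] == key and row["is_current"]:
            if row["attrs"] == new_attrs:
                return history  # nothing changed, keep the current row
            row["is_current"] = False
            row["valid_to"] = effective
    history.append(
        {
            "key": key,
            "attrs": dict(new_attrs),
            "valid_from": effective,
            "valid_to": None,
            "is_current": True,
        }
    )
    return history


# Type 1: the customer's old city is simply lost.
dim = {"C1": {"city": "Oslo"}}
apply_scd1(dim, "C1", {"city": "Bergen"})
print(dim)

# Type 2: both versions survive, with validity dates.
hist = []
apply_scd2(hist, "C1", {"city": "Oslo"}, date(2024, 1, 1))
apply_scd2(hist, "C1", {"city": "Bergen"}, date(2024, 6, 1))
for row in hist:
    print(row)
```

Type 1 is simpler and keeps the table small; Type 2 costs extra rows but lets reports answer questions about what was true at a given point in time.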

You’ll tackle real-world use cases like copying files modified within the last day, bulk copying, dynamically appending source filenames during copy, and implementing incremental loading.

The curriculum is well-structured, covering a wide range of concepts from the basic to the advanced.

Azure Data Factory +Synapse Analytics End to End ETL project

Best Udemy course for data professionals seeking to enhance their skills in building end-to-end ETL projects using Azure Data Factory and Synapse Analytics.

This course provides a comprehensive deep dive into building end-to-end ETL (Extract, Transform, Load) projects using Azure Data Factory and Synapse Analytics.

You’ll be guided through real-world scenarios, mastering key skills in data ingestion, transformation, loading, orchestration, and reporting.

The course starts with a thorough environment setup, covering the creation of Azure Data Factory, Azure Data Lake Storage Gen2, and an Azure Synapse Analytics workspace.

You’ll gain valuable insights into creating a budget for your project and optimizing costs for Azure SQL Database.

Data ingestion is tackled in detail, introducing you to various integration runtimes, including the critical self-hosted integration runtime.

You’ll practice copying data from on-premises file storage to Azure Data Lake Storage and learn effective methods for incremental data loading based on file modification dates and names.

The course then delves into the critical area of data transformation.

You’ll explore Azure Synapse Analytics, create a Spark pool, and leverage PySpark within notebooks to transform data.

Practical exercises include removing duplicates, handling null values, and creating new columns based on specific conditions.

You’ll also learn to change data types and write transformed data back to the data lake.
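The transformation steps described above (deduplication, null handling, conditional columns, and type changes) can be sketched as follows. The course does this with PySpark in Synapse notebooks; the sketch below deliberately uses plain Python instead so it runs anywhere, and the `order_id`, `amount`, and `size` names and the threshold of 100 are hypothetical.

```python
def clean_rows(rows):
    """Deduplicate on order_id, fill null amounts, cast types, add a flag column."""
    seen = set()
    cleaned = []
    for row in rows:
        # Remove duplicates: keep only the first row for each order_id.
        if row["order_id"] in seen:
            continue
        seen.add(row["order_id"])

        # Handle nulls and change the data type from string to float.
        amount = float(row["amount"]) if row["amount"] is not None else 0.0

        cleaned.append(
            {
                "order_id": row["order_id"],
                "amount": amount,
                # New column derived from a condition on another column.
                "size": "large" if amount >= 100 else "small",
            }
        )
    return cleaned


# Hypothetical raw input with a duplicate row and a missing amount.
raw = [
    {"order_id": 1, "amount": "250.0"},
    {"order_id": 1, "amount": "250.0"},
    {"order_id": 2, "amount": None},
]

cleaned = clean_rows(raw)
for row in cleaned:
    print(row)
```

In PySpark the same steps would be expressed as DataFrame operations rather than a Python loop, but the logic per row is the same.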

Data loading is covered next, with instructions on connecting to Azure SQL Database using SSMS and loading the transformed data.

You’ll practice copying data from the data lake to the SQL database and learn how to troubleshoot common errors.

The course goes beyond the basics by focusing on project enhancements.

You’ll learn how to optimize data copying from on-premises storage to the data lake and implement strategies for transforming only the latest data files in Synapse notebooks.

Orchestration, a vital skill for automated processes, is covered extensively.

You’ll learn to create automated pipelines using Azure Data Factory and implement email notifications for pipeline failures.

You’ll also master the orchestration of pipelines for efficient data loading into SQL Database.

Finally, the course introduces you to data reporting using Power BI, offering hands-on experience in creating reports and visualizations.

You’ll also be introduced to the concept of Continuous Integration and Continuous Deployment (CI/CD) for Azure Data Factory, learning how to automate the build and deployment process for your project.

This course is particularly well-suited for data professionals seeking to enhance their skills in building and managing data pipelines within the Azure ecosystem.

The hands-on approach and focus on practical applications make it a valuable resource for individuals seeking to gain real-world experience in data engineering with Azure Data Factory and Synapse Analytics.

Masterclass on Azure Data Factory - Data Engineer for 2024

Best Udemy course for beginners who want to master Azure Data Factory fundamentals and advanced pipeline activities.

You’ll start by grasping the foundational elements of ADF, including creating a data factory, understanding Resource Groups, and exploring core concepts like Linked Services and Datasets.

This strong foundation is crucial for connecting to and managing your data sources.

The course delves into practical aspects, such as working with Blob Storage, a key component for storing data within Azure.

You’ll master the use of variables and parameters for dynamic data manipulation, and gain proficiency in JSON structure, which forms the backbone of ADF configurations.
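To give a feel for the JSON behind an ADF pipeline, the Python sketch below builds a small pipeline definition as a dictionary and prints it as JSON. Treat it as an approximation of the general shape, not an exact or complete schema; the pipeline, dataset, parameter, and variable names are all hypothetical, and a definition exported from your own data factory is the authoritative reference.

```python
import json

# Approximate shape of a pipeline definition; every name here is made up.
pipeline = {
    "name": "CopySalesFiles",
    "properties": {
        # Parameters are supplied when the pipeline run starts.
        "parameters": {"sourceFolder": {"type": "String"}},
        # Variables can be changed during the run, e.g. by Set Variable.
        "variables": {"status": {"type": "String"}},
        "activities": [
            {
                "name": "CopyFromBlob",
                "type": "Copy",
                "inputs": [
                    {"referenceName": "SourceDataset", "type": "DatasetReference"}
                ],
                "outputs": [
                    {"referenceName": "SinkDataset", "type": "DatasetReference"}
                ],
            }
        ],
    },
}

pipeline_json = json.dumps(pipeline, indent=2)
print(pipeline_json)

# Reading it back shows how the activity list can be inspected in code.
loaded = json.loads(pipeline_json)
activity_types = [a["type"] for a in loaded["properties"]["activities"]]
print(activity_types)
```

Being comfortable with this nesting (pipeline, then properties, then activities) makes it easier to read definitions in source control and to review changes in pull requests.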

The curriculum covers a wide array of pipeline activities, empowering you to create intricate data workflows.

You’ll learn how to utilize the Copy Activity to transfer data between different storage locations, and the Set Variable Activity to dynamically assign values.

You’ll also master activities like Delete, Execute Pipeline, Fail, Get Metadata, Lookup, and Wait, which are essential for controlling the flow and logic of your data pipelines.

The course then transitions into advanced topics like conditional and loop activities.

You’ll learn to leverage powerful tools like the Switch, Filter, ForEach, and Until activities, allowing you to build adaptable and sophisticated data pipelines.

You’ll also gain hands-on experience with Data Flow Transformations, including Conditional Split, Exists, Union, Join, Aggregate, Surrogate Key, Select, Lookup, Derived Column, Pivot, Unpivot, Rank, Window, Flatten, and Filter transformations, which provide the means to manipulate and transform data within your pipelines.

The course further extends your knowledge by introducing you to real-world scenarios.

You’ll learn to dynamically copy files between storage locations, fetch file names from folders, and handle specific file patterns.

You’ll also delve into ADF pipeline logging, learn how to process CSV files, and understand techniques for copying missed files.

The course also shows how ADF integrates with other Azure services.

You’ll learn how to leverage Azure Logic Apps for email notification, enabling you to receive timely updates about your pipeline execution.

Additionally, you’ll discover the capabilities of Azure Databricks, including setting up JDBC connections to SQL Server, mounting data from Azure Data Lake Storage (ADLS) or Blob storage, and reading CSV files with schemas and headers.

Introduction To Azure Data Factory

Best Udemy course for beginners who want to learn Azure Data Factory fundamentals.

This course provides a solid foundation for building and managing data pipelines within Azure Data Factory.

You’ll jump right into the action, starting by setting up your own Azure Free Tier account and getting acquainted with the core concepts of Azure Data Factory.

The hands-on approach is a standout feature.

You’ll gain practical experience by creating an Azure SQL Database, an Azure Storage Account, and your very own Azure Data Factory instance.

This hands-on experience is crucial, as you’ll learn how to connect to your newly built SQL Database and execute SQL statements, getting a clear grasp of data flow within your pipelines.

You’ll master the creation of Linked Services, which are essential for connecting your data sources and sinks.

This includes configuring connections to your SQL database and Azure Storage Account.

Next, you’ll delve into defining Data Sets, the blueprints for the data you’ll be moving.

Finally, you’ll build Pipelines, which define the sequence of steps that move and process that data.

The course concludes with a bonus section offering additional resources for further exploration of Azure Data Factory.

You’ll be well-equipped to integrate data from diverse sources and transform it for analysis, reporting, and other critical applications.

![[8 Course BUNDLE]: DP-203: Data Engineering on MS Azure](/img/best-azure-data-factory-courses-udemy/3150056_8CourseBUNDLEDP-203DataEngineeringonMSAzure.jpg)