Data engineering is a rapidly growing field that focuses on designing, building, and maintaining the systems that collect, process, and store vast amounts of data.

Data engineers play a crucial role in ensuring that data is accessible, reliable, and ready for analysis by data scientists and other stakeholders.

By learning data engineering, you can gain valuable skills that are in high demand across various industries, enabling you to build robust data pipelines, optimize data storage, and contribute to data-driven decision-making.

Finding the right data engineering course can be overwhelming, especially with the abundance of options available online.

You’re looking for a comprehensive program that covers the core concepts, provides practical experience, and prepares you for real-world challenges in the field.

You want a course that’s engaging, taught by industry experts, and fits your learning style and career goals.

For the best data engineering course overall, we recommend the Data Engineering Foundations Specialization on Coursera.

This specialization provides a solid introduction to the fundamental concepts and tools of data engineering, covering topics like data modeling, data warehousing, big data processing, and data pipelines.

It’s a great starting point for anyone new to the field, offering a comprehensive overview of the data engineering landscape.

While the Data Engineering Foundations Specialization is our top pick, there are other excellent courses available that cater to different learning preferences and career aspirations.

Keep reading to explore our curated list of recommendations, including courses focusing on specific cloud platforms, big data tools, and advanced data engineering techniques.

Data Engineering Foundations Specialization

Provider: Coursera

The Data Engineering Foundations Specialization on Coursera provides a solid starting point for your data engineering journey.

You will learn the basics of data engineering, including the roles of data engineers, data scientists, and data analysts, and how they interact within the data ecosystem.

The courses cover various data structures, file formats, common data sources, and popular programming languages used in the field.

You will explore the data engineering ecosystem, diving into different types of data repositories, including relational databases (RDBMS) like MySQL and PostgreSQL, NoSQL databases, data warehouses, data marts, data lakes, and data lakehouses.

The specialization introduces big data processing tools such as Apache Hadoop and Apache Spark, which are designed for handling massive datasets.

It also covers important concepts like ETL (Extract, Transform, Load), ELT (Extract, Load, Transform), data pipelines, and data integration, crucial for moving and preparing data for analysis.
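To make the ETL/ELT distinction concrete, here is a minimal sketch. It uses Python's standard-library `sqlite3` module as a stand-in target database, which is an assumption for illustration; the specialization's labs use their own databases. The records, table names, and cleaning rules are all hypothetical. The two functions differ only in where the transform happens: in Python before loading (ETL), or in SQL after the raw data has been loaded (ELT).

```python
import sqlite3

# Hypothetical raw records, standing in for data extracted from a source system.
RAW_ORDERS = [
    ("1", " alice ", "19.99"),
    ("2", "BOB", "5.00"),
    ("3", "carol", "12.50"),
]


def etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in Python *before* loading into the target table."""
    cleaned = [
        (int(order_id), name.strip().title(), float(amount))
        for order_id, name, amount in RAW_ORDERS
    ]
    conn.execute("CREATE TABLE orders_etl (id INTEGER, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def elt(conn: sqlite3.Connection) -> None:
    """ELT: load the raw strings first, then transform inside the database with SQL."""
    conn.execute("CREATE TABLE orders_raw (id TEXT, customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", RAW_ORDERS)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT CAST(id AS INTEGER) AS id,
               UPPER(SUBSTR(TRIM(customer), 1, 1)) || LOWER(SUBSTR(TRIM(customer), 2)) AS customer,
               CAST(amount AS REAL) AS amount
        FROM orders_raw
        """
    )


conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
print(conn.execute("SELECT * FROM orders_etl ORDER BY id").fetchall())
print(conn.execute("SELECT * FROM orders_elt ORDER BY id").fetchall())
```

Both paths end with the same clean table. ELT keeps the raw copy in the target and relies on the database's compute for the transform. ETL cleans the data before it ever reaches the target.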

The specialization guides you through the entire data engineering lifecycle, from architecting data platforms and designing efficient data stores to gathering, importing, cleaning, querying, and analyzing data.

You will also delve into crucial aspects like data security, governance, and compliance, essential for responsible data management.

The program emphasizes hands-on learning.

You will complete labs using relational databases, loading and querying data to solidify your understanding.

Through this specialization, you will develop a strong understanding of Python programming, a cornerstone of data engineering.

You will learn Python fundamentals, including data types, expressions, variables, and data structures. You will also explore libraries such as pandas and NumPy for data manipulation and analysis, and Beautiful Soup for parsing web pages.

You will also gain practical experience with web scraping and data collection using APIs within Jupyter Notebooks, a popular environment for data scientists.

Data Engineering with AWS Nanodegree

Provider: Udacity

This Udacity Nanodegree program equips you with the essential skills for a successful data engineering career.

You’ll start by mastering data modeling techniques, delving into both relational and NoSQL models.

You’ll learn how to use Apache Cassandra, a powerful NoSQL database, to design and implement effective data models.

You’ll then transition into the world of cloud data warehouses, exploring their architecture, technologies, and implementation on AWS.

You’ll gain hands-on experience with ELT (Extract, Load, Transform) techniques, learning how to build and manage data warehouses in the cloud using AWS services.

Next, you’ll explore Spark and data lakes, where you’ll learn how to process massive datasets efficiently.

You’ll become familiar with the big data ecosystem, understand the concepts behind data lakes, and master the fundamentals of Spark, a powerful distributed processing framework.

You’ll gain practical experience by using Spark on AWS to ingest and organize data within a lakehouse architecture.

Finally, you’ll learn how to automate data pipelines, a crucial skill for any data engineer.

This section covers the core concepts of data pipelines, teaches you how to use Airflow, a popular workflow management system, and guides you through implementing data pipelines on AWS.

You’ll also delve into data quality, understanding its importance and learning how to ensure it within your data pipelines.
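Here is a rough illustration of pipeline data quality checks. It is a plain-Python sketch of two common checks: the table is not empty, and a key column has no nulls. The function names and sample rows are hypothetical, and the sketch deliberately does not use Airflow's API. In an Airflow pipeline, logic like this would typically run inside a task that fails when any check fails.

```python
from typing import Any, Callable, Dict, List, Optional

Row = Dict[str, Any]
Check = Callable[[List[Row]], Optional[str]]  # returns an error message, or None if the check passes


def check_not_empty(rows: List[Row]) -> Optional[str]:
    """Fail if the batch has no rows at all (e.g. an upstream load silently produced nothing)."""
    return "table is empty" if not rows else None


def check_no_nulls(column: str) -> Check:
    """Build a check that fails if any row has a missing value in `column`."""
    def check(rows: List[Row]) -> Optional[str]:
        bad = sum(1 for row in rows if row.get(column) is None)
        return f"{bad} null value(s) in '{column}'" if bad else None
    return check


def run_quality_checks(rows: List[Row], checks: List[Check]) -> List[str]:
    """Run every check and collect failure messages; an empty list means the batch passed."""
    failures = []
    for check in checks:
        message = check(rows)
        if message is not None:
            failures.append(message)
    return failures


# Hypothetical batch with one bad row.
songs = [
    {"song_id": "S1", "title": "Intro"},
    {"song_id": None, "title": "Outro"},
]
failures = run_quality_checks(songs, [check_not_empty, check_no_nulls("song_id")])
if failures:
    # In an orchestrated pipeline, raising an exception here would mark the task as failed.
    print("Data quality check failed:", failures)
```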

Throughout the program, you’ll be applying your skills to practical projects, using real-world datasets to build a portfolio showcasing your abilities.

Data Engineering with Microsoft Azure Nanodegree

Provider: Udacity

This Nanodegree program equips you with the skills to design data models using both relational databases and NoSQL databases such as Apache Cassandra.

You learn about cloud data warehouses, mastering the principles of ELT (Extract, Load, Transform) and implementing data warehouses in Azure for real-world data analysis, such as analyzing bike share data.

You dive into the world of big data with Spark, a powerful framework for processing large datasets.

You discover how to wrangle data, debug and optimize Spark applications, and leverage the capabilities of Azure Databricks for building data lakes and lakehouses.

The program guides you through constructing a data lake for bike share data analytics using Azure Databricks.

You explore the creation of data pipelines using Azure Data Factory, learning about crucial components and mastering data transformation techniques within these pipelines.

You learn how to ensure data quality and run pipelines in a production environment.

You gain practical experience by constructing data integration pipelines for real-world scenarios like NYC Payroll Data Analytics.

The program also helps you refine your resume, optimize your LinkedIn profile, and leverage your GitHub account to highlight your newly acquired expertise.

Apache Spark 3 - Spark Programming in Python for Beginners

Provider: Udemy

This data engineering course begins with the fundamentals of big data and data lakes.

You will explore the history of Hadoop, delve into its architecture, and understand how it evolved.

You will learn what Apache Spark and Databricks Cloud are and how they play a crucial role in big data processing.

The course guides you through setting up your development environment, either locally using an IDE like PyCharm or in the cloud using Databricks Community Cloud.

You will create your first Spark application and dive into core concepts like Spark DataFrames, Spark Tables, and Spark SQL for data manipulation and analysis.

You will then explore Spark’s architecture and execution model.

You will learn several ways to run Spark programs: in local mode, on a cluster, or by submitting them with spark-submit.

You will understand Spark’s distributed processing model, different execution modes, and how Spark efficiently processes vast amounts of data.

You will gain a deep understanding of how Spark works under the hood.

You will learn how to create a Spark project, configure application logs, work with Spark Sessions, and understand how DataFrames interact with partitions and executors.

The course covers Spark Jobs, Stages, Tasks, and how to interpret execution plans, giving you a comprehensive understanding of Spark’s inner workings.

Finally, you will apply your knowledge to a real-world capstone project.

This project involves transforming data using Spark, integrating with Kafka for real-time data streaming, and setting up a CI/CD pipeline for automated deployment and testing.

This practical experience solidifies your understanding and equips you to build and deploy your own Spark applications.

The Ultimate Hands-On Hadoop: Tame your Big Data!

Provider: Udemy

This course starts you off by teaching you about the Hadoop ecosystem, including important parts like HDFS and MapReduce.

You even get to install the Hortonworks Data Platform Sandbox on your own computer.

This means you can run a single-node Hadoop cluster in a virtual machine instead of needing a bunch of machines.

Once you have that set up, you’ll learn how to use HDFS, the storage system for Hadoop, and then how to analyze that data using MapReduce.

You will then discover how to work with data using Apache Pig, whose scripting language, Pig Latin, is designed to make analyzing large amounts of information easier.

You’ll even use Pig to solve real problems, like figuring out the oldest 5-star movie in a database of movies.
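In Pig, a problem like this is written as a dataflow: LOAD the ratings, GROUP them by movie, compute an AVG with FOREACH, FILTER the highly rated movies, JOIN with movie metadata, and ORDER by release date. The sketch below is plain Python, not Pig Latin. It mirrors those steps on a tiny, made-up dataset so the logic is easy to follow. Treating "five-star" as an average rating above 4.0 is an assumption made here for illustration.

```python
from collections import defaultdict
from datetime import date

# Hypothetical sample data: (user_id, movie_id, rating) and movie_id -> (title, release date).
ratings = [
    (1, 10, 5), (2, 10, 4), (3, 10, 5),
    (1, 20, 5), (2, 20, 5),
    (1, 30, 2), (2, 30, 3),
]
movies = {
    10: ("Movie A", date(1995, 6, 1)),
    20: ("Movie B", date(1972, 3, 24)),
    30: ("Movie C", date(1960, 1, 1)),
}

# GROUP ratings BY movie_id and compute each movie's average rating.
totals = defaultdict(lambda: [0, 0])  # movie_id -> [sum of ratings, number of ratings]
for _user, movie_id, rating in ratings:
    totals[movie_id][0] += rating
    totals[movie_id][1] += 1
averages = {movie_id: s / n for movie_id, (s, n) in totals.items()}

# FILTER to "five-star" movies (assumed here to mean an average rating above 4.0).
five_star = [movie_id for movie_id, avg in averages.items() if avg > 4.0]

# JOIN with movie metadata and ORDER BY release date, oldest first.
oldest = sorted(five_star, key=lambda movie_id: movies[movie_id][1])
print(movies[oldest[0]][0])
```

"Movie C" is the oldest title overall, but its average rating is too low, so the filter removes it before sorting.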

Next, you’ll learn Spark, which is a powerful tool for quickly analyzing very large datasets.

The course doesn’t just stop at the basics.

You’ll also learn how to integrate what you learned with other common database tools you might encounter in a company.

You will work with relational databases, like MySQL, and learn how they are different from NoSQL databases, such as HBase and Cassandra.

You’ll also learn how to use specialized tools for asking questions about your data, such as Drill, Phoenix, and Presto.

Finally, you’ll learn how to handle streaming data, meaning data that is generated continuously, such as website traffic.

You’ll learn about tools like Kafka and Flume, as well as how to process all that data in real-time using Spark Streaming and Apache Storm.

Throughout the course, you’ll gain practical, hands-on experience by working through activities and exercises, and you’ll even get to design your own Hadoop-based systems to tackle real-world problems.

IBM Data Warehouse Engineer Professional Certificate

Provider: Coursera

The IBM Data Warehouse Engineer Professional Certificate on Coursera equips you with the skills needed to excel in data warehousing.

The program starts with the fundamentals of data engineering, including different data structures, file formats, and the roles of key professionals like data engineers and data scientists.

You’ll then explore big data tools like Apache Hadoop and Spark, along with essential processes like ETL (Extract, Transform, Load) and data pipelines.

The certificate dives deep into relational databases, focusing on popular options like IBM Db2, MySQL, and PostgreSQL.

You’ll learn to create and manage tables using tools like phpMyAdmin and pgAdmin while mastering SQL, the language for database queries.

You’ll start with basic operations like creating, reading, updating, and deleting data, then progress to advanced techniques such as filtering, ordering, and joining data from multiple tables.
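Here is a compact illustration of those SQL operations: create, read, update, delete, filtering, ordering, and a join across two tables. The sketch below uses Python's built-in `sqlite3` module in place of the MySQL, PostgreSQL, or Db2 instances used in the certificate, which is a substitution made only so the example runs anywhere. The tables and rows are hypothetical. The core SQL shown is standard and should look very similar in those systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE departments (dept_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE employees (
        emp_id  INTEGER PRIMARY KEY,
        name    TEXT NOT NULL,
        salary  INTEGER NOT NULL,
        dept_id INTEGER REFERENCES departments(dept_id)
    );
    """
)

# Create: insert rows (placeholders keep values out of the SQL string).
conn.executemany("INSERT INTO departments VALUES (?, ?)", [(1, "Data"), (2, "Sales")])
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?, ?)",
    [(1, "Ana", 95000, 1), (2, "Ben", 70000, 2), (3, "Chen", 88000, 1), (4, "Dee", 60000, 2)],
)

# Update and delete.
conn.execute("UPDATE employees SET salary = salary + 5000 WHERE name = ?", ("Ben",))
conn.execute("DELETE FROM employees WHERE name = ?", ("Dee",))

# Read: filter (WHERE), order (ORDER BY), and join two tables.
rows = conn.execute(
    """
    SELECT e.name, d.name AS department, e.salary
    FROM employees AS e
    JOIN departments AS d ON e.dept_id = d.dept_id
    WHERE e.salary > 72000
    ORDER BY e.salary DESC
    """
).fetchall()
print(rows)
```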

You’ll also master Linux commands and shell scripting, essential skills for data engineers.

This includes navigating directories, managing files, installing software, and automating tasks through scripts.

You’ll even learn to schedule cron jobs for recurring tasks.

The program covers database administration, teaching you to optimize databases, implement security measures, and perform backups and restores.

Finally, you’ll apply your knowledge to a real-world data warehousing project, designing and building a data warehouse using what you’ve learned.

Throughout the certificate, you’ll gain experience with industry-standard Business Intelligence (BI) tools like IBM Cognos Analytics, allowing you to create visualizations and dashboards for data analysis.

DP-203 - Data Engineering on Microsoft Azure

Provider: Udemy

This Udemy course takes you on a journey to becoming a proficient data engineer on the Microsoft Azure platform.

You’ll start with the fundamentals, learning about cloud computing and getting familiar with the Azure Portal.

You’ll even create your own Azure Free Account to start practicing right away.

The course then guides you through the world of data storage.

You’ll gain hands-on experience with Azure Storage accounts, Azure SQL Database, and Azure Data Lake Storage Gen2.

You’ll become comfortable with different file formats, understand how to upload data to the cloud, and even visualize it using Power BI.

You’ll then dive into Transact-SQL (T-SQL), Microsoft’s dialect of SQL used by SQL Server and Azure SQL databases.

Through interactive labs, you’ll master essential concepts like SELECT, WHERE, ORDER BY, and GROUP BY clauses.

You’ll also learn about aggregate functions, table joins, primary and foreign keys, and how to use SQL Server Management Studio.

The course doesn’t stop at the basics: it introduces you to Azure Synapse Analytics, Microsoft’s analytics service for data warehousing.

You’ll discover how to create Azure Synapse workspaces, explore different compute options, and work with external tables.

You’ll learn how to load data efficiently into a dedicated SQL pool using the COPY statement and PolyBase.

The course goes deeper into designing data warehouses, covering concepts like star schema, data distribution, table types, and slowly changing dimensions.
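Slowly changing dimensions come in several types, and Type 2 is the one that preserves history. When a tracked attribute changes, the current row is closed out and a new version is added, instead of the old value being overwritten. In Synapse this would typically be done with SQL or a mapping data flow. The plain-Python sketch below shows only the logic, using a hypothetical customer dimension whose tracked attribute is `city`. The field and function names are illustrative, not taken from the course.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class CustomerDim:
    """One row of a hypothetical customer dimension table using SCD Type 2."""
    surrogate_key: int        # warehouse-generated key that fact tables reference
    customer_id: str          # natural/business key from the source system
    city: str                 # the tracked attribute whose history we keep
    valid_from: date
    valid_to: Optional[date]  # None means "still current"
    is_current: bool


def apply_scd2(dim: List[CustomerDim], customer_id: str, city: str, as_of: date) -> None:
    """Upsert a source record: expire the current row if the city changed, then add a new version."""
    current = next((r for r in dim if r.customer_id == customer_id and r.is_current), None)
    if current is not None and current.city == city:
        return  # no change, nothing to record
    if current is not None:
        # Close out the old version instead of overwriting it, preserving history.
        current.valid_to = as_of
        current.is_current = False
    next_key = max((r.surrogate_key for r in dim), default=0) + 1
    dim.append(CustomerDim(next_key, customer_id, city, as_of, None, True))


dim: List[CustomerDim] = []
apply_scd2(dim, "C42", "Seattle", date(2023, 1, 1))
apply_scd2(dim, "C42", "Seattle", date(2023, 6, 1))  # unchanged: no new row
apply_scd2(dim, "C42", "Denver", date(2024, 3, 15))  # changed: old row expired, new row added
for row in dim:
    print(row)
```

Because each version gets its own surrogate key, facts recorded while the customer lived in Seattle keep pointing at the Seattle row.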

You’ll gain a strong understanding of Azure Data Factory, a service specifically designed for creating data pipelines.

This section covers the entire Extract, Transform, and Load (ETL) process.

You’ll learn about mapping data flows, how to handle duplicate rows in your datasets, and even how to work with JSON data.

You’ll also explore advanced concepts like self-hosted integration runtime, conditional split, and schema drift.

Preparing for Google Cloud Certification: Cloud Data Engineer Professional Certificate

Provider: Coursera

This Coursera program equips you with a solid data engineering foundation on Google Cloud.

You start by grasping the essentials of big data and machine learning, including the data-to-AI lifecycle and core products.

You then differentiate between data lakes and warehouses, understanding their use cases and implementation on Google Cloud.

The program delves into building batch data pipelines, covering methods like EL, ELT, and ETL.

You learn to run Hadoop on Dataproc, utilize Cloud Storage, optimize Dataproc jobs, and build data processing pipelines using Dataflow.

Additionally, you explore tools like Data Fusion and Cloud Composer for managing these pipelines.

You then transition to streaming data pipelines, learning how to use Pub/Sub for handling incoming data and Dataflow for applying aggregations and transformations.
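Windowing is a central idea behind streaming aggregations: events are grouped into fixed (tumbling) windows by their event time, even when they arrive out of order, and an aggregate is computed per window. The sketch below is plain Python and processes a small in-memory list. It is not the Apache Beam/Dataflow API, and it ignores watermarks and late data, which a real streaming engine must handle. The click events are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

Event = Tuple[int, str]  # (event time in seconds, page path)


def fixed_window_counts(events: Iterable[Event], window_seconds: int) -> Dict[int, Counter]:
    """Assign each event to a fixed (tumbling) window by its event time and count pages per window."""
    windows: Dict[int, Counter] = defaultdict(Counter)
    for event_time, page in events:
        window_start = event_time - (event_time % window_seconds)
        windows[window_start][page] += 1
    return dict(windows)


# Hypothetical click events; note (10, "/home") arrives after a later event, as streams often do.
events = [(0, "/home"), (30, "/cart"), (59, "/home"), (75, "/home"), (10, "/home"), (125, "/cart")]
for start, counts in sorted(fixed_window_counts(events, window_seconds=60).items()):
    print(f"[{start}, {start + 60}): {dict(counts)}")
```

Assigning windows by event time rather than arrival time is what lets the late `(10, "/home")` event still land in the first window.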

You also discover how to store processed records in BigQuery or Cloud Bigtable for analysis.

The program then introduces machine learning, teaching you how to incorporate it into your data pipelines using AutoML, Notebooks, and BigQuery ML for customized solutions.

Finally, this program helps you prepare for the Professional Data Engineer (PDE) certification exam.

You review the exam domains, identify your knowledge gaps, and create a personalized study plan for the exam.

This comprehensive program gives you a strong data engineering foundation on Google Cloud and prepares you for the PDE certification exam.

Data Architect Nanodegree

Provider: Udacity

This Udacity Data Architect Nanodegree helps you build the skills needed to become a data architect.

You begin with the basics of data architecture, exploring different database types and how to design them.

You then discover how to create a physical database schema, applying your knowledge to design a realistic HR database.

The program guides you through designing data systems for reporting and analysis.

You explore data warehousing, operational data stores, and how these elements fit into a larger data architecture.

You apply this knowledge by designing a data warehouse for tasks like reporting and online analytical processing (OLAP).

You then delve into big data, learning about its characteristics and the frameworks used for ingestion, storage, and processing.

You explore NoSQL databases and design a scalable data lake architecture, equipping you to handle massive datasets.

Finally, you discover the crucial role of data governance, including metadata management, data quality, and master data management.

You put this into practice by building a data governance system for a fictional company.

The instructors, including Shrinath Parikh and Rostislav Rabotnik, bring real-world experience from companies like Amazon, Google, and Microsoft.

They guide you through practical projects, providing valuable insights to solidify your understanding.

To top it off, you get career support, including guidance on enhancing your LinkedIn profile to stand out in the job market.

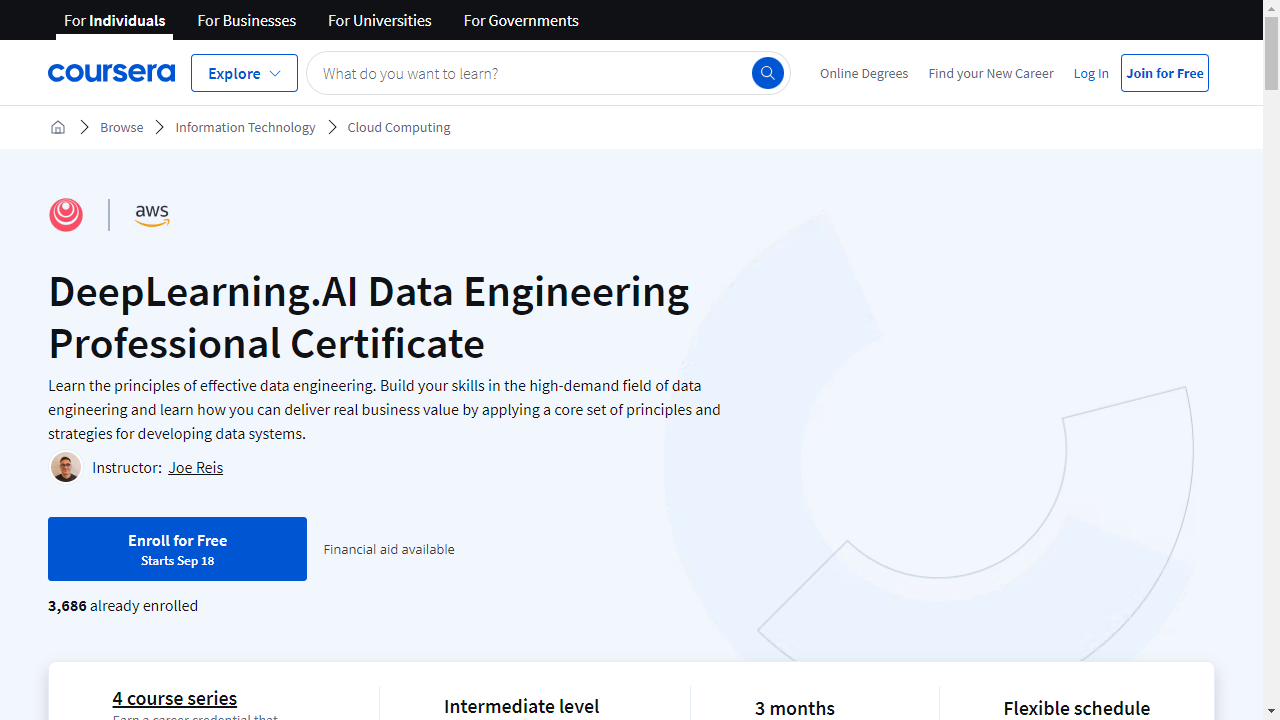

DeepLearning.AI Data Engineering Professional Certificate

Provider: Coursera

This program guides you through the entire data engineering process, from the moment data is born to delivering it into the hands of those who need it.

You’ll begin by understanding how data is generated and learning how to pull data from various sources, including real-world source systems.

You’ll learn to build robust data pipelines on AWS, working with services such as Amazon S3 for storage and Amazon EC2 for compute.

This journey includes learning about different data storage methods, including object storage, block storage, and file storage.

As you progress, you’ll work with graph databases such as Neo4j, using the Cypher query language to uncover relationships and patterns in your data.

You’ll also study data modeling techniques such as normalization, star schema, and data vault.

You’ll become proficient in using dbt for data transformation and explore distributed processing frameworks like Hadoop MapReduce and Spark, essential tools for handling massive datasets.

Finally, you’ll tie everything together by building a capstone project that showcases your newfound data engineering skills.

You’ll graduate with a solid understanding of data storage, the ability to design efficient data pipelines on AWS, and the expertise to model and transform data for both insightful analytics and powerful machine learning applications.
