NVIDIA is a leading technology company known for its powerful GPUs (Graphics Processing Units) that are widely used in various fields, including artificial intelligence, deep learning, and high-performance computing.

If you’re interested in learning about NVIDIA’s technologies and their applications, finding a good course can be a challenge.

There are many options available online, but you want a course that’s both comprehensive and engaging, taught by experts, and tailored to your learning style.

For the best NVIDIA course overall on Coursera, we recommend “AI Infrastructure and Operations Fundamentals” by NVIDIA.

This course provides a comprehensive overview of AI’s role in data center operations, covering topics such as AI fundamentals, GPU technology, data center infrastructure, and NVIDIA’s Colocation Program.

The course also provides practical insights into the design and implementation of AI systems, equipping you with the skills needed to manage AI workloads efficiently.

This is just the tip of the iceberg when it comes to quality NVIDIA courses on Coursera.

Keep reading to discover more options, tailored to specific areas like networking, RDMA programming, and deep learning.

We’ll guide you through courses that meet your specific interests and learning goals, helping you find the perfect NVIDIA course on Coursera.

AI Infrastructure and Operations Fundamentals

Provider: NVIDIA

This course is tailor-made for individuals keen on exploring the intersection of Artificial Intelligence (AI) and data center operations.

The journey begins with an overview of AI’s role in the future of data centers, setting the stage for the transformative power of AI in enhancing data center efficiency and capability.

This overview provides a solid foundation, preparing you for the more detailed exploration to come.

A key part of the course is the introduction to AI, designed to demystify AI concepts in a clear and accessible manner.

Whether you’re new to AI or looking to refresh your knowledge, this section ensures you grasp the fundamentals of AI applications.

A significant focus is placed on GPUs (Graphics Processing Units), a critical component in AI processing.

Because NVIDIA builds the hardware, you’ll get a first-hand view of how GPUs work and of NVIDIA’s own GPU software.

This knowledge is crucial for anyone aiming to specialize in AI tasks that require intensive data processing.

As the course progresses, it delves into technical specifics crucial for data center AI integration, including server considerations, AI clusters, and storage and networking essentials.

These sections are vital for understanding the architecture and maintenance of AI infrastructure.

Practical aspects such as reference architectures offer you a blueprint for AI system implementation, while modules on infrastructure provisioning, management, cluster orchestration, and job scheduling equip you with the skills to manage AI workloads efficiently.

The course also covers the management of data center health, including power and cooling strategies, essential for maintaining operational efficiency and sustainability.

An intriguing component is the NVIDIA Colocation Program, highlighting NVIDIA’s collaboration with data centers to optimize AI workloads, providing a real-world perspective on AI deployment.

The course concludes with a pathway to the NVIDIA-Certified Associate certification, so it both teaches the material and prepares you for a credential that validates your knowledge of AI and data center management.

Introduction to Networking

Provider: NVIDIA

This course offers a comprehensive dive into networking fundamentals, tailored for beginners yet enriching for those with some background knowledge.

The journey begins with the TCP/IP Protocol Suite, the internet’s backbone.

You’ll learn how data travels across networks in a straightforward, understandable manner.

This foundation is critical for anyone aspiring to master networking concepts.
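To make the idea of data traveling over TCP/IP concrete, here is a minimal sketch (not taken from the course) that sends a few bytes over a TCP connection on the local loopback interface and reads them back. The function names `run_echo_server` and `echo_over_tcp` are made up for this illustration; only Python's standard `socket` and `threading` modules are used.

```python
import socket
import threading


def run_echo_server(server_sock):
    """Accept one connection and echo back whatever bytes arrive."""
    conn, _addr = server_sock.accept()
    with conn:
        while True:
            data = conn.recv(1024)
            if not data:  # empty read: the client finished sending
                break
            conn.sendall(data)


def echo_over_tcp(message: bytes) -> bytes:
    """Send `message` to a local echo server over TCP and return the reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server_sock:
        # Port 0 asks the OS to pick any free port on the loopback interface.
        server_sock.bind(("127.0.0.1", 0))
        server_sock.listen(1)
        host, port = server_sock.getsockname()

        server = threading.Thread(target=run_echo_server, args=(server_sock,))
        server.start()

        with socket.create_connection((host, port)) as client:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)  # tell the server no more data is coming
            chunks = []
            while True:
                chunk = client.recv(1024)
                if not chunk:
                    break
                chunks.append(chunk)
        server.join()
    return b"".join(chunks)


if __name__ == "__main__":
    print(echo_over_tcp(b"hello over TCP"))
```

TCP delivers a byte stream rather than discrete messages, which is why the client reads in a loop until the server closes the connection.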

Following that, the course delves into Ethernet Fundamentals.

Ethernet is the most widely used wired networking technology, present in everything from small home setups to vast data centers.

You’ll gain insights into how devices connect and communicate, shedding light on the significance of Ethernet in today’s digital world.

A unique aspect of this course is its focus on Data Center Design Considerations, with a special emphasis on NVIDIA’s contributions.

As NVIDIA stands at the forefront of data center technology, you’ll explore the intricacies of data center design and how NVIDIA’s innovations enhance efficiency and performance.

Offered by NVIDIA, a leader in technological advancements, this course not only equips you with essential networking knowledge but also connects you with the forefront of industry innovations.

It’s structured to be engaging and accessible, ensuring that you walk away with a solid understanding of networking basics and the role of NVIDIA’s technologies in shaping the future.

The Fundamentals of RDMA Programming

Provider: NVIDIA

This course focuses on Remote Direct Memory Access (RDMA), and the skills it teaches complement CUDA programming, especially when optimizing data transfer in GPU-accelerated applications.

The journey begins with understanding RDMA’s significance and its core concepts like Memory Zero Copy and Transport Offload, which streamline data transfer and reduce CPU load.
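As a loose analogy for the zero-copy idea (this is plain Python, not RDMA), the sketch below contrasts copying bytes out of a buffer with taking a `memoryview` that shares the buffer's memory. The buffer contents are invented for the illustration.

```python
buffer = bytearray(b"payload-0000")

copied = bytes(buffer[8:])      # slicing a bytearray copies the bytes out
view = memoryview(buffer)[8:]   # a memoryview slice shares the original memory

buffer[8:12] = b"1234"          # modify the underlying buffer in place

print(copied)        # b'0000': the copy is a stale snapshot
print(bytes(view))   # b'1234': the view sees the update without any copy
```

In RDMA the same principle is applied between the application buffer and the network adapter: data moves directly to and from registered application memory instead of being copied through intermediate kernel buffers.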

You’ll get acquainted with “verbs,” the essential operations in RDMA, and delve into effective memory management for RDMA tasks.

As the course progresses, it delves into RDMA operations such as Send & Receive, RDMA Write, RDMA Read, and Atomic Operations, each catering to different data synchronization needs.

Detailed lessons on Memory Registration, RDMA Send and Receive Requests, and Request Completions equip you with the knowledge to manage RDMA communications comprehensively.
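The relationship between memory registration, posted requests, and completions can be sketched with a toy in-process model. This is not the verbs API and touches no real hardware: `ToyQueuePair`, `MemoryRegion`, and every method name below are hypothetical, chosen only to mirror the concepts listed above.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MemoryRegion:
    """A registered buffer; `key` stands in for the key a real adapter would issue."""
    buffer: bytearray
    key: int


@dataclass
class ToyQueuePair:
    """Hypothetical stand-in for a queue pair with a single completion queue."""
    recv_queue: deque = field(default_factory=deque)
    completions: deque = field(default_factory=deque)
    regions: dict = field(default_factory=dict)
    peer: Optional["ToyQueuePair"] = None

    def register(self, size: int) -> MemoryRegion:
        # Memory must be registered before it can be used in any operation.
        mr = MemoryRegion(bytearray(size), key=len(self.regions) + 1)
        self.regions[mr.key] = mr
        return mr

    def post_recv(self, mr: MemoryRegion) -> None:
        # A receive buffer has to be posted before the matching send arrives.
        self.recv_queue.append(mr)

    def post_send(self, payload: bytes) -> None:
        # Two-sided: consumes a receive posted by the peer; both sides complete.
        target = self.peer.recv_queue.popleft()
        target.buffer[:len(payload)] = payload
        self.completions.append("SEND")
        self.peer.completions.append("RECV")

    def rdma_write(self, payload: bytes, remote_key: int, offset: int = 0) -> None:
        # One-sided: writes into the peer's registered memory; only the
        # initiator gets a completion, the peer's CPU is not involved.
        remote = self.peer.regions[remote_key]
        remote.buffer[offset:offset + len(payload)] = payload
        self.completions.append("RDMA_WRITE")

    def poll(self):
        # Return the next completion, or None if nothing has completed.
        return self.completions.popleft() if self.completions else None


a, b = ToyQueuePair(), ToyQueuePair()
a.peer, b.peer = b, a

recv_mr = b.register(16)
b.post_recv(recv_mr)
a.post_send(b"ping")
a.rdma_write(b"pong", remote_key=recv_mr.key, offset=4)

events = [a.poll(), a.poll(), b.poll(), b.poll()]
print(bytes(recv_mr.buffer[:8]))  # b'pingpong'
print(events)                     # ['SEND', 'RDMA_WRITE', 'RECV', None]
```

The final `None` reflects the one-sided nature of the write: the receiving side is never notified, which is the main practical difference from Send and Receive.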

Practical learning is emphasized, with Visual Studio lessons and numerous code files for hands-on RDMA coding experience.

This approach not only reinforces theoretical knowledge but also enhances your coding skills.

The course thoroughly covers RDMA connection establishment and management, teaching you about the RDMA Connection Manager (CM) and guiding you through setting up and managing RDMA connections using Reliable Connection (RC) protocols.

A highlight is the RCpingpong exercise, which offers practical experience in using RDMA connections effectively, a critical skill for real-world applications.

Upon completion, you’ll have a solid understanding of RDMA programming: how to bypass the OS kernel for faster data transfers, manage memory for RDMA operations, and establish RDMA connections.

This expertise is invaluable for those aiming to excel in network programming or high-performance computing.

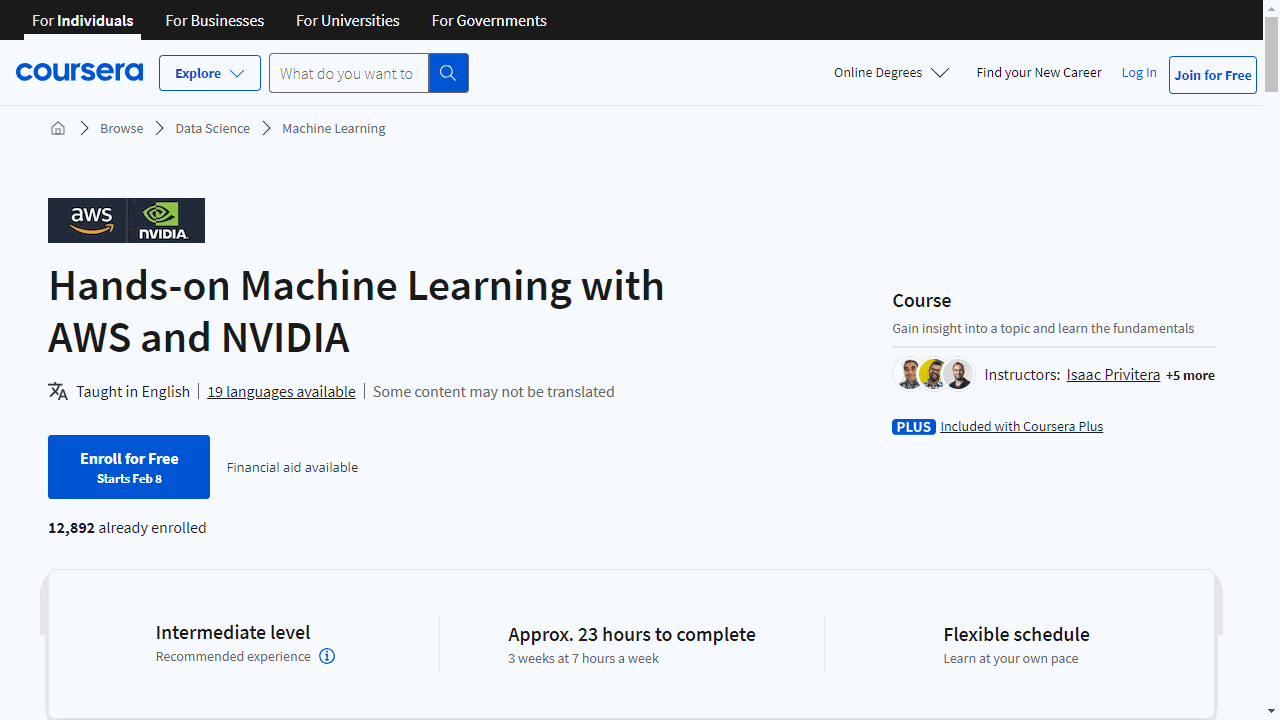

Hands-on Machine Learning with AWS and NVIDIA

Provider: Amazon Web Services

This course guides you through the essentials of Machine Learning (ML) using AWS and NVIDIA’s cutting-edge tools, focusing on practical, real-world applications.

You’ll start with an introduction to ML on AWS and NVIDIA, quickly moving to explore the capabilities of Amazon SageMaker.

This tool simplifies the process of building, training, and deploying ML models, and you’ll learn to leverage GPUs in the cloud for faster data processing.

Amazon SageMaker Studio’s features are also covered, offering you insights into efficient ML project management.

The curriculum is designed to walk you through the ML workflow on Amazon SageMaker, from data preparation and model building to training, tuning, and deployment.

This comprehensive approach ensures you gain hands-on experience with essential ML tasks.

A significant portion of the course is dedicated to NVIDIA GPUs and to why their parallel processing power matters for ML.

You’ll dive into optimizing GPU performance and explore the NGC catalog, which provides GPU-optimized ML resources.

This knowledge is crucial for enhancing the efficiency of your ML projects.

Real-world applications of ML are also a focus, with discussions on industry solutions and the AWS Marketplace.

This context helps you understand how to apply your skills to solve actual business challenges.

Practical exercises form the core of the course.

You’ll engage with RAPIDS for data science tasks, manage datasets, perform data ETL (Extract, Transform, and Load), and visualize data.

The course also covers model design, training, deployment, AutoML, and Hyperparameter Optimization (HPO), providing a well-rounded experience in model development.
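To give a feel for what hyperparameter optimization does, here is a minimal random-search sketch in plain Python. It is not the course's method or the SageMaker API: `validation_loss` is a made-up stand-in for training a model and measuring its validation loss, and the search ranges are arbitrary assumptions.

```python
import random


def validation_loss(learning_rate: float, num_layers: int) -> float:
    """Hypothetical objective; a real one would train and evaluate a model."""
    return (learning_rate - 0.01) ** 2 * 1e4 + (num_layers - 3) ** 2


def random_search(num_trials: int, seed: int = 0):
    """Try `num_trials` random configurations and keep the best one."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(num_trials):
        params = {
            # Sample the learning rate log-uniformly between 1e-4 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "num_layers": rng.randint(1, 6),
        }
        loss = validation_loss(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best_params, best_loss = random_search(num_trials=50)
print(best_params, best_loss)
```

Managed HPO services follow the same loop of proposing a configuration, evaluating it, and keeping the best, but typically run trials in parallel and may use smarter strategies than uniform random sampling.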

Specialized areas of ML, such as Computer Vision (CV) and Natural Language Processing (NLP), receive detailed attention.

You’ll learn about common CV tasks, delve into object detection, and deploy models using Amazon SageMaker and NVIDIA NGC.

The NLP section introduces you to BERT, teaching you how to fine-tune and deploy BERT models for complex tasks like question answering.

The course also provides supplemental readings and points you to additional NVIDIA Deep Learning Institute courses if you want to keep learning.