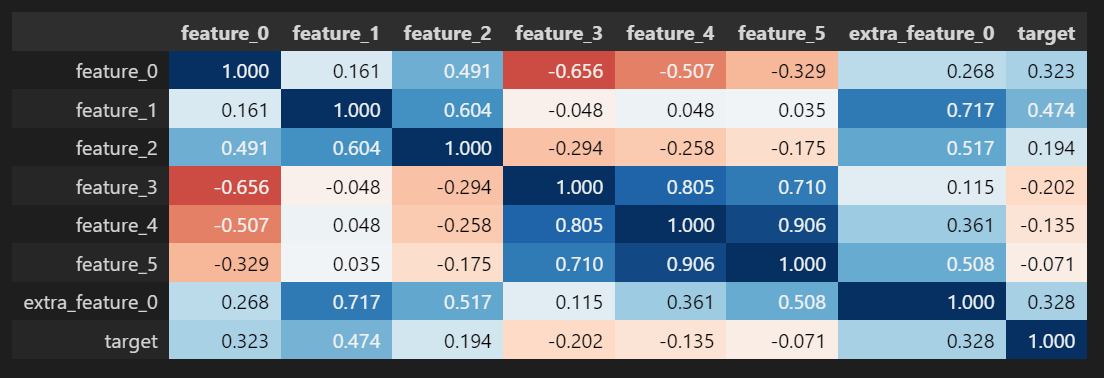

One thing you should be aware of when using marketing mix models is something called multicollinearity.

This happens when two or more input variables in your model are highly correlated, which makes it hard to separate their individual effects and therefore to interpret the results.

I like to play around with online ads, so I decided to give marketing mix models another try to learn more about them.

I don’t actually have anything to sell, but I thought it would be a fun exercise to create an Instagram ad campaign to try and get more followers.

The thing is, Instagram doesn’t have a specific “get followers” objective, so I had to pick something else.

The closest option was sending people to my page via Link Clicks, which is an unreliable proxy: just because someone clicks a link and visits your profile doesn’t mean they’re going to follow you. They might just be clicking around on Instagram for fun.

This situation is very similar to trying to understand which ad campaigns drive the most sales for a product when you don’t have (or can’t rely on) multi-touch attribution to track the customer journey.

So I created the campaign and promoted a few of my best carousel posts.

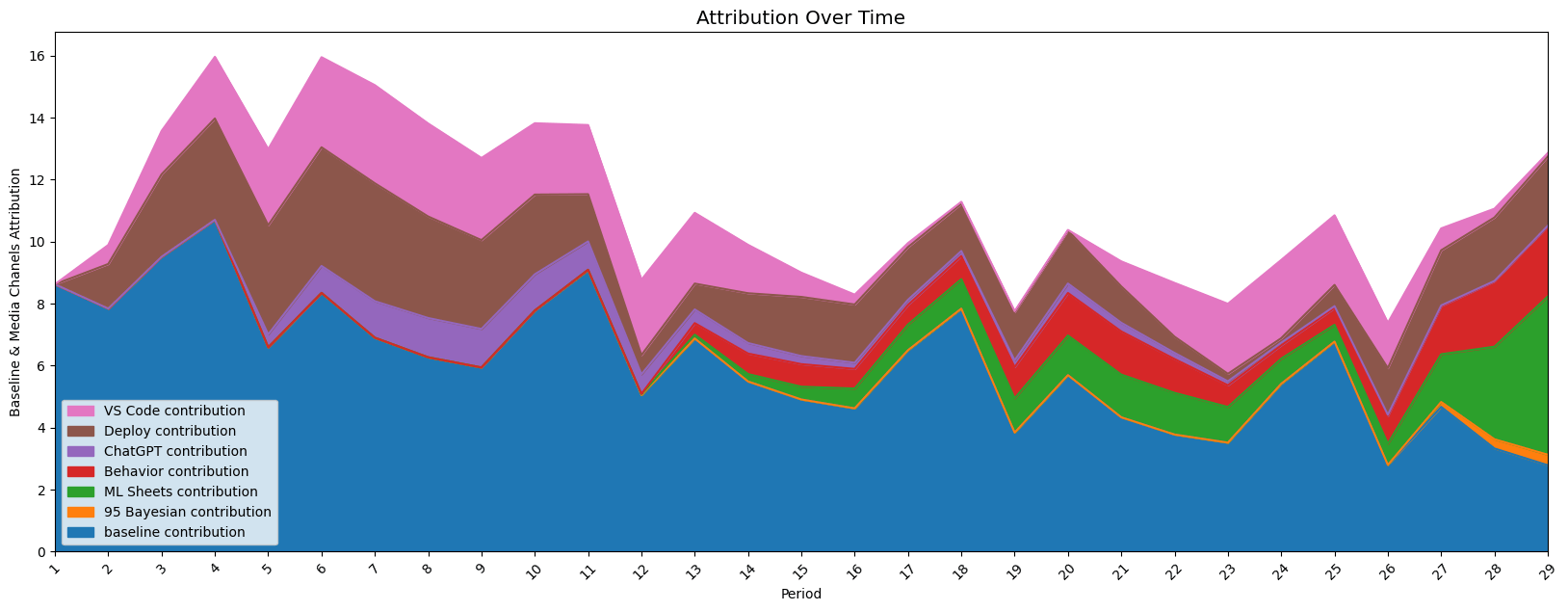

After a few days, I ran LightweightMMM to see which post contributed the most new followers.

But something felt off: the MMM was attributing most of the new followers to the ads, which seemed very unlikely given that my profile normally gets 2-8 new followers without any paid campaigns.

I ran some diagnostics, and sure enough, my features (the impressions of the different Instagram posts) were highly correlated with one another, which is bad news for linear models like the one in LightweightMMM.
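Two common diagnostics for this are a pairwise correlation matrix and the variance inflation factor (VIF), where VIF_j = 1 / (1 - R²_j) and R²_j comes from regressing feature j on all the other features. The sketch below computes both with plain NumPy. The impressions data is synthetic and hypothetical (three posts whose impressions are all driven by one shared daily budget), not the numbers from my campaign, and the helper names are made up for illustration.

```python
import numpy as np

def correlation_matrix(X: np.ndarray) -> np.ndarray:
    """Pairwise Pearson correlations between the columns of X (rows = days)."""
    return np.corrcoef(X, rowvar=False)

def variance_inflation_factors(X: np.ndarray) -> np.ndarray:
    """VIF_j = 1 / (1 - R^2_j), regressing column j on the remaining columns."""
    n, k = X.shape
    vifs = np.empty(k)
    for j in range(k):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        # Include an intercept so R^2 is measured around the mean of y.
        A = np.column_stack([np.ones(n), others])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
        vifs[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return vifs

# Hypothetical data: 30 days, 3 promoted posts sharing one budget driver.
rng = np.random.default_rng(0)
days = 30
budget = rng.uniform(50, 150, size=days)
impressions = np.column_stack([
    budget * 10 + rng.normal(0, 20, days),
    budget * 8 + rng.normal(0, 20, days),
    budget * 12 + rng.normal(0, 20, days),
])

print(np.round(correlation_matrix(impressions), 2))
print(np.round(variance_inflation_factors(impressions), 1))
```

A frequently quoted rule of thumb treats VIFs above about 5 to 10 as a warning sign; with features this tightly linked, every column lands far above that range.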

Multicollinearity isn’t a big deal for overall predictive accuracy, but it makes the individual coefficients unstable, and we need reliable coefficients if we want to understand the effect of each variable we’re testing.
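To see why accuracy survives while coefficients don’t, here is a small simulation with plain ordinary least squares (a stand-in, not LightweightMMM’s Bayesian model). Two features are nearly identical copies of a shared signal, each with a true effect of 1.0. Refitting on bootstrap resamples shows the individual coefficients swinging widely, while their sum and the fitted values barely move. All data is synthetic.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 60
shared = rng.normal(size=n)
x1 = shared + rng.normal(scale=0.05, size=n)  # two nearly identical features
x2 = shared + rng.normal(scale=0.05, size=n)
y = 1.0 * x1 + 1.0 * x2 + rng.normal(scale=0.5, size=n)  # true effect: 1.0 each
X = np.column_stack([np.ones(n), x1, x2])  # intercept + two features

coefs, preds = [], []
for _ in range(200):
    idx = rng.integers(0, n, size=n)  # bootstrap resample of rows
    beta, *_ = np.linalg.lstsq(X[idx], y[idx], rcond=None)
    coefs.append(beta[1:])   # keep only the two feature coefficients
    preds.append(X @ beta)   # predictions on the full original data

coefs = np.array(coefs)
preds = np.array(preds)
print("std of x1, x2 coefficients:", np.round(coefs.std(axis=0), 2))
print("std of summed effect x1+x2:", np.round(coefs.sum(axis=1).std(), 2))
print("mean std of fitted values: ", np.round(preds.std(axis=0).mean(), 2))
```

The data pins down the combined effect well, but it can’t tell how to split that effect between two features that always move together, so the split is close to arbitrary.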

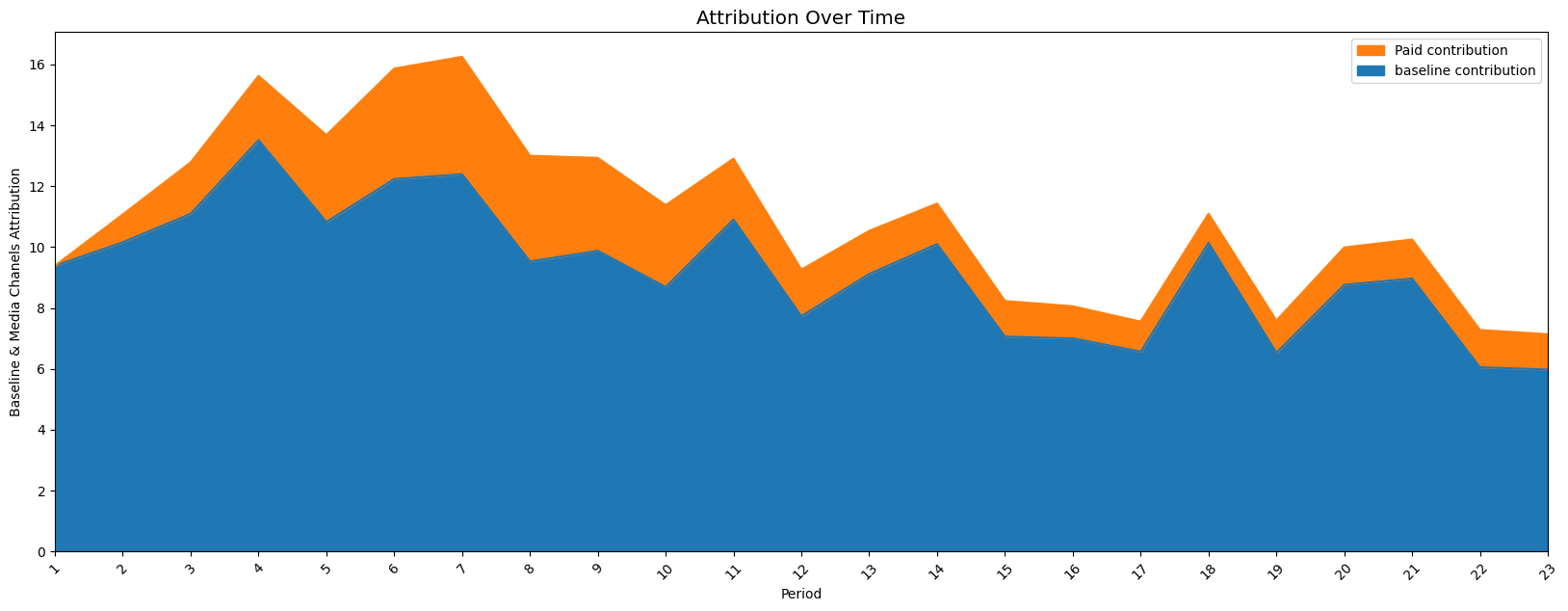

So I used a well-known solution: aggregating all my features into a single “Paid” feature.

That fixed the problem!

The model correctly showed that Paid was responsible for only a small number of new followers.
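Aggregating the per-post features into a single Paid feature amounts to summing the impression columns for each day. A minimal sketch, assuming impressions are stored as a days × posts array; the helper name and the numbers are hypothetical, and the output is kept 2-D (days × 1) in case the model expects a media matrix with one column per channel.

```python
import numpy as np

def aggregate_paid(impressions: np.ndarray) -> np.ndarray:
    """Collapse per-post impression columns (days x posts) into one 'Paid' column (days x 1)."""
    return impressions.sum(axis=1, keepdims=True)

# Hypothetical impressions: 3 days x 3 promoted posts.
post_impressions = np.array([
    [120, 80, 200],
    [150, 95, 240],
    [90, 60, 170],
])
paid = aggregate_paid(post_impressions)
print(paid.ravel())  # [400 485 320]
```

The trade-off is that the model can no longer say which individual post worked best, only how much the paid activity contributed as a whole.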

If I had ignored my intuition and blindly followed the model, I would have believed that most of the new followers came from the ads and wasted money on an ineffective campaign.

But by being critical of the results and checking them against my own experience, I was able to avoid that mistake.

That’s a really important lesson to remember when using marketing mix models (MMMs) or any other kind of statistical model: always check if the results make sense and correspond to your (or the domain expert’s) experience and intuition.
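One way to make the “does this make sense?” check concrete is a back-of-the-envelope bound: if organic growth continued at its usual rate during the campaign, paid activity can explain at most the followers left over after that baseline. The sketch below is a hypothetical helper, not part of LightweightMMM, and the numbers in the example are invented. Using the low end of the usual organic range keeps the check lenient, so it only fires on clearly implausible attributions.

```python
def max_plausible_paid_share(total_new: float, organic_per_period: float, n_periods: int) -> float:
    """Upper bound on the share of new followers paid activity could explain,
    assuming organic growth continued at `organic_per_period` during the campaign."""
    if total_new <= 0:
        raise ValueError("total_new must be positive")
    expected_organic = organic_per_period * n_periods
    return max(0.0, 1.0 - expected_organic / total_new)


def flag_implausible(model_paid_share: float, total_new: float,
                     organic_per_period: float, n_periods: int) -> bool:
    """True when the model credits paid with more than the organic baseline leaves room for."""
    return model_paid_share > max_plausible_paid_share(total_new, organic_per_period, n_periods)


# Hypothetical: 50 new followers over 10 periods, organic low end of 4 per period.
print(round(max_plausible_paid_share(50, 4, 10), 2))  # 0.2
print(flag_implausible(0.8, 50, 4, 10))               # True
```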

One of the biggest complaints I hear from experienced marketers about MMMs is that their results sometimes contradict the marketers’ own experience.

All models are inherently flawed and have their limitations. They’re just tools, and they can only give us a certain level of insight into what’s going on.

That’s why it’s so important to approach them with a critical eye and remember the Sagan Standard: “extraordinary claims require extraordinary evidence.”

In other words, be skeptical of any results that seem too good (or too bad) to be true, and make sure you have a solid basis for any conclusions you draw.