There’s ongoing anxiety in our field, born of the perceived need to “catch up” with the shiny new state-of-the-art technology.

And yes, it’s good to always be learning new methods to solve problems with machine learning.

But I believe one thing is more important than knowing everything: knowing how to find possible solutions and iterate fast.

This is what I mean by “iterate fast”: having the ability to quickly test different solutions and find the one that works best for your problem.

It’s not about endless research to find the perfect method from the start. It’s about being able to try different things, fail fast, and learn from your mistakes.

Here’s how I do it.

Build a “Quick and Dirty” Model

I learned this one from Andrew Ng and practiced it on Kaggle.

(He launched a new version of his awesome Machine Learning course.)

When you start a machine learning project, it’s important to have a working prototype as soon as possible.

This means setting up the problem in the simplest way possible and using the most basic method I can think of to solve it.

There is something about having the problem set up that makes it easier to keep working on it. It gets you unstuck!

For example, if I’m trying to build a classification model, my quick and dirty model might be a random forest with 1,000 trees, almost no feature engineering, and no tuning.

This is likely not the final model I’ll be using, but it’s a good starting point.

From there, I can try different methods to improve the performance of my solution.
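A minimal sketch of such a quick and dirty baseline in Python, assuming scikit-learn is available. The synthetic data from `make_classification` and the helper name `quick_and_dirty_baseline` are illustrative stand-ins, not part of any particular project:

```python
# Quick and dirty baseline: an untuned 1,000-tree random forest with no feature work.
# Assumes scikit-learn is installed; the synthetic data stands in for a real dataset.
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score


def quick_and_dirty_baseline(X, y, seed=0):
    """Return the mean 5-fold cross-validated accuracy of an untuned random forest."""
    model = RandomForestClassifier(n_estimators=1000, n_jobs=-1, random_state=seed)
    scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    return scores.mean()


if __name__ == "__main__":
    # Hypothetical stand-in data; replace with your own features and labels.
    X, y = make_classification(n_samples=500, n_features=20, random_state=0)
    print(f"Baseline accuracy: {quick_and_dirty_baseline(X, y):.3f}")
```

The cross-validated score becomes the number that every later idea has to beat.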

Change The Model And Tune The Hyperparameters

Now that you have something working, it’s much easier to move forward and make small improvements.

I always like to find the best model (usually boosted trees or neural networks), tune it, and then add new features.

This way I know my hyperparameters are already close to their optimum, so any improvement really comes from the features and not from something I could have gotten simply by tuning more.

Search for Similar Solutions

You don’t have to build everything from scratch.

Knowing how to find out how other people solved the same or similar problems is an extremely valuable skill.

When I first teamed up with top Kagglers, I was surprised to see them start from whatever solutions they could find on the forums or on GitHub.

Places where you can look for solutions:

- GitHub repositories

- Kaggle discussion forums and Kernels

- Blogs

- Google Scholar and arXiv

It doesn’t need to be a modeling solution; it can also be how to build a dataset.

For example, you can look at how a competition sponsor built the dataset for a problem, think about the data sources you have, and reverse engineer the process.

There is also a useful side effect: studying these solutions increases your machine learning knowledge, which makes it easier to solve similar problems in the future and to know where to look for new solutions.

Rank and Try Ideas

Instead of deciding whether to keep or throw away each idea, rank your ideas.

We never really know in advance which ideas will work best, so I avoid throwing anything away. That doesn’t mean I try ideas at random, though.

I usually write down every idea I have, even if it’s crazy. Sometimes I even force myself to come up with 10 ideas every day to try.

Then I rank the ideas by how likely I think each one is to work.

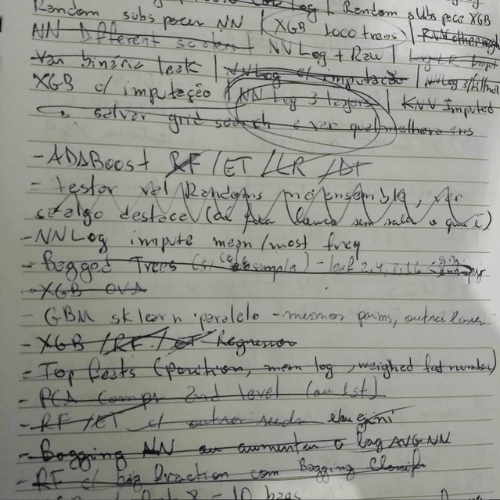

This was one of my lists for the Telstra competition:

I like to re-rank my ideas every day, because everything you try gives you more information about what is likely to work for the specific problem you are modeling.

It’s also important to decide how much time you want to spend on each idea. If something doesn’t work as well as you hoped, move on quickly.
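The ranking step can be made explicit with a few lines of Python. This is a hypothetical sketch: the likelihoods are your own guesses, and scoring each idea by likelihood per hour of effort is an assumed heuristic that combines ranking by likelihood with a time budget per idea:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    likelihood: float  # subjective estimate (0 to 1) that the idea improves the score
    hours: float       # time budget (> 0) before you drop it and move on


def priority(idea: Idea) -> float:
    """Assumed heuristic: likelihood of working per hour of effort."""
    return idea.likelihood / idea.hours


def rank_ideas(ideas: list[Idea]) -> list[Idea]:
    """Return the ideas sorted from most to least promising."""
    return sorted(ideas, key=priority, reverse=True)


def rerank_after_experiment(ideas: list[Idea], name: str, new_likelihood: float) -> list[Idea]:
    """Revise one idea's estimate with what you just learned, then rank again."""
    for idea in ideas:
        if idea.name == name:
            idea.likelihood = new_likelihood
    return rank_ideas(ideas)


if __name__ == "__main__":
    # Hypothetical idea list; the numbers are guesses, not measurements.
    ideas = [
        Idea("target encoding of categorical features", likelihood=0.6, hours=2),
        Idea("add a neural network to the ensemble", likelihood=0.5, hours=10),
        Idea("log-transform skewed count features", likelihood=0.3, hours=0.5),
    ]
    for idea in rank_ideas(ideas):
        print(f"{priority(idea):.2f}  {idea.name}")
```

Re-running `rerank_after_experiment` after each result mirrors the daily re-ranking: cheap ideas float to the top, and ideas that disappoint sink without being thrown away.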

Prefer Ideas You Can Implement Fast

In my experience, and as I heard Owen Zhang say in a lecture, simple ideas tend to give you the biggest improvements.

One recent example was subtracting the predictions of the logistic regression in my ensemble to get 3rd place in the Criteo competition.

Simple, easy, and crucial to the result.
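Subtracting a model’s predictions can be expressed as a linear blend in which that model gets a negative weight. A minimal sketch, assuming probability outputs from three models; the model names, predictions, and weights are hypothetical, and in practice you would typically choose the weights on out-of-fold predictions:

```python
def blend(predictions, weights, eps=1e-15):
    """Linear blend of per-model probability predictions.

    predictions: list of equal-length lists, one per model.
    weights: one weight per model; a negative weight subtracts that model.
    Results are clipped to [eps, 1 - eps] so they remain valid for log loss.
    """
    if len(predictions) != len(weights):
        raise ValueError("need exactly one weight per model")
    blended = []
    for row in zip(*predictions):  # row holds each model's prediction for one sample
        value = sum(w * p for w, p in zip(weights, row))
        blended.append(min(max(value, eps), 1 - eps))
    return blended


if __name__ == "__main__":
    # Hypothetical predictions for two samples from three models.
    gbm = [0.80, 0.10]
    nn = [0.60, 0.30]
    logreg = [0.50, 0.20]
    # Hypothetical weights; they sum to 1 so the blend stays roughly on the
    # probability scale, and clipping handles any values that fall outside it.
    print(blend([gbm, nn, logreg], [0.7, 0.5, -0.2]))
```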

This Is The “Secret”

Many people ask me what the secret of top data scientists and Kagglers is.

If there are secrets, they are the tips above. Data science is just a series of small improvements.

You need something that works as a starting point. Then you rank your ideas by how much they are likely to improve the results and how easy they are to implement.

And you want to try lots of ideas.

If you do this, you will naturally get better and better at data science.

Of course, these are high-level tips, but I have seen them work again and again in competitions and projects.

The biggest problem is running out of ideas or not knowing what to try.

And remember to keep a journal.
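A journal can be as simple as a text file. A minimal sketch of an experiment log in Python, assuming one JSON record per line; the function names, file path, and fields are hypothetical choices:

```python
import json
import time
from pathlib import Path


def log_experiment(path, idea, params, score, notes=""):
    """Append one experiment record as a JSON line and return it."""
    record = {
        "timestamp": time.strftime("%Y-%m-%d %H:%M:%S"),
        "idea": idea,
        "params": params,
        "score": score,
        "notes": notes,
    }
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def read_journal(path):
    """Load all records, e.g. to check which ideas you have already tried."""
    with Path(path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    journal = "experiments.jsonl"  # hypothetical file name
    log_experiment(journal, "baseline random forest", {"n_estimators": 1000}, 0.81)
    for entry in read_journal(journal):
        print(entry["timestamp"], entry["idea"], entry["score"])
```

Appending one line per experiment keeps the log cheap to write, so it actually gets written while you iterate fast.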

Best of luck in your data science journey!