Apache Spark is a powerful open-source framework designed for distributed data processing.

It excels at handling massive datasets and enables fast, efficient analysis, which makes it well suited to big data applications.

Learning Spark equips you with the skills to process and analyze vast amounts of data, opening doors to rewarding careers in data science, data engineering, and machine learning.

With so many options available, finding a comprehensive and engaging Spark course on Coursera can be daunting.

You’re looking for a program that’s both in-depth and practical, taught by experts, and tailored to your learning style and goals.

For the best Apache Spark course overall on Coursera, we recommend the “NoSQL, Big Data, and Spark Foundations Specialization” by IBM.

This specialization provides a well-rounded introduction to NoSQL databases, big data concepts, and Spark.

It covers everything from basic Spark operations to advanced techniques like Spark SQL, making it ideal for both beginners and those seeking to expand their knowledge.

While this specialization is our top pick, there are other great options available on Coursera.

Keep reading to discover more recommendations, tailored to specific areas of Spark, learning levels, and even career goals.

NoSQL, Big Data, and Spark Foundations Specialization

This specialization, offered by IBM, is one of the most popular on Coursera.

The journey begins with “Introduction to NoSQL Databases,” a course that lays the foundation for understanding NoSQL technology.

You’ll explore the evolution of databases and learn about the distinct categories of NoSQL databases, each with its own strengths.

The course emphasizes practical skills, such as performing CRUD operations in MongoDB and managing data in Cassandra, ensuring that you can apply your learning to real-world scenarios.

Moving on to “Introduction to Big Data with Spark and Hadoop,” you’ll delve into the characteristics of big data and the tools used to process it.

This course demystifies the Hadoop ecosystem, introducing you to components like HDFS and MapReduce, and explains how Hive can simplify working with large datasets.

Apache Spark is also covered, giving you insight into its architecture and how it can be used to handle big data more efficiently.

The inclusion of hands-on labs using contemporary tools like Docker and Jupyter Notebooks helps solidify your understanding.

The specialization culminates with “Machine Learning with Apache Spark,” where you’ll bridge the gap between big data processing and machine learning.

This course provides a clear explanation of machine learning concepts and demonstrates how Apache Spark can be leveraged to build predictive models.

Through practical exercises, you’ll gain experience with SparkML, learning to create and evaluate machine learning models.

Apache Spark SQL for Data Analysts

This course is offered by Databricks, which was founded by the creators of Apache Spark.

Apache Spark is at the heart of this course, and you’ll be introduced to Spark SQL, a powerful tool for data processing.

The course doesn’t just skim the surface; it provides a thorough exploration of how to leverage Spark to its full potential.

You’ll gain practical experience by signing up for the Databricks Community Edition, where you’ll learn to navigate workspaces and notebooks – essential skills for any data analyst.

Databricks is used at many top companies, so this is a valuable opportunity to familiarize yourself with a tool that’s in high demand.

The course emphasizes hands-on learning, with exercises like basic queries, data visualization, and an exploratory data analysis lab to solidify your understanding.

As you progress, the course delves into query optimization and cluster management, teaching you how to enhance performance and efficiency.

You’ll discover the importance of caching and selective data loading, ensuring your data processes are as streamlined as possible.

Nested data often poses a challenge, but this course equips you with the skills to manage and manipulate it with confidence.

You’ll also tackle complex data structures using higher-order functions and learn about data partitioning, which are crucial for advanced data analysis.

Delta Lake is an open-source storage layer that adds ACID transactions to data lakes, and this course dedicates an entire module to it.

You’ll compare data lakes and warehouses, understand the Lakehouse paradigm, and learn how to manage and optimize records within Delta Lake, complete with practical labs to apply what you’ve learned.

To wrap up, the course presents you with SQL coding challenges, allowing you to put your new skills to the test.

These challenges are designed to ensure you’re not just learning concepts but also applying them in real-world scenarios.

Spark, Hadoop, and Snowflake for Data Engineering

This course stands out because it doesn’t just focus on Spark; it provides a well-rounded education in the entire large-scale data engineering ecosystem.

Your journey begins with an introduction to big data platforms by Kennedy Behrman and Noah Gift, your expert instructors.

Their experience in the field ensures that you’re learning practical skills that apply to real-world scenarios.

The course starts with the basics of Hadoop, setting a strong foundation for understanding distributed data processing.

Then, it swiftly moves to Apache Spark, where you’ll delve into Resilient Distributed Datasets (RDDs) and get hands-on experience with PySpark through detailed demonstrations.

PySpark is Spark’s Python API, and it’s a powerful tool for data engineering.

Snowflake, a cloud-based data warehousing solution, is also a significant part of the curriculum.

You’ll learn to navigate its interface, manage data, and understand the architecture that sets Snowflake apart from traditional warehousing solutions.

Databricks, a platform optimized for Spark, is another key focus.

The course guides you through its integration with Microsoft Azure, showing how to use workspaces and implement machine learning workflows with MLflow on Databricks.

This section is particularly valuable as it ties together data engineering with the growing field of machine learning operations (MLOps).

Beyond the tools, the course introduces you to the principles of MLOps, DevOps, and DataOps, emphasizing the importance of efficient workflows and continuous integration in data projects.

This knowledge is crucial for understanding how data engineering fits into the broader context of software development and operations.

The course also touches on serverless computing, cloud pipelines, and the differences between data warehouses and feature stores, providing a clear picture of the current big data landscape.

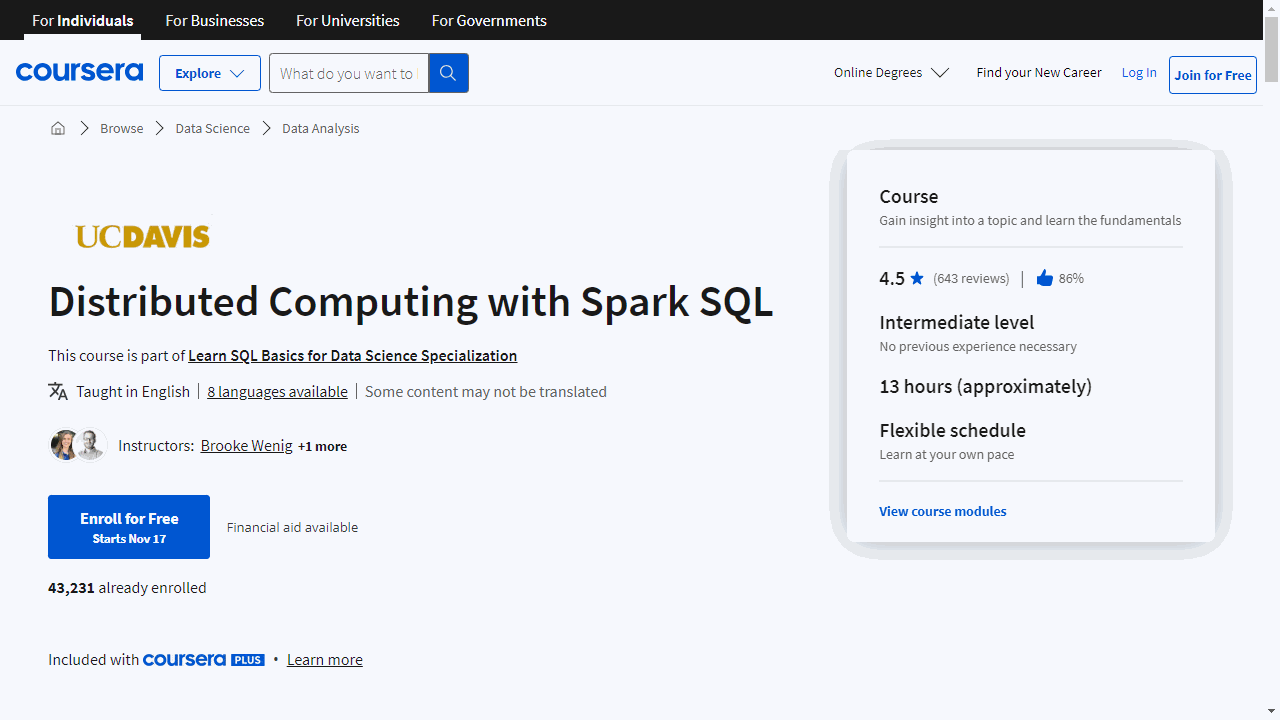

Distributed Computing with Spark SQL

This course is a comprehensive journey into the world of big data through Spark, offering a blend of theory and hands-on practice.

The course begins by laying the groundwork, explaining the significance of distributed computing and how Spark DataFrames can efficiently manage large datasets.

You’ll get acquainted with the Databricks Environment, a powerful platform that simplifies complex data tasks.

SQL integration within notebooks is a highlight, allowing you to execute queries seamlessly as you learn.

Importing data, a fundamental skill, is covered thoroughly, ensuring you’re well-prepared for the practical challenges ahead.

UC Davis’s involvement adds an extra layer of credibility, with curated readings and resources to support your learning.

The assignments are thoughtfully designed to reinforce your understanding, starting with crafting queries in Spark SQL.

As you progress, you’ll delve into Spark’s ecosystem, learning terminology and exploring features like caching and shuffle partitions.

The Spark UI and Adaptive Query Execution (AQE) are introduced, giving you insights into performance optimization.

The second assignment takes you deeper into Spark’s role as a data connector.

You’ll navigate various file formats, understand JSON, and master data schemas.

Writing data, creating tables, and managing views are also covered, equipping you with essential data handling skills.

The course then guides you through the architecture of data pipelines, contrasting data lakes with data warehouses and introducing the Lakehouse concept.

Through practical demos with Delta Lake, you’ll witness cutting-edge features firsthand.

In the final stages, you’ll consolidate your knowledge, reviewing your progress with a final notebook and self-reflection.

The last assignment challenges you to apply everything you’ve learned by constructing a Lakehouse, a testament to the course’s practical focus.

If you’re serious about mastering distributed computing, this course is a solid choice that will help you develop the skills you need in a structured, engaging way.

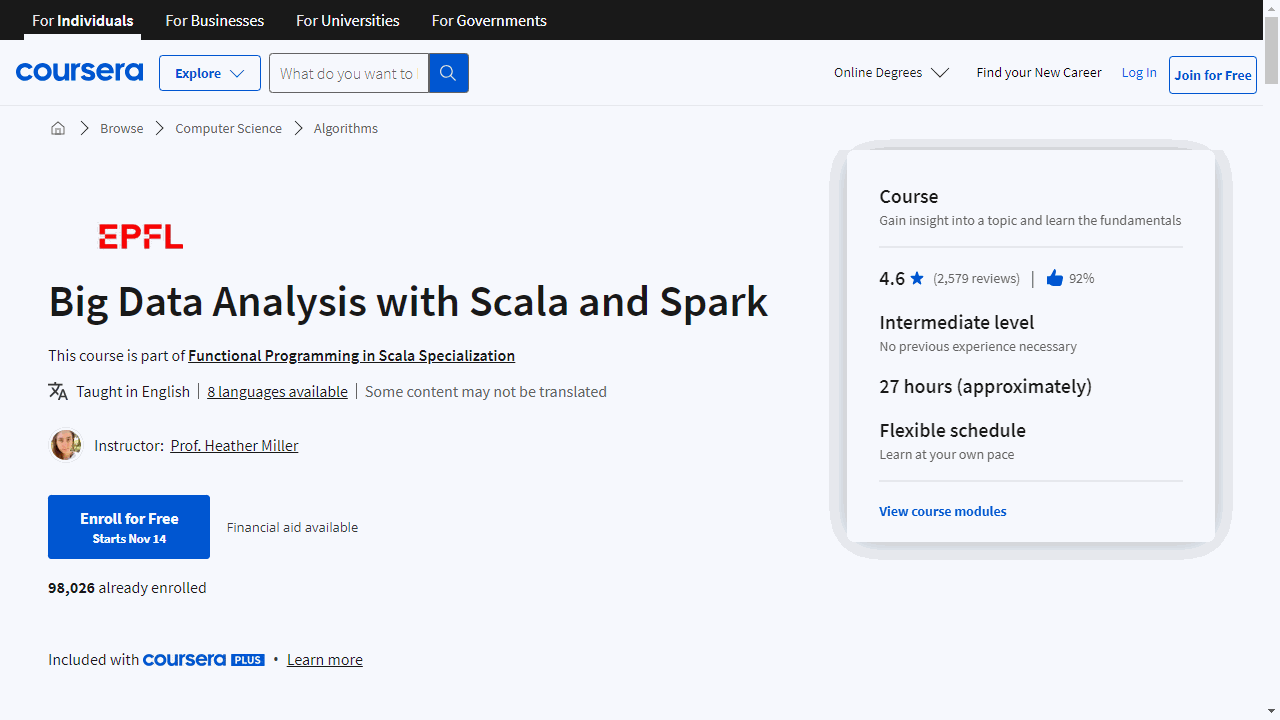

Big Data Analysis with Scala and Spark

This course approaches Spark from a Scala perspective.

Scala is a language that blends functional and object-oriented programming and runs on the Java Virtual Machine; it is also the language Spark itself is primarily written in.

Starting with an introduction that sets the stage for what’s to come, you’ll quickly move into understanding the shift from data-parallel to distributed data-parallel processing.

This is crucial for working with big data, as it allows you to spread tasks across multiple machines.

Latency can be a big hurdle in data processing, but this course will show you how to tackle it head-on, ensuring that your data analysis is as efficient as possible.

At the core of Spark are RDDs, and you’ll gain a thorough understanding of these.

You’ll learn how to perform transformations and actions, which are fundamental operations in Spark.

Unlike working with standard Scala collections, Spark’s evaluation requires a different approach, and you’ll discover why and how this impacts your data analysis.
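To make the idea of lazy evaluation more concrete, here is a minimal sketch in plain Python. It is only an analogy, not Spark code: Python's built-in `map` and `filter` stand in for transformations, and `list(...)` stands in for an action. The `double` function and the `calls` list are hypothetical helpers added purely to show when work actually happens.

```python
# Plain-Python analogy only: lazy iterators stand in for Spark's lazy transformations.
calls = []  # records every time the mapping function actually runs


def double(x):
    calls.append(x)
    return x * 2


numbers = [1, 2, 3, 4]

# "Transformations": building the pipeline does no work yet.
doubled = map(double, numbers)
large = filter(lambda x: x > 4, doubled)
calls_before_action = len(calls)

# "Action": materializing the result forces the whole pipeline to run.
result = list(large)
calls_after_action = len(calls)

print(calls_before_action, calls_after_action, result)
```

Nothing is computed until the result is requested, which is roughly why Spark can plan a whole chain of transformations before running any of them.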

Understanding cluster topology is also essential, and you’ll learn why it’s important for optimizing your data processing tasks.

The course will guide you through reduction operations and pair RDDs, giving you the ability to manipulate complex datasets with ease.
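As a rough illustration of what a key-based reduction on pair data does, the sketch below models it in plain Python. It is not the Spark API: `reduce_by_key` is a hypothetical helper, and the nested lists are an assumed stand-in for data split across partitions. Values are first combined per key inside each partition, then the partial results are merged.

```python
def reduce_by_key(partitions, func):
    """Combine values per key inside each partition, then merge the partial results."""
    partials = []
    for partition in partitions:
        local = {}
        for key, value in partition:
            local[key] = func(local[key], value) if key in local else value
        partials.append(local)

    merged = {}
    for local in partials:
        for key, value in local.items():
            merged[key] = func(merged[key], value) if key in merged else value
    return merged


# Two lists standing in for two partitions of (word, count) pairs.
partitions = [
    [("spark", 1), ("scala", 1), ("spark", 1)],
    [("scala", 1), ("spark", 1)],
]
word_counts = reduce_by_key(partitions, lambda a, b: a + b)
print(word_counts)
```

Combining locally before merging means less data has to move between machines, which is the main reason this style of reduction tends to be preferred over grouping all values first.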

Shuffling data and partitioning are topics that often confuse beginners, but the course breaks them down clearly.

You’ll learn what shuffling is, why it’s necessary, and how to use partitioners to optimize your data processing.

The course also demystifies the difference between wide and narrow dependencies, helping you write more efficient Spark code.
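Shuffling and partitioners are easier to picture with a small example. The sketch below is plain Python rather than Spark code, and every name in it (`partition_for`, `shuffle`, the sample records) is hypothetical. It uses `zlib.crc32` as a stand-in for a deterministic hash; Spark's own hash partitioner follows the same general idea of taking a key's hash modulo the number of partitions.

```python
import zlib


def partition_for(key, num_partitions):
    """Deterministic hash partitioning: the same key always maps to the same partition."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def shuffle(records, num_partitions):
    """Redistribute (key, value) records so each key lives in exactly one partition."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions


records = [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5)]
shuffled = shuffle(records, num_partitions=3)

# After the shuffle, every key appears in one partition only.
key_locations = {}
for index, partition in enumerate(shuffled):
    for key, _ in partition:
        key_locations.setdefault(key, set()).add(index)
print(key_locations)
```

An operation that needs all values for a key in one place (a wide dependency) requires this kind of redistribution, while an operation that works record by record (a narrow dependency) can run on each partition where it already sits.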

You’ll also explore the handling of both structured and unstructured data, preparing you for any data scenario.

Spark SQL and DataFrames are covered extensively, with two dedicated sessions for DataFrames alone.

This ensures you’ll be comfortable with these powerful tools, enabling you to query and analyze data with the precision of a seasoned data analyst.

Finally, you’ll be introduced to Datasets, Spark’s typed API that arrived after RDDs and DataFrames, rounding out your skill set.

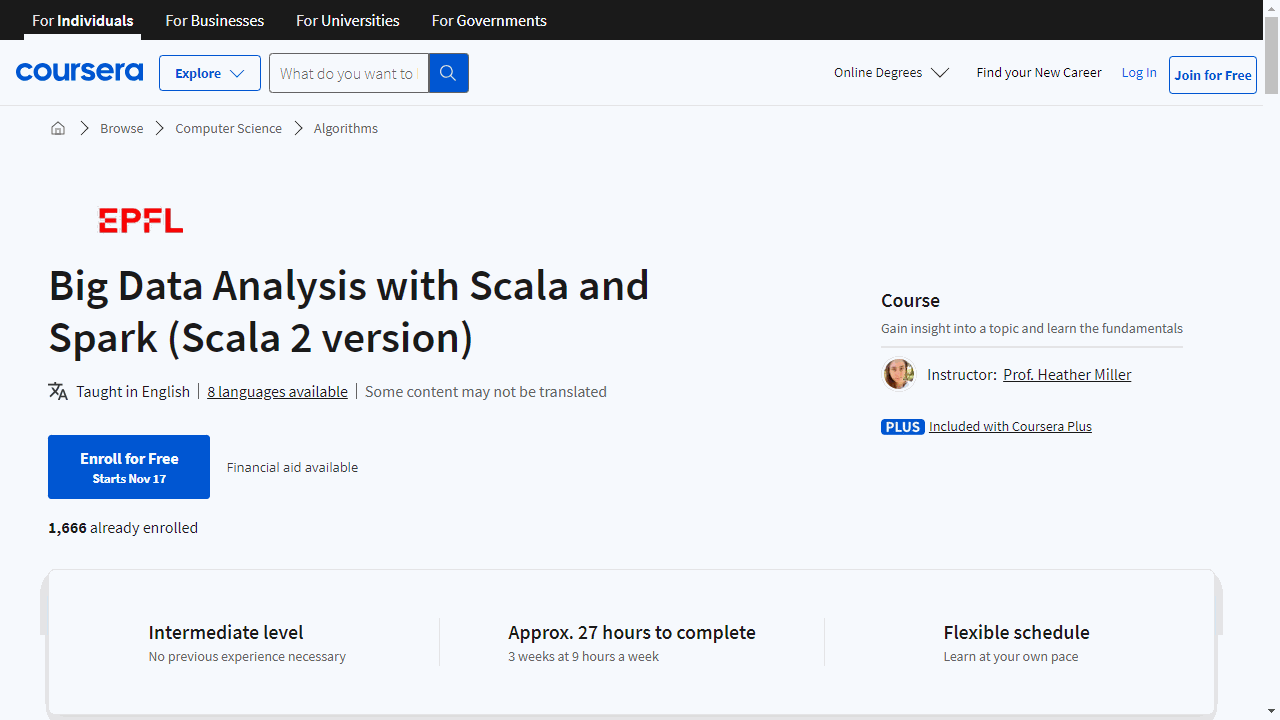

Big Data Analysis with Scala and Spark (Scala 2 version)

The course begins with an introduction that helps you understand the essentials of distributed data-parallel processing, which is crucial for handling large datasets across multiple computers.

One of the key concepts you’ll tackle is latency, learning its significance in data processing and how to minimize it effectively.

This isn’t just theoretical; you’ll see how these ideas play out in real-world scenarios.

RDDs are central to Spark, and you’ll become well-versed in managing these distributed collections.

You’ll discover how to perform transformations and actions on RDDs, which differ from standard Scala collections.

This distinction is important, as Spark has its unique evaluation strategies.

The course also emphasizes the importance of cluster topology, teaching you how the configuration of a computing cluster can impact your data processing tasks.

You’ll get to apply what you’ve learned through practical examples, including an exploration of Wikipedia data, which adds a layer of real-world relevance to your studies.

Tool setup and assignment submission are streamlined, thanks to a clear SBT tutorial included in the syllabus.

This ensures that you can focus more on learning and less on configuration.

As you progress, you’ll delve into more advanced topics such as reduction operations, pair RDDs, and the intricacies of shuffling and partitioning.

These concepts are critical for optimizing Spark’s performance and are explained in a way that’s accessible and applicable.

The course also covers the differences between wide and narrow dependencies, helping you understand their impact on Spark’s efficiency.

You’ll explore both structured and unstructured data, preparing you to handle various data types with ease.

With a focus on Spark SQL and DataFrames, the course introduces powerful tools for data querying and analysis.

You’ll appreciate the convenience these tools offer, making complex data tasks more manageable.

Datasets are also covered, providing you with a hybrid approach that combines the benefits of DataFrames with the type-safety of RDDs.

Finally, the course touches on time management within Spark, teaching you how to optimize your operations to save time and resources.

Frequently Asked Questions

What Is the Relationship Between Apache Spark and Apache Kafka?

Apache Spark and Apache Kafka are both open-source projects from the Apache Software Foundation, but they serve different purposes and are often used together in big data pipelines.

Apache Kafka is a distributed streaming platform that is designed to handle real-time data feeds.

It provides a robust messaging system that allows applications to publish and subscribe to streams of data, making it an excellent choice for building real-time data pipelines and streaming applications.

On the other hand, Apache Spark is a fast and general-purpose cluster computing system for large-scale data processing.

It provides a rich set of libraries for batch processing, real-time stream processing, machine learning, and graph processing.

They can be integrated to build powerful data processing pipelines.

Kafka can act as a reliable source of streaming data, ingesting data from various sources, while Spark can consume and process that data in real time using Spark Streaming or Structured Streaming, or in batch mode using its core engine.

What Is Apache Spark Structured Streaming?

Apache Spark Structured Streaming is a scalable and fault-tolerant stream processing engine that is part of the Apache Spark ecosystem.

It lets you express a computation on a live data stream the same way you would express a batch computation on static data, and the engine then runs it incrementally as new data arrives.
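The idea that a streaming job can look like a batch job is easier to see in a toy example. The following is a plain-Python sketch, not the Structured Streaming API: `count_words`, the sample lines, and the way micro-batches are sliced are all assumptions made for illustration. The same function is applied once to the full dataset and then to small batches that are folded into running state.

```python
from collections import Counter


def count_words(lines):
    """The 'query': identical whether the input is a full batch or one micro-batch."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


all_lines = ["spark streams data", "kafka streams data", "spark scales"]

# Batch: process everything at once.
batch_result = count_words(all_lines)

# Streaming: process arriving micro-batches and fold each into running state.
running = Counter()
for micro_batch in (all_lines[:1], all_lines[1:2], all_lines[2:]):
    running.update(count_words(micro_batch))

print(running == batch_result)
```

A real engine also has to handle late data, checkpointing, and recovery after failures, none of which this sketch attempts.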

Also check our post on the best Apache Spark courses on Udemy.