Data engineering is a crucial field that involves building and maintaining the systems that power data-driven applications and insights.

Data engineers are responsible for designing, developing, and managing data pipelines, ensuring that data is collected, processed, and stored efficiently and reliably.

Learning data engineering can lead to rewarding careers in various industries, including technology, finance, healthcare, and more.

With so many options to choose from, finding a comprehensive and well-structured data engineering course on Coursera can be challenging.

You might be looking for a program that’s beginner-friendly yet covers a broad range of topics, taught by industry experts and featuring hands-on projects.

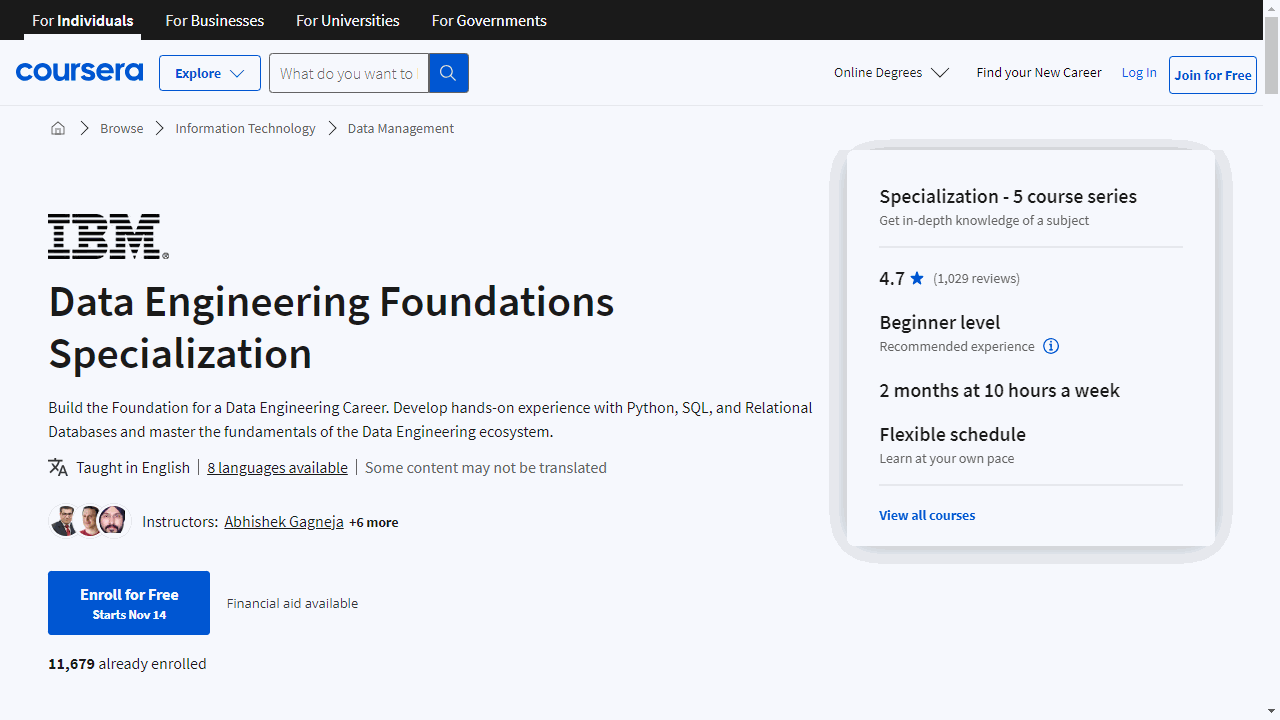

For the best overall data engineering course on Coursera, we recommend the Data Engineering Foundations Specialization.

This program stands out for its well-designed curriculum, comprehensive coverage of core concepts, and practical projects.

It’s ideal for individuals with varying levels of experience, guiding learners from basic concepts to more advanced topics like data pipelines and database management.

This is just one of many excellent data engineering courses available on Coursera.

Keep reading to explore our recommendations for different skill levels, career goals, and specific areas of data engineering.

Data Engineering Foundations Specialization

Embarking on a career in data engineering requires a solid foundation, and the “Data Engineering Foundations Specialization” on Coursera is designed to build that foundation from the ground up.

This set of courses is tailored for those who are eager to step into the rapidly expanding field of data engineering.

The journey begins with “Introduction to Data Engineering,” a course that lays the groundwork for understanding the essentials of the profession.

You’ll explore the roles of data engineers, data scientists, and data analysts, and learn about the ecosystem in which they operate.

The course covers a variety of data structures and introduces you to relational and NoSQL databases, as well as big data processing tools.

By the end of this course, you’ll have practical experience with data platforms and a clear understanding of data security principles.

If you are new to programming, “Python for Data Science, AI & Development” is an invaluable resource.

Python’s versatility makes it a key language in data engineering, and this course will guide you from the basics to more complex concepts.

You’ll gain proficiency in Python by working with data structures and libraries, and you’ll apply your skills to tasks like data collection and web scraping.

Once you’ve mastered Python, the “Python Project for Data Engineering” course offers a chance to apply your skills in a project-based setting.

This course simulates the role of a data engineer, challenging you to extract, transform, and load data using Python.

It’s an opportunity to demonstrate your abilities and add a substantial project to your professional portfolio.
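
To make the extract, transform, load idea concrete, here is a minimal sketch in Python using only the standard library. It is not taken from the course: the sample data, the `people` table, and the function names are all hypothetical, and a real project would typically read from files or an API rather than an inline string.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; a real project would read files or call an API.
RAW_CSV = """name,height_in,weight_lb
alex,65.78,112.99
ajay,71.52,136.49
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: convert inches to metres and pounds to kilograms."""
    return [
        (
            row["name"],
            round(float(row["height_in"]) * 0.0254, 2),
            round(float(row["weight_lb"]) * 0.45359237, 2),
        )
        for row in rows
    ]


def load(records, conn):
    """Load: write the transformed records into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS people "
        "(name TEXT, height_m REAL, weight_kg REAL)"
    )
    conn.executemany("INSERT INTO people VALUES (?, ?, ?)", records)
    conn.commit()


def run_etl(text, conn):
    """Run the three stages in order and return the number of loaded rows."""
    load(transform(extract(text)), conn)
    return conn.execute("SELECT COUNT(*) FROM people").fetchone()[0]
```

Calling `run_etl(RAW_CSV, sqlite3.connect(":memory:"))` runs the whole pipeline against an in-memory database. Keeping each stage in its own function makes it easier to test and swap stages independently.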

Understanding databases is crucial for any data engineer, and “Introduction to Relational Databases (RDBMS)” provides a comprehensive look at how data is stored and managed.

You’ll become familiar with various database management systems and learn how to use tools for creating and maintaining databases.

The course culminates in a final assignment where you’ll design and implement a database for a specific analytical need.

Finally, “Databases and SQL for Data Science with Python” deepens your knowledge of SQL, the standard language for interacting with databases.

Starting with fundamental SQL commands, you’ll progress to more advanced techniques, learning how to query data from multiple tables and use SQL in conjunction with Python for data analysis.

The course includes hands-on labs and projects, ensuring that you can apply what you’ve learned to real-world scenarios.
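
As a rough idea of what querying multiple tables from Python can look like, the sketch below joins two tables with the standard-library `sqlite3` module. The `departments` and `employees` tables and their contents are invented for illustration, and the course itself may use a different database and driver.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with two small, hypothetical tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE employees (
            id INTEGER PRIMARY KEY,
            name TEXT,
            salary REAL,
            dept_id INTEGER REFERENCES departments(id)
        );
        INSERT INTO departments VALUES (1, 'Data'), (2, 'Finance');
        INSERT INTO employees VALUES
            (1, 'Ana', 90000, 1),
            (2, 'Ben', 70000, 1),
            (3, 'Cy', 80000, 2);
        """
    )
    return conn


def avg_salary_by_department(conn):
    """Join employees to departments and average salaries per department."""
    query = """
        SELECT d.name, AVG(e.salary)
        FROM employees AS e
        JOIN departments AS d ON d.id = e.dept_id
        GROUP BY d.name
        ORDER BY d.name
    """
    return conn.execute(query).fetchall()
```

`avg_salary_by_department(build_demo_db())` returns one `(department, average salary)` tuple per department, which Python code can then analyze further.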

Whether you’re new to the field or looking to formalize your skills, these courses provide a step-by-step path to becoming a proficient data engineer.

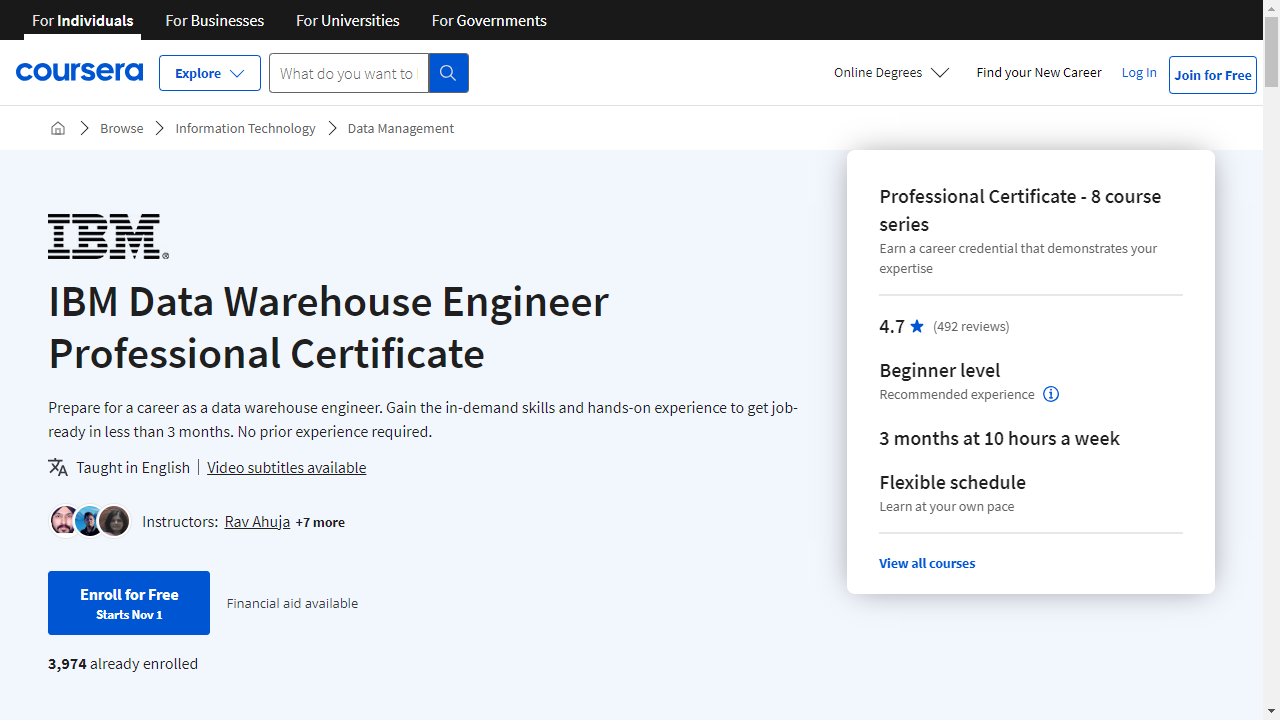

IBM Data Warehouse Engineer Professional Certificate

The first course, “Introduction to Data Engineering,” provides a solid foundation in data engineering, covering everything from data structures and file formats to the roles of data professionals.

The course also introduces you to various types of data repositories and big data processing tools.

Next, the “Introduction to Relational Databases (RDBMS)” course dives deeper into the world of data engineering.

You’ll gain a solid understanding of how data is stored, processed, and accessed in relational databases.

The course also provides hands-on exercises, allowing you to work with real databases and explore real-world datasets.

The “SQL: A Practical Introduction for Querying Databases” course offers a comprehensive introduction to SQL, covering everything from basic CRUD operations to advanced SQL techniques.

The “Hands-on Introduction to Linux Commands and Shell Scripting” course provides a practical understanding of common Linux/UNIX shell commands, which are essential for data engineers.

The course also includes hands-on labs, allowing you to practice and apply what you learn.

The “Relational Database Administration (DBA)” course is a must for anyone interested in database management.

It covers everything from database optimization to security and backup procedures.

The course also includes a final project where you’ll assume the role of a database administrator, providing a real-world application of the skills you’ve learned.

The “ETL and Data Pipelines with Shell, Airflow and Kafka” course focuses on the two main approaches to converting raw data into analytics-ready data: ETL (extract, transform, load) and ELT (extract, load, transform).

You’ll learn about the tools and techniques used with ETL and Data pipelines, and by the end of the course, you’ll know how to use Apache Airflow and Apache Kafka to build data pipelines.
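
As a rough illustration of how ETL and ELT differ, the sketch below cleans the same hypothetical records both ways, using SQLite as a stand-in for a data warehouse. The table names and sample rows are assumptions made for this example. It does not use Airflow or Kafka, which the course covers separately.

```python
import sqlite3

# Hypothetical raw records; the second value is not a valid number.
RAW_ROWS = [("a", "10"), ("b", "x"), ("c", "30")]


def etl(conn, rows):
    """ETL: clean in application code first, then load only clean rows."""
    clean = [(key, int(value)) for key, value in rows if value.isdigit()]
    conn.execute("CREATE TABLE etl_clean (key TEXT, value INTEGER)")
    conn.executemany("INSERT INTO etl_clean VALUES (?, ?)", clean)
    return conn.execute(
        "SELECT key, value FROM etl_clean ORDER BY key"
    ).fetchall()


def elt(conn, rows):
    """ELT: load raw rows as-is, then transform inside the database."""
    conn.execute("CREATE TABLE raw (key TEXT, value TEXT)")
    conn.executemany("INSERT INTO raw VALUES (?, ?)", rows)
    # Keep only values made up entirely of digits, then cast them.
    conn.execute(
        """
        CREATE TABLE elt_clean AS
        SELECT key, CAST(value AS INTEGER) AS value
        FROM raw
        WHERE value <> '' AND value NOT GLOB '*[^0-9]*'
        """
    )
    return conn.execute(
        "SELECT key, value FROM elt_clean ORDER BY key"
    ).fetchall()
```

Both functions end with the same clean table. The difference is where the transformation runs: in the pipeline code for ETL, or in the database after loading for ELT, which also leaves the raw data available for later reprocessing.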

The “Getting Started with Data Warehousing and BI Analytics” course covers everything from the design and deployment of data warehouses to the use of BI tools for data analysis.

The course also includes hands-on labs, allowing you to apply what you learn.

Finally, the “Data Warehousing Capstone Project” course allows you to apply everything you’ve learned in a real-world context.

You’ll assume the role of a Junior Data Engineer and tackle a data warehouse engineering problem.

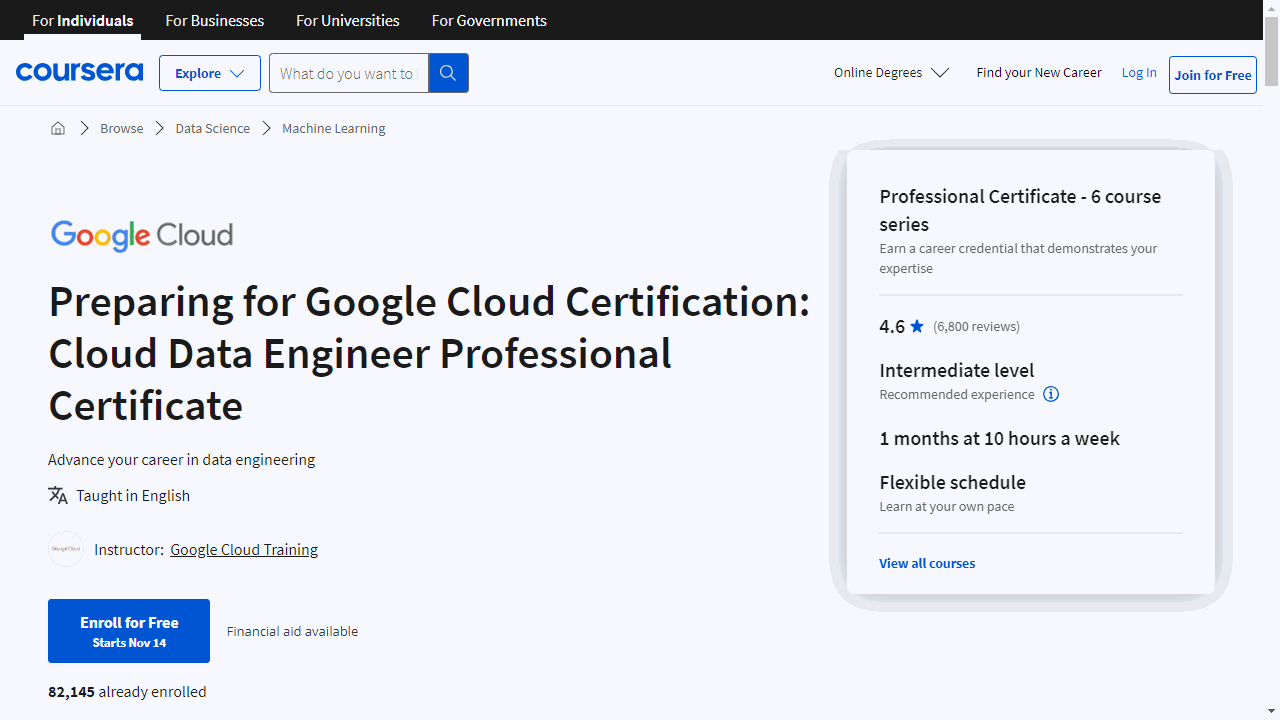

Google Cloud Data Engineer Professional Certificate

This series of courses is designed to equip you with the skills needed to pass the Google Cloud Professional Data Engineer certification exam.

The journey begins with “Google Cloud Big Data and Machine Learning Fundamentals,” where you’ll delve into the essentials of big data and machine learning on Google Cloud.

This course isn’t just about theory; it’s about applying what you learn to real-world scenarios, using tools like TensorFlow and BigQuery to process and analyze large datasets.

As you progress to “Modernizing Data Lakes and Data Warehouses with Google Cloud,” you’ll tackle the distinctions between data lakes and data warehouses, knowledge that is critical for any data engineer.

Understanding these storage solutions and their use cases is key to managing data effectively, and this course provides the technical detail you need to make informed decisions.

In “Building Batch Data Pipelines on Google Cloud,” the focus shifts to data transformation.

Here, you’ll explore various methods for batch data processing, including the use of BigQuery and Dataflow.

The course offers practical experience, allowing you to build your own data pipeline components, a skill that’s invaluable in the field.

For real-time data processing, “Building Resilient Streaming Analytics Systems on Google Cloud” has you covered.

You’ll learn to handle streaming data using Pub/Sub, apply transformations with Dataflow, and store processed records for analysis.

This course is about keeping pace with the data that businesses generate every second, ensuring that your analytics are current and actionable.

“Smart Analytics, Machine Learning, and AI on Google Cloud” is where data engineering meets the future.

This course introduces you to the integration of machine learning into data pipelines, covering both AutoML for ease of use and more customized solutions using Notebooks and BigQuery ML.

It’s a practical approach to making your data work smarter, not harder.

Lastly, “Preparing for your Professional Data Engineer Journey” is the strategic final step.

It’s designed to help you assess your readiness for the Google Cloud Professional Data Engineer certification exam, one of a few credentials that can significantly boost your career prospects.

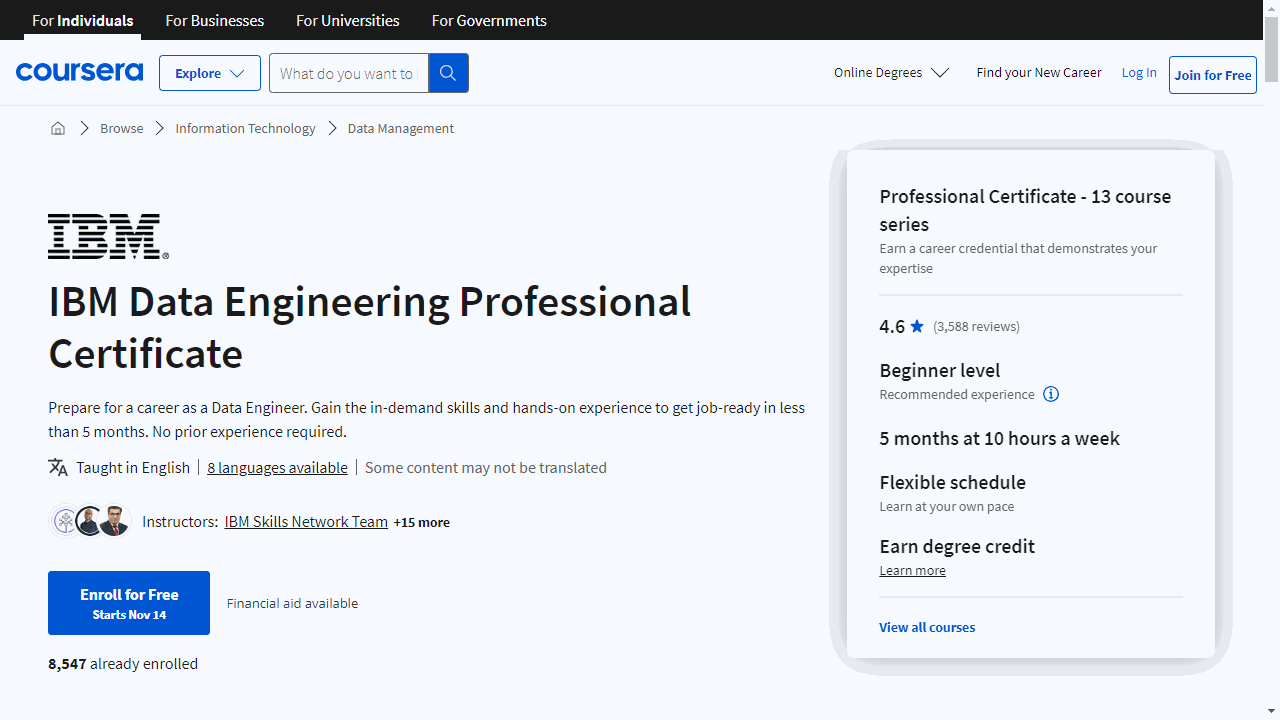

IBM Data Engineering Professional Certificate

Begin with “Introduction to Data Engineering,” where you’ll familiarize yourself with the fundamentals of the field.

You’ll learn about data structures and file formats, and dive into the world of relational and NoSQL databases.

Understanding the data engineering lifecycle, including security and compliance, sets a strong base for your journey ahead.

“Python for Data Science, AI & Development” is where you’ll harness the power of Python, a critical tool in your data engineering toolkit.

You’ll start from the basics and quickly move to more complex concepts, using libraries that are staples in data manipulation and analysis.

This course ensures you’re well-prepared to handle real-world data tasks.

To solidify your Python skills, “Python Project for Data Engineering” offers a hands-on experience where you’ll apply your knowledge to practical data engineering problems.

Completing this project not only boosts your confidence but also enhances your portfolio, showcasing your ability to tackle data challenges head-on.

Databases are the cornerstone of data engineering, and “Introduction to Relational Databases (RDBMS)” provides a comprehensive look into their design and management.

You’ll get comfortable with SQL and database tools, setting you up to efficiently store and retrieve data.

Building on your database knowledge, “Databases and SQL for Data Science with Python” takes you deeper into SQL’s capabilities.

You’ll learn to craft sophisticated queries and work with data in more complex ways, integrating SQL with Python to elevate your data analysis skills.

The command line is an indispensable part of a data engineer’s arsenal, and “Hands-on Introduction to Linux Commands and Shell Scripting” equips you with the necessary command-line proficiency.

You’ll learn to navigate the Linux environment, automate tasks with shell scripting, and manage data processes effectively.

As you progress, “Relational Database Administration (DBA)” teaches you to maintain the health and performance of databases.

You’ll delve into optimization, security, and disaster recovery, ensuring data integrity and availability.

For a contemporary approach, “ETL and Data Pipelines with Shell, Airflow and Kafka” introduces you to modern data pipeline construction.

You’ll explore the nuances of data processing and learn to use cutting-edge tools to build scalable and efficient data pipelines.

In “Getting Started with Data Warehousing and BI Analytics,” you’ll bridge the gap between data storage and business decision-making.

This course covers the design and use of data warehouses and BI tools, enabling you to transform data into actionable insights.

The “Introduction to NoSQL Databases” course broadens your database knowledge by diving into the world of NoSQL.

You’ll explore different NoSQL database types and gain practical experience in managing large, unstructured datasets.

For those interested in big data, “Introduction to Big Data with Spark and Hadoop” is an essential course.

You’ll learn how to process vast amounts of data using distributed computing, preparing you for the challenges of big data analytics.

“Machine Learning with Apache Spark” is where data engineering meets machine learning.

You’ll explore the creation of ML models and discover how to integrate them into data pipelines, a skill that’s increasingly valuable in today’s data-driven world.

Finally, the “Data Engineering Capstone Project” is your opportunity to bring all your newly acquired skills to bear on a real-world scenario.

This project simulates the experience of a data engineer, challenging you to design and implement a comprehensive data analytics platform.

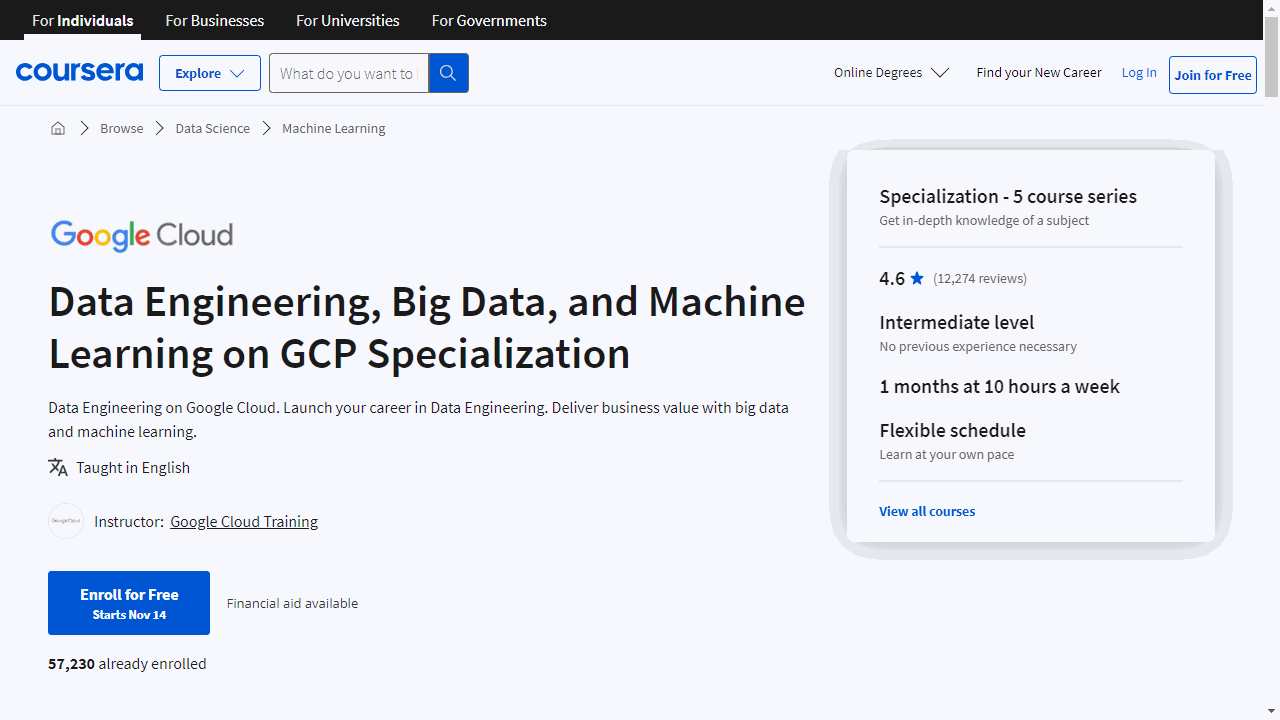

Data Engineering, Big Data, and Machine Learning on GCP Specialization

This series of courses is designed to give you a practical understanding of data engineering on the Google Cloud Platform, a skill in high demand.

The specialization begins with “Google Cloud Big Data and Machine Learning Fundamentals,” which lays the foundation for your journey.

You’ll learn about the data-to-AI lifecycle and how to leverage Google Cloud’s big data and machine learning services.

The course equips you with knowledge on tools like TensorFlow and BigQuery, and you’ll learn to design data pipelines using Vertex AI.

Moving on, “Modernizing Data Lakes and Data Warehouses with Google Cloud” delves into the storage solutions that underpin all data analysis.

Understanding the distinction between data lakes and warehouses is crucial, and this course explains their use-cases and the advantages of cloud-based data engineering.

For those interested in the mechanics of data processing, “Building Batch Data Pipelines on Google Cloud” offers a deep dive into the methodologies of data loading and transformation.

You’ll gain experience with Google Cloud’s suite of data transformation tools, including Dataproc and Dataflow, and learn to manage pipelines effectively.

The specialization also includes “Building Resilient Streaming Analytics Systems on Google Cloud,” which focuses on the real-time aspect of data processing.

You’ll explore how to handle streaming data with Pub/Sub and how to process it for immediate insights using Dataflow, storing the results in BigQuery or Cloud Bigtable.

Lastly, “Smart Analytics, Machine Learning, and AI on Google Cloud” introduces you to the integration of machine learning into data pipelines.

Whether you’re looking to use AutoML for straightforward applications or delve into custom machine learning models with Notebooks and BigQuery ML, this course covers the spectrum of tools available on GCP.

Each course in the specialization provides hands-on experience through Qwiklabs, allowing you to apply what you’ve learned in a controlled environment.

This practical approach is invaluable, ensuring that you’re not just passively absorbing information but actively engaging with the material.

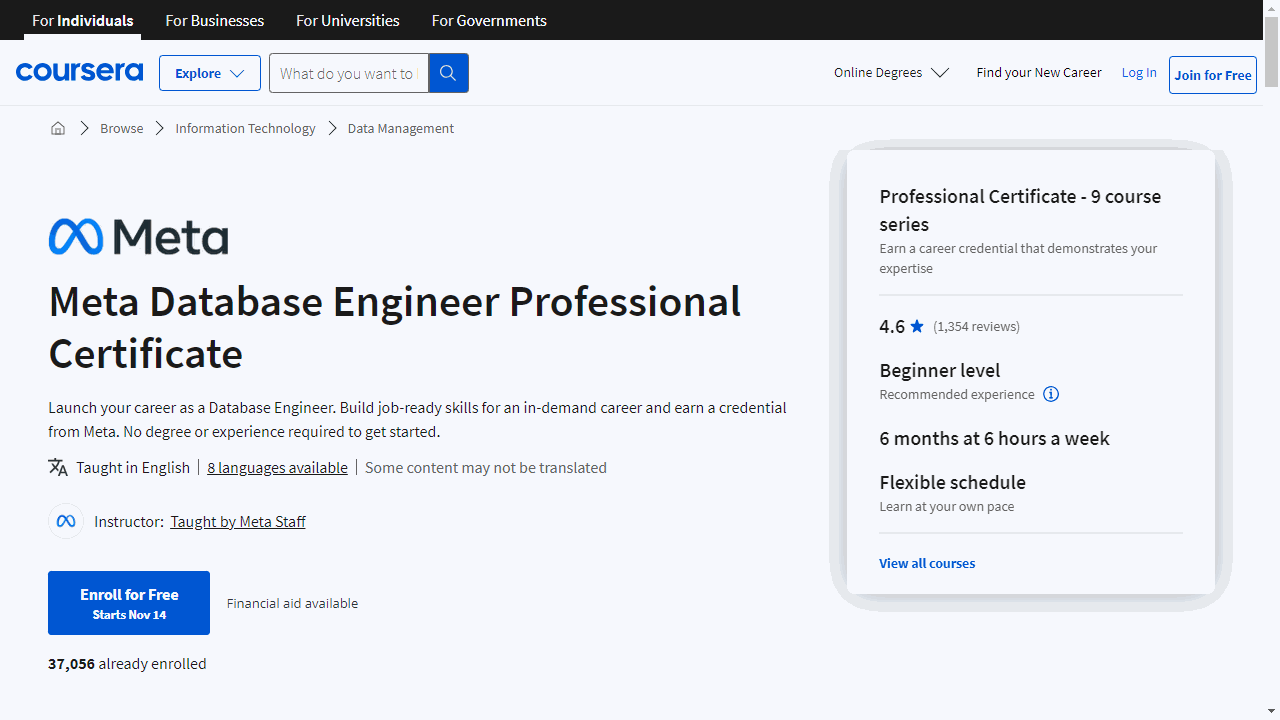

Meta Database Engineer Professional Certificate

The journey begins with “Introduction to Databases,” where you’ll gain a foundational understanding of database principles and get to grips with SQL.

This course sets the stage for what’s to come, ensuring you have a strong base to build upon.

Moving on to “Version Control,” you’ll learn the essentials of collaborative software development.

This course teaches you how to use Git and Linux commands, tools that are crucial for managing complex projects and workflows.

As you progress to “Database Structures and Management with MySQL,” your SQL skills will deepen.

You’ll learn to manipulate databases with more sophisticated queries and understand the importance of database relationships and normalization.

“Advanced MySQL Topics” is where your database skills become more nuanced.

You’ll tackle complex SQL statements, learn to optimize queries, and understand advanced features like triggers and events.

This course is about refining your skills and preparing you for more complex database tasks.

With “Programming in Python,” you’ll step into the world of programming, learning Python from the ground up.

This course is essential for back-end development and database engineering, as Python is a key language in these domains.

In “Database Clients,” you’ll bridge the gap between Python programming and database management, learning to create applications that interact with MySQL databases.

This skill is invaluable for developing custom database solutions.

“Advanced Data Modeling” takes you into the realm of data warehousing and ETL processes.

Here, you’ll learn about the storage, optimization, and administration of large-scale databases, preparing you for challenges in managing big data.

The “Database Engineer Capstone” project is where you’ll apply everything you’ve learned.

You’ll build a database solution from the ground up, demonstrating your ability to tackle real-world problems.

Lastly, “Coding Interview Preparation” will help you navigate the job market.

This course provides insights into the interview process, teaching you how to approach coding problems and communicate effectively.

Throughout the certificate program, you’ll develop a diverse set of skills, including database design, MySQL, Python programming, and more.

The courses are structured to build on each other, ensuring a smooth learning curve.

This professional certificate is accessible to anyone eager to learn, regardless of previous education or experience.