Big data is a rapidly growing field that deals with massive datasets and the tools to analyze them.

As data continues to explode, the need for skilled big data professionals who can extract valuable insights is only growing.

Learning big data opens up a world of opportunities in various industries, from tech to finance to healthcare.

Finding the right big data course on Coursera can be overwhelming.

With so many options available, you’re probably looking for a program that’s comprehensive, engaging, and taught by experts, and that also fits your learning style and goals.

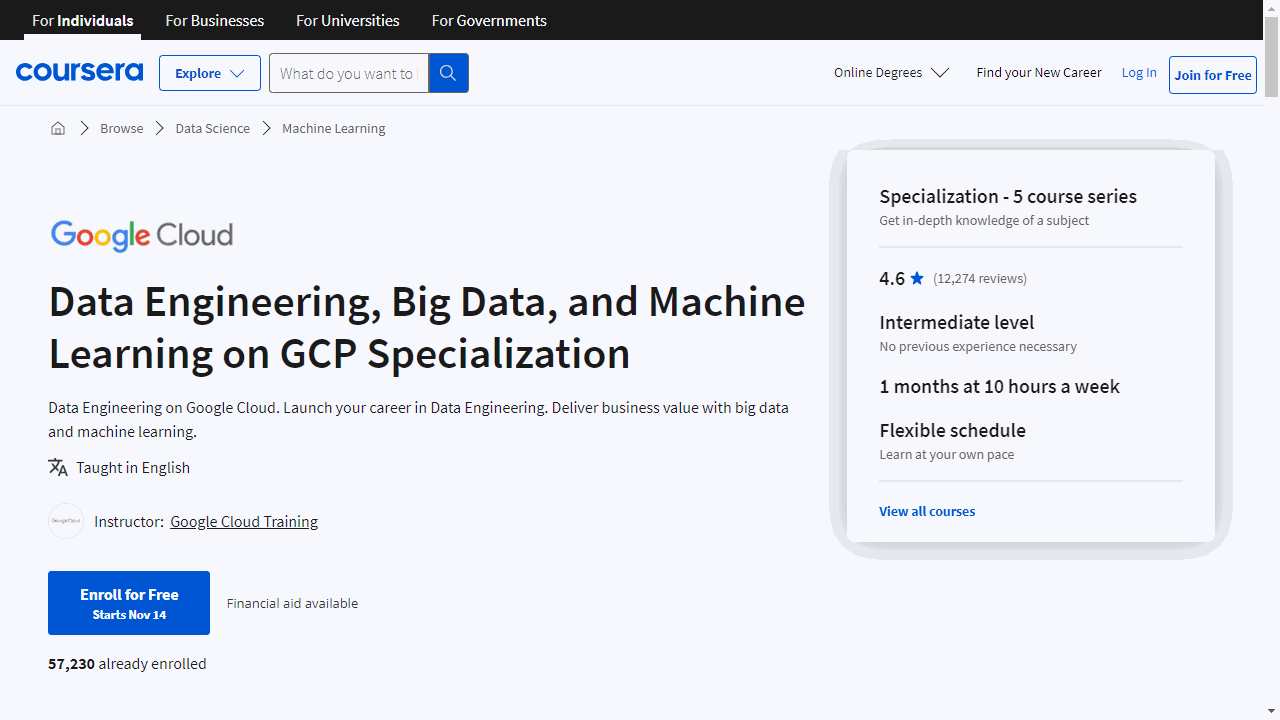

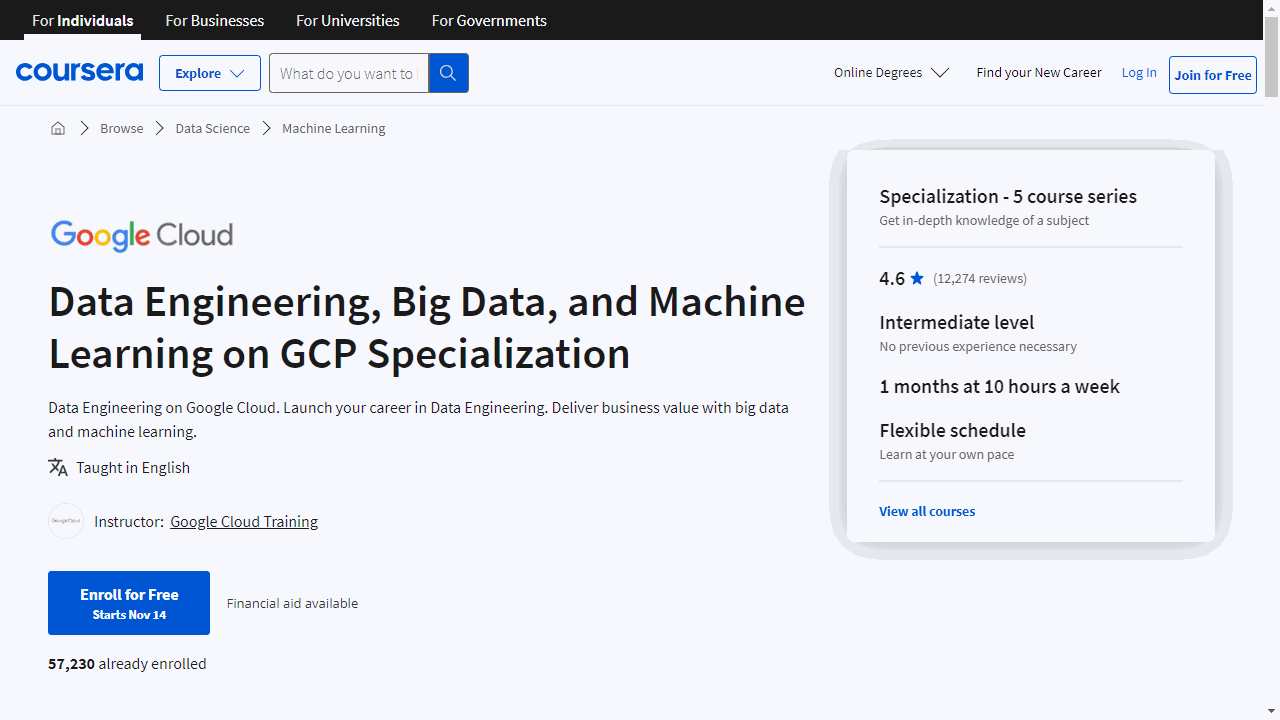

For the best big data course overall on Coursera, we recommend the Data Engineering, Big Data, and Machine Learning on GCP Specialization.

This specialization is designed to give you a practical understanding of data engineering on the Google Cloud Platform, a skill in high demand.

It covers a wide range of topics, from fundamental concepts to advanced techniques, and includes hands-on projects to solidify your understanding.

This is just one of many great big data courses on Coursera.

Keep reading to explore our recommendations for different learning levels and career goals, as well as courses focused on specific big data technologies and tools.

Data Engineering, Big Data, and Machine Learning on GCP Specialization

The five courses in this series build on one another, moving from fundamentals to storage, batch and streaming pipelines, and machine learning on Google Cloud.

The specialization begins with “Google Cloud Big Data and Machine Learning Fundamentals,” which lays the foundation for your journey.

You’ll learn about the data-to-AI lifecycle and how to leverage Google Cloud’s big data and machine learning services.

The course introduces tools like TensorFlow and BigQuery, and you’ll learn how machine learning workflows are built with Vertex AI.

Moving on, “Modernizing Data Lakes and Data Warehouses with Google Cloud” delves into the storage solutions that underpin all data analysis.

Understanding the distinction between data lakes and data warehouses is crucial, and this course explains their use cases and the advantages of cloud-based data engineering.

For those interested in the mechanics of data processing, “Building Batch Data Pipelines on Google Cloud” offers a deep dive into the methodologies of data loading and transformation.

You’ll gain experience with Google Cloud’s suite of data transformation tools, including Dataproc and Dataflow, and learn to manage pipelines effectively.

The specialization also includes “Building Resilient Streaming Analytics Systems on Google Cloud,” which focuses on the real-time aspect of data processing.

You’ll explore how to handle streaming data with Pub/Sub and how to process it for immediate insights using Dataflow, storing the results in BigQuery or Cloud Bigtable.

Lastly, “Smart Analytics, Machine Learning, and AI on Google Cloud” introduces you to the integration of machine learning into data pipelines.

Whether you’re looking to use AutoML for straightforward applications or delve into custom machine learning models with Notebooks and BigQuery ML, this course covers the spectrum of tools available on GCP.

Each course in the specialization provides hands-on experience through Qwiklabs, allowing you to apply what you’ve learned in a controlled environment.

This practical approach is invaluable, ensuring that you’re not just passively absorbing information but actively engaging with the material.

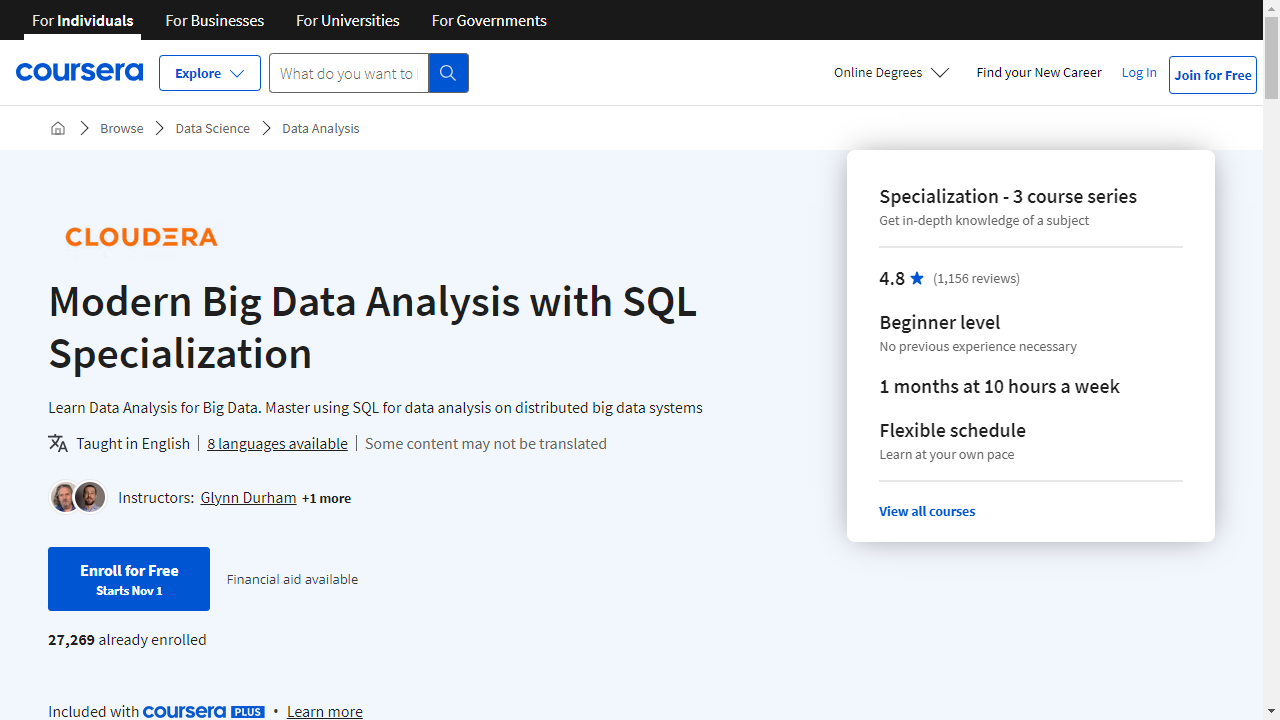

Modern Big Data Analysis with SQL Specialization

This specialization is a comprehensive suite of courses that will equip you with the skills and knowledge to navigate the world of large-scale datasets using SQL.

SQL is an essential skill for data science, and this specialization will give you a solid foundation in the language.

The first course, Foundations for Big Data Analysis with SQL, will teach you to distinguish between operational and analytic databases, understand how database and table design provide structures for working with data, and appreciate how the volume and variety of data affect your choice of an appropriate database system.

Next up is Analyzing Big Data with SQL.

This course dives deeper into the SQL SELECT statement and its main clauses. It focuses on the big data SQL engines Apache Hive and Apache Impala, but the material also applies to SQL on traditional RDBMSs.

You’ll learn to explore and navigate databases and tables, understand the basics of SELECT statements, and work with sorting and limiting results.
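To give a feel for the kind of query this course builds toward, here is a minimal sketch of a SELECT with filtering, sorting, and limiting. It uses Python's built-in `sqlite3` module as a stand-in for Hive or Impala (an assumption made so the example runs anywhere), and the `orders` table and its columns are hypothetical.

```python
import sqlite3

# In-memory SQLite stands in for a Hive or Impala table (assumption);
# the table name and columns are made up for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("ana", 120.0), ("ben", 45.5), ("cy", 300.0), ("dee", 80.0)],
)

# SELECT picks columns, WHERE filters rows, ORDER BY sorts, LIMIT caps the result.
top_orders = conn.execute(
    "SELECT customer, amount FROM orders "
    "WHERE amount > 50 ORDER BY amount DESC LIMIT 2"
).fetchall()
conn.close()

print(top_orders)
```

The same clauses exist in Hive and Impala, although data types and some functions differ between engines.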

The final course, Managing Big Data in Clusters and Cloud Storage, teaches you how to manage big datasets, load them into clusters and cloud storage, and apply structure to the data for querying using distributed SQL engines like Apache Hive and Apache Impala.

You’ll learn to use different tools to browse existing databases and tables in big data systems, explore files in distributed filesystems and cloud storage, and create and manage databases and tables.

One standout feature of these courses is the learning environment.

You’ll download and install a virtual machine, along with the software to run it, so you can practice in an environment that resembles what you’d use in industry. Few comparable courses offer this kind of setup.

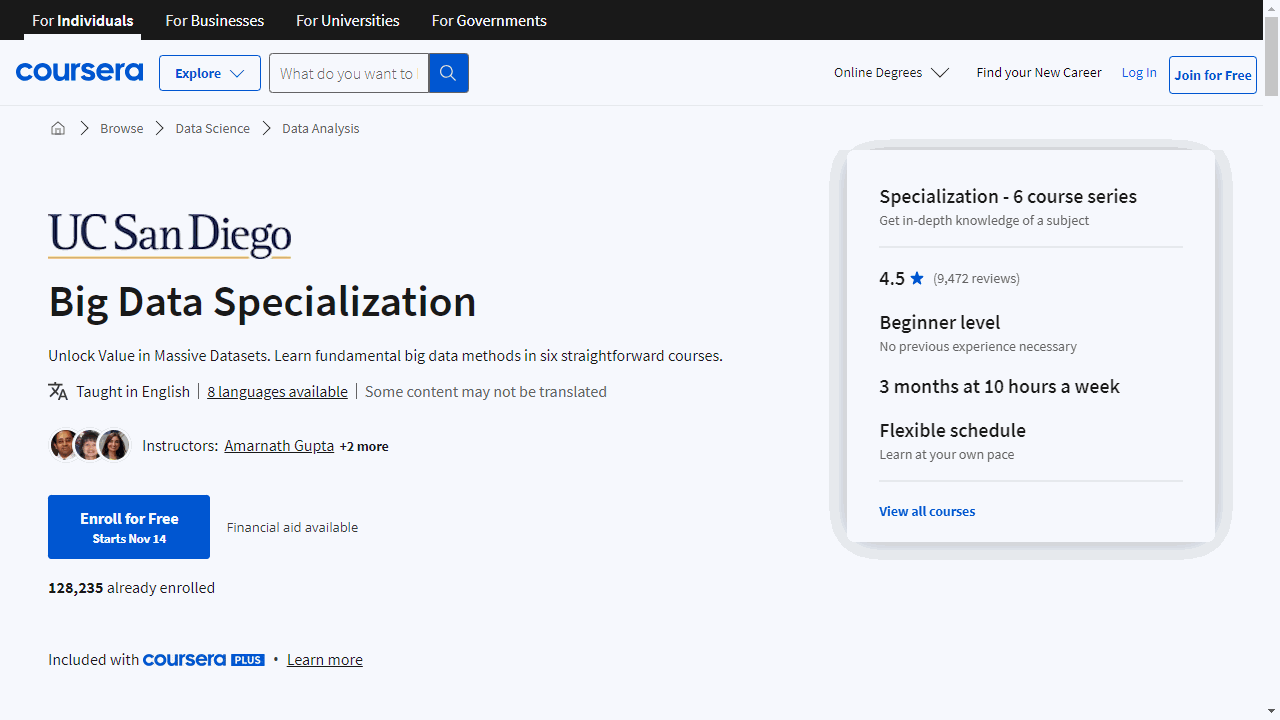

Big Data Specialization

Start with “Introduction to Big Data.” It’s here that you’ll get to grips with the essentials of the big data landscape, learning about Hadoop and the principles that make data analysis possible.

The course breaks down complex concepts into digestible chunks, ensuring you can discuss big data with confidence and apply your insights practically.

As you progress to “Big Data Modeling and Management Systems,” you’ll delve into the nitty-gritty of data organization.

This course demystifies how to collect and store data, offering a clear view of various management systems.

You’ll get hands-on experience with tools like Neo4j and SparkSQL, empowering you to structure your data effectively.

In “Big Data Integration and Processing,” the focus shifts to the transformation of raw data into actionable insights.

You’ll learn to navigate databases and big data systems, integrating and processing data using Hadoop and Spark.

This course is about connecting the dots, ensuring you can piece together disparate data sources into a coherent whole.

“Machine Learning With Big Data” is where prediction meets practice.

This course introduces you to machine learning techniques that allow you to create models that learn from data.

By using open-source tools, you’ll be able to tackle larger datasets and develop scalable solutions.

For those intrigued by the interconnectedness of data, “Graph Analytics for Big Data” offers a fascinating exploration of graph-structured data.

You’ll learn to model complex relationships and analyze them to reveal deep insights.

This course is particularly useful for understanding social networks, organizational structures, and other interconnected systems.
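As a rough illustration of what modeling and analyzing relationships can look like, the sketch below stores a small, hypothetical social network as an adjacency dictionary and computes two simple measures: each person's number of connections and the number of hops between two people. It is plain Python rather than the graph tools used in the course.

```python
from collections import deque

# Hypothetical undirected social graph: each person maps to their direct contacts.
graph = {
    "ana": {"ben", "cy"},
    "ben": {"ana", "dee"},
    "cy": {"ana", "dee"},
    "dee": {"ben", "cy", "eli"},
    "eli": {"dee"},
}

def degrees(g):
    """Number of direct connections per node."""
    return {node: len(neighbors) for node, neighbors in g.items()}

def hops(g, start, goal):
    """Breadth-first search: edges on a shortest path, or None if unreachable."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for nxt in g[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None

print(degrees(graph))
print(hops(graph, "ana", "eli"))
```

Degree and shortest-path distance are among the simplest graph measures; larger analyses typically rely on a dedicated graph engine.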

The “Big Data - Capstone Project” is the culmination of your learning journey.

Here, you’ll apply everything you’ve learned to build a comprehensive big data ecosystem.

This project-based course simulates real-world data analysis and gives you the opportunity to create compelling reports, potentially catching the attention of industry leaders.

The specialization is offered by UC San Diego, and the capstone is likely to be a highlight of your learning experience.

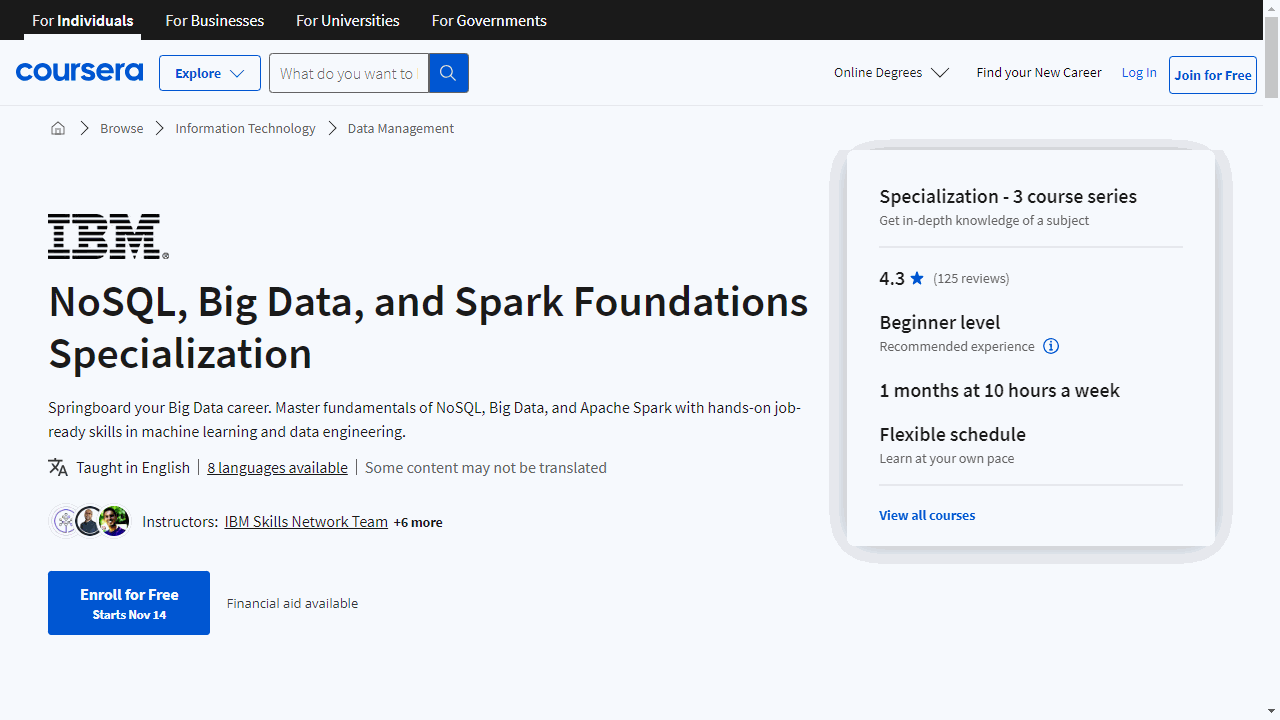

NoSQL, Big Data, and Spark Foundations Specialization

This specialization, offered by IBM, begins with “Introduction to NoSQL Databases,” a course that lays the foundation for understanding NoSQL technology.

You’ll explore the evolution of databases and learn about the distinct categories of NoSQL databases, each with its own strengths.

The course emphasizes practical skills, such as performing CRUD operations in MongoDB and managing data in Cassandra, ensuring that you can apply your learning to real-world scenarios.

Moving on to “Introduction to Big Data with Spark and Hadoop,” you’ll delve into the characteristics of big data and the tools used to process it.

This course demystifies the Hadoop ecosystem, introducing you to components like HDFS and MapReduce, and explains how Hive can simplify working with large datasets.
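If MapReduce is new to you, the idea can be sketched in a few lines of plain Python. This is not Hadoop code; it only mimics the three phases (map, shuffle, reduce) in memory on a word count, and the function names are made up for illustration.

```python
from collections import defaultdict

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle_phase(pairs):
    """Shuffle: group all values that share a key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(grouped):
    """Reduce: collapse each key's values into a single count."""
    return {key: sum(values) for key, values in grouped.items()}

lines = ["big data big insights", "data pipelines move data"]
pairs = [pair for line in lines for pair in map_phase(line)]
word_counts = reduce_phase(shuffle_phase(pairs))

print(word_counts)
```

In a real cluster, the map and reduce steps run in parallel on different machines and the shuffle moves data between them over the network.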

Apache Spark is also covered, giving you insight into its architecture and how it can be used to handle big data more efficiently.

The inclusion of hands-on labs using contemporary tools like Docker and Jupyter Notebooks helps solidify your understanding.

The specialization culminates with “Machine Learning with Apache Spark,” where you’ll bridge the gap between big data processing and machine learning.

This course provides a clear explanation of machine learning concepts and demonstrates how Apache Spark can be leveraged to build predictive models.

Through practical exercises, you’ll gain experience with SparkML, learning to create and evaluate machine learning models.

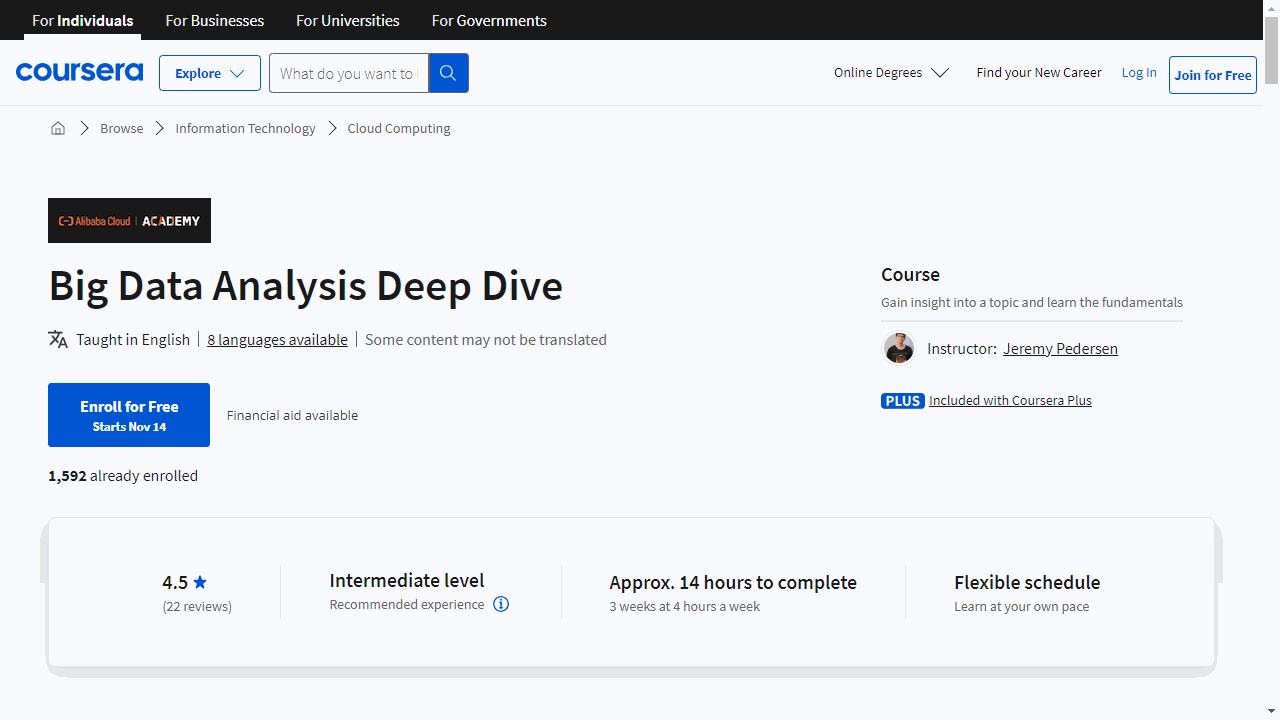

Big Data Analysis Deep Dive

This course begins with an introduction to Python Pandas, tailored for real-world business scenarios.

You’ll learn to navigate the complexities of data types and tackle the challenges of raw, unprocessed data.

The focus on data scrubbing ensures that you’ll be able to clean and prepare data for analysis effectively.

As you progress, the course delves into SQL.

You’ll start with the basics, such as SELECT statements, and gradually build up to more advanced concepts, including table joins and troubleshooting.

This structured approach helps you develop a solid foundation before tackling complex queries.
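For a sense of where the SQL portion leads, here is a small sketch of a table join, again using Python's built-in `sqlite3` so it runs without any setup. The `customers` and `orders` tables are hypothetical, and the SQL dialect taught in the course may differ in details.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'ana'), (2, 'ben'), (3, 'cy');
    INSERT INTO orders VALUES (1, 20.0), (1, 5.0), (2, 12.5);
""")

# LEFT JOIN keeps customers with no orders; an inner JOIN would silently drop
# them, which is a common thing to check when a result looks too short.
order_summary = conn.execute("""
    SELECT c.name,
           COUNT(o.amount) AS n_orders,
           COALESCE(SUM(o.amount), 0.0) AS total
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    ORDER BY c.name
""").fetchall()
conn.close()

print(order_summary)
```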

Spark is another highlight of the curriculum.

You’ll explore Spark RDDs and DataFrames, gaining insights into data analysis and performance optimization.

The course also introduces Spark Streaming, a valuable skill for processing real-time data.

Integration is key in big data, and this course covers it thoroughly.

You’ll learn to use tools like MaxCompute and DataWorks, practicing data movement and gaining familiarity with the processes that keep big data ecosystems running smoothly.

Data visualization is an essential part of the course.

You’ll get hands-on experience with Python visualization tools, including Matplotlib, Pandas, and Seaborn.

The course also introduces Alibaba Cloud DataV, enabling you to create engaging and informative data dashboards.

The course is practical, with numerous demos that reinforce the concepts you learn.

By engaging with these hands-on examples, you’ll solidify your understanding and gain practical experience that can be applied in real-world situations.

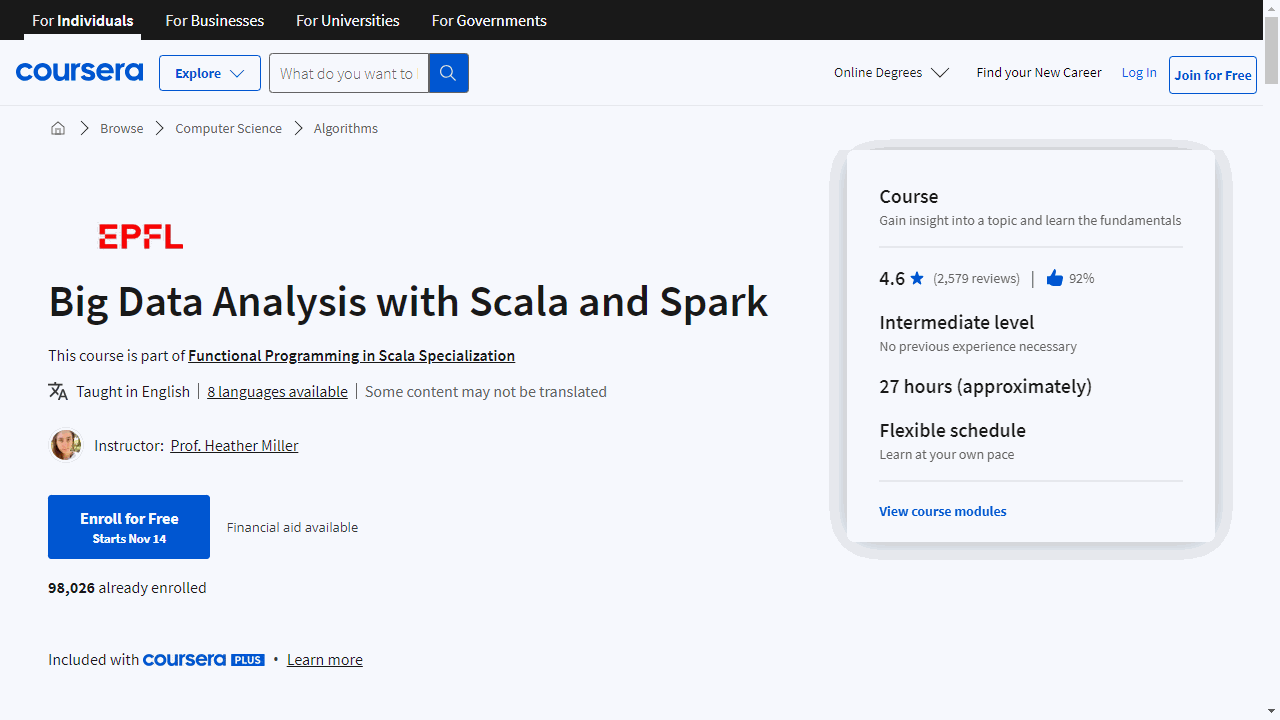

Big Data Analysis with Scala and Spark

After a short introduction, the course moves into the shift from data-parallel to distributed data-parallel processing.

This is crucial for working with big data, as it allows you to spread tasks across multiple machines.

Latency can be a big hurdle in data processing, but this course will show you how to tackle it head-on, ensuring that your data analysis is as efficient as possible.

At the core of Spark are RDDs, and you’ll gain a thorough understanding of these.

You’ll learn how to perform transformations and actions, which are fundamental operations in Spark.

Unlike standard Scala collections, Spark evaluates transformations lazily and runs them only when an action is called; you’ll discover why and how this affects your data analysis.
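One way to picture the split between transformations and actions is lazy evaluation: Spark records transformations and does the work only when an action asks for a result. The sketch below is an analogy in plain Python, not Spark code. Python's lazy `map` and `filter` play the role of transformations, and `list()` plays the role of an action.

```python
calls = []  # records every element the "transformation" actually touches

def traced_double(x):
    calls.append(x)
    return x * 2

numbers = [1, 2, 3, 4]

# "Transformations": these only build a lazy pipeline; nothing is computed yet.
doubled = map(traced_double, numbers)
over_four = filter(lambda v: v > 4, doubled)
calls_before_action = len(calls)

# "Action": asking for a concrete result forces the whole pipeline to run.
result = list(over_four)
calls_after_action = len(calls)

print(calls_before_action, result, calls_after_action)
```

The practical consequence is similar in Spark: defining a long chain of transformations is cheap, and the cost shows up when an action triggers the computation.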

Understanding cluster topology is also essential, and you’ll learn why it’s important for optimizing your data processing tasks.

The course will guide you through reduction operations and pair RDDs, giving you the ability to manipulate complex datasets with ease.

Shuffling and partitioning are topics that often confuse beginners, but the course works through them step by step.

You’ll learn what shuffling is, why it’s necessary, and how to use partitioners to optimize your data processing.

The course also demystifies the difference between wide and narrow dependencies, helping you write more efficient Spark code.
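To make shuffling and partitioning a little more concrete, here is a simplified sketch in plain Python, not Spark. A hash partitioner assigns each key to a partition, the "shuffle" moves records there, and per-key totals can then be computed inside each partition without looking at the others. The helper names are hypothetical, and `zlib.crc32` is used only because it gives the same hash on every run.

```python
import zlib

def partition_for(key, num_partitions):
    """Hash partitioner: the same key always lands in the same partition."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions

def shuffle(records, num_partitions):
    """Move each (key, value) record to the partition chosen by its key."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions

records = [("ana", 1), ("ben", 2), ("ana", 3), ("cy", 4), ("ben", 5)]
partitions = shuffle(records, 3)

# After the shuffle, each partition can total its own keys independently.
per_partition_totals = []
for part in partitions:
    local = {}
    for key, value in part:
        local[key] = local.get(key, 0) + value
    per_partition_totals.append(local)

# Keys never span partitions, so merging the local results is a plain union.
totals = {}
for local in per_partition_totals:
    totals.update(local)

print(totals)
```

In Spark terms, the shuffle is the expensive step behind a wide dependency, while work that stays inside one partition corresponds to a narrow dependency.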

You’ll also explore the handling of both structured and unstructured data, preparing you for any data scenario.

Spark SQL and DataFrames are covered extensively, with two dedicated sessions for DataFrames alone.

This ensures you’ll be comfortable with these powerful tools, enabling you to query and analyze data with the precision of a seasoned data analyst.

Finally, you’ll be introduced to Datasets, the typed API that Spark added after RDDs and DataFrames, rounding out your skill set.