Binary classification is a type of machine learning task where each example is assigned to one of two classes.

For example, an email can be classified as either ‘spam’ or ‘not spam’, or a tumor can be ‘malignant’ or ‘benign’.

When you have more than two classes, it’s called multiclass classification.

We can use various algorithms to classify the data points.

These algorithms include logistic regression, decision trees, random forests, support vector machines, and gradient boosting algorithms like XGBoost.

XGBoost, short for eXtreme Gradient Boosting, is a popular machine learning algorithm for structured (tabular) data.

It’s a decision-tree-based ensemble machine learning algorithm that uses a gradient boosting framework.

In the next sections, we’ll dive into how to use XGBoost for binary classification in Python.

We’ll use the Red Wine dataset. It contains 11 features that describe the chemical properties of different wines and a quality score for each.

Let’s get started!

Installing XGBoost In Python

Before we can start using XGBoost, we need to install it.

XGBoost can be installed using pip, which is a package manager for Python.

To install it, you can use the following command in your terminal:

pip install xgboost

If you are using a Jupyter notebook, you can run this command in a code cell by prefixing it with an exclamation mark:

!pip install xgboost

You can also install it with conda or mamba:

conda install -c conda-forge xgboost

mamba install -c conda-forge xgboost

After running one of these commands, XGBoost should be installed and ready to use.

You can check if it’s installed correctly by importing it in your Python script:

import xgboost as xgb

If this command runs without any errors, congratulations! You have successfully installed XGBoost.

Now, let’s move on to the next section where we will discuss the loss functions for binary classification.

Objective (Loss) Functions For Binary Classification

In XGBoost, the objective function, also known as the loss function, is used to measure the difference between the predicted and actual outcomes.

It is the quantity the model minimizes during training: XGBoost uses its first and second derivatives (gradient and Hessian) to decide how each new tree should correct the current predictions.

Sometimes it’s the same as the evaluation metric, but it doesn’t have to be.

For binary classification, XGBoost provides several objective functions:

reg:logistic and binary:logistic

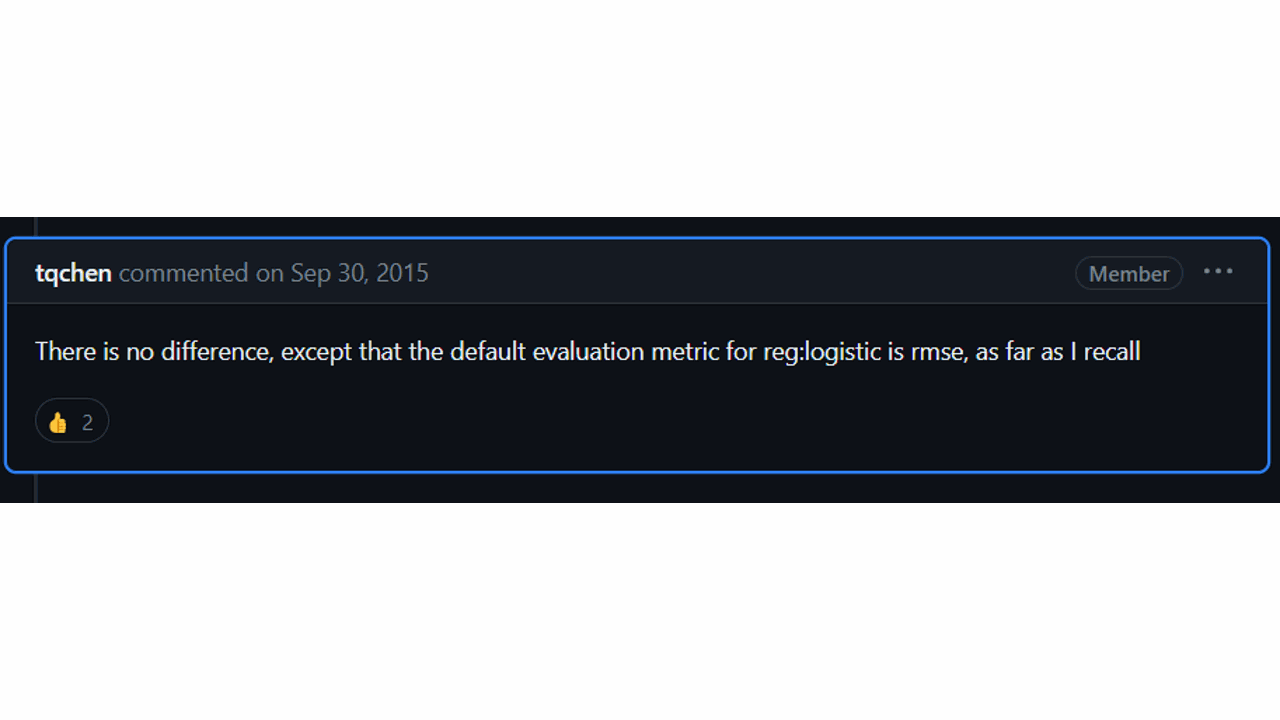

You may get confused at first because there are two objective functions with very similar names: reg:logistic and binary:logistic.

According to the library’s creator, they are the same under the hood.

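The only difference you may notice is that, if you use XGBoost’s native API to train your model, the default evaluation metric will be RMSE for reg:logistic and log loss (logloss) for binary:logistic. XGBoost versions before 1.3.0 used the classification error rate as the default for binary:logistic instead.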

binary:logitraw

This objective also performs logistic regression, but it returns the raw, untransformed scores (log-odds), i.e. the output before the sigmoid function is applied.

To turn these raw scores into probabilities, pass them through a sigmoid function, which maps any real number to a value between 0 and 1.

binary:hinge

This function implements the hinge loss for binary classification.

The hinge loss is used for “maximum-margin” classification, most notably for support vector machines (SVMs).

It can offer better generalization than the logistic loss in some cases, but the downside is that it can only output 0 or 1 instead of probabilities.

To get probabilities, you would need to calibrate the model’s raw scores using a method like Platt scaling.

Experiment with different functions to see which one works best for your specific use case.

In the next section, we’ll load our data and start training our XGBoost model.

Loading The Data

To load the data, we’ll use the pandas library, a popular Python library for working with tabular data.

If you don’t have it installed, you can do so using pip:

pip install pandas

Now, let’s load the Red Wine dataset.

The dataset is available as a CSV file on Kaggle.

We’ll use the pandas function read_csv() to load the data into a DataFrame.

```python
import pandas as pd

# Load the data
data = pd.read_csv('winequality-red.csv')

# Display the first few rows of the data
data.head()
```

| fixed acidity | volatile acidity | citric acid | residual sugar | chlorides | free sulfur dioxide | total sulfur dioxide | density | pH | sulphates | alcohol | quality |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 7.4 | 0.7 | 0 | 1.9 | 0.076 | 11 | 34 | 0.9978 | 3.51 | 0.56 | 9.4 | 5 |
| 7.8 | 0.88 | 0 | 2.6 | 0.098 | 25 | 67 | 0.9968 | 3.2 | 0.68 | 9.8 | 5 |
| 7.8 | 0.76 | 0.04 | 2.3 | 0.092 | 15 | 54 | 0.997 | 3.26 | 0.65 | 9.8 | 5 |
| 11.2 | 0.28 | 0.56 | 1.9 | 0.075 | 17 | 60 | 0.998 | 3.16 | 0.58 | 9.8 | 6 |
| 7.4 | 0.7 | 0 | 1.9 | 0.076 | 11 | 34 | 0.9978 | 3.51 | 0.56 | 9.4 | 5 |

The head() function is used to get the first n rows. By default, it returns the first 5 rows of the DataFrame.

Now that we have loaded our data, we can move on to training our XGBoost model.

Training XGBoost With The Scikit-Learn API

XGBoost integrates smoothly with the Scikit-Learn library, which provides a consistent API for many different machine learning algorithms in Python.

Before we start training, we need to prepare our data.

We’ll separate our target variable (quality) from the rest of the dataset and split the data into training and test sets.

The original quality column contains values from 3 to 8, so we need to convert it to a binary variable.

Let’s consider every wine with a quality score of 7 or higher as ‘good’ (1) and the rest as ‘not good’ (0).

```python
from sklearn.model_selection import train_test_split

# Separate target variable
X = data.drop('quality', axis=1)
y = data['quality'].map(lambda x: 1 if x >= 7 else 0)

# Split the data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
```
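The .map(lambda ...) conversion can also be written as a vectorized comparison, which is usually faster on large columns. This sketch uses a small hypothetical Series in place of data['quality']:

```python
import pandas as pd

# Hypothetical quality scores standing in for data['quality']
quality = pd.Series([3, 5, 6, 7, 8, 6])

# Vectorized equivalent of .map(lambda x: 1 if x >= 7 else 0)
y_toy = (quality >= 7).astype(int)
print(y_toy.tolist())  # [0, 0, 0, 1, 1, 0]
```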

Now, let’s train our XGBoost model.

We’ll use XGBClassifier, the classifier class that XGBoost provides for the Scikit-Learn API.

```python
from xgboost import XGBClassifier

# Create an instance of the XGBClassifier
xgb_classifier = XGBClassifier(objective='binary:logistic')

# Fit the model to the training data
xgb_classifier.fit(X_train, y_train)
```

If you have used Scikit-Learn before, this should look very familiar.

The fit() method trains the model on the training data.

We pass the features (X_train) and the target (y_train) as arguments to this method.

There are many hyperparameters you can tune to improve the performance of your model, like the number of trees, the maximum depth of the trees, the learning rate, and many others.

In this example, we used the default hyperparameters.

Now that our model is trained, we can use it to make predictions.

Making Predictions

There are two ways to make predictions using XGBoost that you should be aware of:

Class Predictions

If you want to predict the class of each instance directly, using a threshold of 0.5, use the predict() method.

```python
# Make class predictions
y_pred = xgb_classifier.predict(X_test)
```

```
array([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, ...])
```

In this case, every instance with a predicted probability greater than 0.5 is classified as ‘good’ (1) and the rest as ‘not good’ (0).

Predicting Probabilities

Most of the time, we want to know the probability that an instance belongs to each class, so that we can choose a different threshold or use the probabilities directly downstream.

Let’s say we are predicting whether a stock will go up or down.

A portfolio manager might plug these probabilities into an expected value formula to decide whether to buy or sell the stock.

The predict_proba() method returns the probabilities for each class.

```python
# Predict probabilities
y_pred_proba = xgb_classifier.predict_proba(X_test)
```

```
array([[9.98416662e-01, 1.58336537e-03],
       [9.99976218e-01, 2.38024204e-05],
       [9.98861015e-01, 1.13899994e-03],
       [9.99696195e-01, 3.03776585e-04],
       ...])
```

The left column is the probability that the instance belongs to the negative class (0) and the right column is the probability that it belongs to the positive class (1).

In the next section, we’ll evaluate the performance of our model.

This will give us an idea of how well our model is doing.

Evaluating XGBoost Model Performance

Evaluating the performance of a model is a crucial step in machine learning.

It helps us understand how well our model is doing and where it can be improved.

Let’s look at some of the ways we can do it.

The score() Method

This is the simplest way to get an evaluation of your model over a dataset.

For a classifier like XGBClassifier, it returns the mean accuracy on the given test data and labels.

```python
# Calculate accuracy
accuracy = xgb_classifier.score(X_test, y_test)
print("Accuracy: %.2f%%" % (accuracy * 100.0))
```

Using Scikit-learn Evaluation Metrics

Scikit-learn provides several functions to calculate metrics such as precision, recall, F1 score, ROC AUC and Log Loss.

Metrics like log loss and ROC AUC need predicted probabilities rather than class labels, so we use the output of predict_proba().

```python
from sklearn.metrics import log_loss, roc_auc_score

# Calculate log loss
log_loss(y_test, y_pred_proba)

# Calculate ROC AUC
roc_auc_score(y_test, y_pred_proba[:,1])
```

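Notice that in the ROC AUC call we passed y_pred_proba[:,1] instead of y_pred_proba, because for binary problems roc_auc_score() expects only the probabilities of the positive class.

For log loss it makes no difference: log_loss() accepts either the full two-column array or just the positive-class column.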
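To see what log loss measures, this sketch computes the binary formula by hand on a few hypothetical labels and probabilities and compares it with log_loss() called on both input shapes:

```python
import numpy as np
from sklearn.metrics import log_loss

# Hypothetical true labels and predicted positive-class probabilities
y_true = np.array([0, 1, 1, 0, 1])
p_pos = np.array([0.1, 0.8, 0.6, 0.3, 0.9])

# Binary log loss: -mean(y * log(p) + (1 - y) * log(1 - p))
manual = -np.mean(y_true * np.log(p_pos) + (1 - y_true) * np.log(1 - p_pos))

# Two-column layout, like the output of predict_proba()
two_cols = np.column_stack([1 - p_pos, p_pos])

print(manual, log_loss(y_true, p_pos), log_loss(y_true, two_cols))
```

Confident wrong predictions (a probability near 0 for a true positive, say) are penalized heavily because of the logarithm, which is why log loss rewards well-calibrated probabilities rather than just correct labels.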

The classification_report() function builds a text report of the precision, recall, F1-score and support for each class; printing it gives a readable table.

```python
from sklearn.metrics import classification_report

# Print classification report
print(classification_report(y_test, y_pred))
```

Remember, no single metric can tell the whole story.

It’s important to look at multiple metrics and understand what they mean in the context of your specific problem.

Important XGBoost (Hyper)Parameters

XGBoost has several important hyperparameters (sometimes called just parameters in the documentation) that can significantly impact the performance of your model.

Tuning these parameters is crucial to get the most out of XGBoost.

Here are some of the key hyperparameters you should consider:

learning_rate

The learning_rate parameter (called eta in XGBoost’s native API), also known as the step size shrinkage, scales the contribution of each new tree and therefore controls how quickly the model learns.

A smaller learning rate can prevent overfitting, but it may also require more iterations to converge.

A larger learning rate can speed up convergence, but it may also cause the model to miss the optimal solution.

max_depth

The max_depth parameter represents the maximum depth of each decision tree in the ensemble.

A higher value allows the model to learn more complex patterns in the data, but it can also lead to overfitting.

Conversely, a lower value can prevent overfitting but may result in underfitting.

subsample

The subsample parameter represents the fraction of samples used for each decision tree.

By using a subset of the training data for each tree, XGBoost introduces randomness, which can help reduce overfitting and improve generalization.

Keep in mind that XGBoost samples rows without replacement, unlike the bootstrap sampling (with replacement) typically used in random forests.

colsample_bytree

The colsample_bytree parameter represents the fraction of features used for each decision tree.

Similar to subsample, it introduces randomness by using a subset of features for each tree, which can help prevent overfitting and improve generalization.

There are also colsample_bylevel and colsample_bynode, which sample columns at each new depth level and at each split, respectively.

n_estimators

The n_estimators parameter sets the number of boosting rounds (that is, the number of trees) and controls the overall complexity of the model. In the Scikit-Learn API it defaults to 100.

A higher number of estimators combined with a small learning rate often leads to better performance, but it increases computational cost and can raise the risk of overfitting.

And that’s it!

You’ve now learned how to use XGBoost for binary classification in Python.

Happy coding!