I got very excited about a new technique in natural language processing (NLP) called Contrastive Search.

It is a powerful tool that improves the quality of text generated by smaller language models.

In this blog post, I will compare the outputs of contrastive search in a few open-source models with GPT-3.

Finally, I will talk about the implications of this technique and how it could be used in the future. So, let’s get started!

What is Contrastive Search?

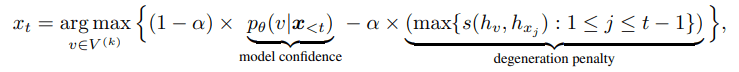

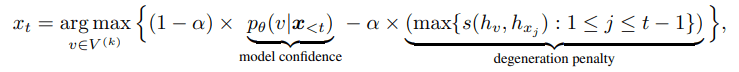

Contrastive search is a decoding method, that is, a way of choosing each next word from a generative model, that encourages the model to be less “greedy” and create more coherent, meaningful sentences.

Instead of always choosing the single most likely next word, contrastive search looks at a handful of the most likely candidates and applies a “degeneration penalty” to the ones that are too similar to the words already generated, which keeps the model from repeating itself.

This allows the model to generate more realistic sentences.
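To make the idea concrete, here is a toy sketch of a single contrastive search step. It follows the scoring rule described in the contrastive search paper: among the k most likely candidates, pick the one with the highest value of (1 - alpha) × probability minus alpha × its largest cosine similarity to the hidden states of the earlier tokens. The function names and the hand-made vectors are illustrative assumptions, not code from any library; a real implementation gets the hidden states from the model itself.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def contrastive_step(probs, cand_hidden, prev_hidden, alpha=0.6, k=4):
    """Pick the next token id for one decoding step (toy version).

    probs:       next-token probability for every token id
    cand_hidden: hidden state each token would get if it were appended (assumed given)
    prev_hidden: hidden states of the tokens generated so far
    """
    # Keep only the k most likely candidates.
    top_k = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]

    def score(token):
        # Degeneration penalty: similarity to the closest previous token.
        penalty = max(cosine(cand_hidden[token], h) for h in prev_hidden)
        return (1 - alpha) * probs[token] - alpha * penalty

    return max(top_k, key=score)


# Token 0 is the most likely, but its hidden state duplicates the context.
probs = [0.5, 0.4, 0.1]
cand_hidden = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
prev_hidden = [[1.0, 0.0]]

greedy_like = contrastive_step(probs, cand_hidden, prev_hidden, alpha=0.0, k=2)
contrastive = contrastive_step(probs, cand_hidden, prev_hidden, alpha=0.6, k=2)
print(greedy_like, contrastive)  # prints: 0 1
```

With alpha set to 0 the penalty disappears and the step behaves like greedy search; with alpha at 0.6 the slightly less likely but less repetitive token wins.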

As demonstrated in the paper, this method yields more successful results than simply relying on a greedy search.

This can be extremely useful when you can’t fit a giant model in your GPUs.

Smaller language models typically have a smaller capacity, which can lead to oversimplified predictions.

By using contrastive search, the model can be encouraged to think more broadly and create more meaningful sentences.

The drawback is that it takes longer to generate completions.
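If you want to measure that slowdown yourself, a small timing helper is enough. The sketch below is an assumption-laden example: `time_generation` is a hypothetical helper, the greedy and top-k settings are illustrative baselines, and `penalty_alpha` plus `top_k` is how the HuggingFace `generate` method selected contrastive search in the library versions available when this post was written (newer releases may expose it differently).

```python
import time

# Keyword arguments for `model.generate`, one entry per decoding strategy.
# penalty_alpha=0.6 and top_k=4 are the contrastive search values used in this post.
DECODING_CONFIGS = {
    "greedy": {"do_sample": False},
    "top_k_sampling": {"do_sample": True, "top_k": 50},
    "contrastive_search": {"penalty_alpha": 0.6, "top_k": 4},
}


def time_generation(generate_fn, configs=DECODING_CONFIGS, **common_kwargs):
    """Call generate_fn once per config and return {name: (seconds, output)}."""
    results = {}
    for name, kwargs in configs.items():
        start = time.perf_counter()
        output = generate_fn(**common_kwargs, **kwargs)
        results[name] = (time.perf_counter() - start, output)
    return results
```

With a loaded model, it could be called as `time_generation(model.generate, input_ids=input_ids, max_length=200)`.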

Experiments

I rented a big g4dn.metal machine on AWS to try a few open-source text generation models and compare them to GPT-3.

My goal was to see if a smaller model could generate text of similar quality to GPT-3’s if the generation used a different decoding technique.

I tried it with two prompts:

Prompt 1: “Can dogs eat chocolate?”

I recently listened to a podcast about content marketing where the interviewee said he asked new writers to write a 150-word article answering the question “Can dogs eat chocolate?”.

This is a simple question that can be answered with very little research. With this, he evaluated whether the writer went straight to the point or added too much fluff to the article.

As a prompt, it has no instructions, which may harm the results, but being a very simple question that is extremely likely to be in the training set of the models, I thought it would be a good test.

Prompt 2: “Write a comprehensive, detailed, in-depth, clear answer to the following question: What could be the reason why a neural network predicts only one class for a multiclass problem, even though it has many classes in its training data set?”

I once saw this question on Quora and played with GPT-3 to answer it. It has 49 views.

GPT-3 (davinci-002) didn’t give the full answer in a single attempt.

Anyway, as it’s a niche question, much harder than knowing if dogs can eat chocolate, I wanted to try and see how close the models could get to a good answer.

I used HuggingFace to run all the models and the completions are italicized.

OPT-30B

OPT-30B is one of eight large language models trained and open-sourced by Meta AI.

The models were trained using the AdamW optimizer with a linear learning rate schedule and dropout.

The pre-training corpus consisted of a concatenation of datasets from RoBERTa, the Pile, and PushShift.io Reddit, and was tokenized using the GPT-2 byte level BPE tokenizer.

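    import torch
    from transformers import AutoTokenizer, OPTForCausalLM

    model_name = 'facebook/opt-30b'
    prompt = 'Can dogs eat chocolate?'

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    # Assumption: the original snippet did not show how the model was placed on the GPUs.
    # Here it is sharded across them in half precision (needs the accelerate package).
    model = OPTForCausalLM.from_pretrained(model_name, device_map='auto', torch_dtype=torch.float16)

    token_input = tokenizer(prompt, return_tensors='pt')
    input_ids = token_input['input_ids'].cuda()
    attention_mask = token_input['attention_mask'].cuda()
    pad_token_id = tokenizer.eos_token_id

    # penalty_alpha > 0 together with top_k > 1 selects contrastive search
    output = model.generate(input_ids, attention_mask=attention_mask, pad_token_id=pad_token_id, max_length=200, penalty_alpha=0.6, top_k=4)

    print("Output:\n" + 100 * '-')
    print(tokenizer.decode(output[0], skip_special_tokens=True))
    print(100 * '-')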

Can dogs eat chocolate?

Chocolate contains caffeine, which is toxic to dogs. The amount of caffeine in chocolate is enough to cause vomiting, tremors, restlessness, hyperactivity, increased heart rate (tachycardia), seizures, and in severe cases, death. So how much chocolate is too much? According to Pet Poison Helpline, a dog would need to eat at least 1.5 ounces of chocolate to be at risk for toxicity. That’s about the size of a Hershey’s Kiss. If your dog eats chocolate, call your veterinarian immediately. The American Kennel Club recommends taking your dog to the vet within 30 minutes of ingesting chocolate. What should you do if your dog eats chocolate? Pet Poison Helpline recommends the following steps to prevent chocolate poisoning: Keep chocolate out of reach of pets. Do not give chocolate to pets under any circumstances. If you suspect your pet has eaten chocolate, call your veterinarian immediately

I don’t know about the accuracy of the answer (beyond the fact that dogs can’t eat chocolate), but I like it.

Write a comprehensive, detailed, in-depth, clear answer to the following question: What could be the reason why a neural network predicts only one class for a multiclass problem, even though it has many classes in its training data set?\nANSWER:

The problem is that the network is overfitting to the training data.

As I expected, the answer to this specific niche question is bad.

GPT-NeoX-20B

GPT-NeoX-20B is a large language model trained and open-sourced by EleutherAI that largely follows the architecture of GPT-3.

It uses rotary positional embeddings instead of the learned positional embeddings used in the GPT models.

It was trained on the Pile dataset.

Can dogs eat chocolate?

Dogs can eat chocolate, but it’s not recommended.

Chocolate contains theobromine, which is a stimulant that can cause vomiting, diarrhea, restlessness, panting, hyperactivity, tremors, seizures, and even death.

Theobromine is found in all chocolate, but the darker the chocolate, the more theobromine it contains.

If your dog eats chocolate, call your veterinarian immediately.

How do I know if my dog has eaten chocolate?

If your dog has eaten chocolate, you may notice vomiting, diarrhea, restlessness, panting, hyperactivity, tremors, seizures, or even death.

What should I do if my dog has eaten chocolate?

If your dog has eaten chocolate, call your veterinarian immediately.

How can I prevent my dog from eating chocolate?

Keep your dog away from chocolate.

Just like with OPT, Contrastive Search gives a higher-quality completion, although it takes much longer to generate than Top K sampling.

It could be used as the starting point for writing an article.

Write a comprehensive, detailed, in-depth, clear answer to the following question: What could be the reason why a neural network predicts only one class for a multiclass problem, even though it has many classes in its training data set?\nANSWER:

The neural network is overfitting the training data. The neural network is underfitting the training data. The neural network is not learning the training data. The neural network is not able to learn the training data.

A:

I think the answer is:

The neural network is overfitting the training data.

In other words, the neural network is learning the training data too well. This is a common problem with neural networks. You can try to fix it by adding regularization to the cost function, or by using a different activation function.

Nonsense answers.

OPT-66B

The same architecture as OPT-30B, but with 66 billion parameters.

For this model, I had to limit the output to 100 tokens because 200 blew up the memory of the GPUs.
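A rough back-of-the-envelope calculation shows why memory gets tight. The sketch below only estimates the space taken by the weights at a few common precisions; the `weights_gib` helper is hypothetical, and the precision options are an assumption for illustration, since the memory actually used depends on how the model is loaded. On top of the weights, the attention cache and activations grow with the number of tokens, which is why a shorter output helps.

```python
def weights_gib(n_params: float, bytes_per_param: int) -> float:
    """Memory needed to hold the model weights alone, in GiB."""
    return n_params * bytes_per_param / 2**30


# Parameter counts from the model names; precisions are illustrative.
for name, n_params in [("OPT-30B", 30e9), ("OPT-66B", 66e9)]:
    for label, nbytes in [("fp32", 4), ("fp16", 2), ("int8", 1)]:
        print(f"{name} in {label}: about {weights_gib(n_params, nbytes):.0f} GiB")
```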

Can dogs eat chocolate?

Dogs can eat chocolate, but it’s not good for them. (Photo: kzenon, Getty Images/iStockphoto) Chocolate is a treat for humans, but not for dogs. Theobromine, a stimulant found in cacao, can cause vomiting, diarrhea, hyperactivity in dogs and, in extreme cases, death. The American Kennel Club recommends keeping chocolate away from dogs, but there are times when you might

I like the answer from the smaller OPT model better, but it’s not completely comparable as I had to limit the output for this model.

Write a comprehensive, detailed, in-depth, clear answer to the following question: What could be the reason why a neural network predicts only one class for a multiclass problem, even though it has many classes in its training data set?\nANSWER:

The problem is that there is no multiclass problem in the training data set. Explanation: A neural network is a set of layers that process data in a hierarchical manner. Each layer in the network is responsible for a

Wrong, nonsense answer.

FLAN-T5-XXL

Flan-T5 is a large language model trained and open-sourced by Google.

It is a version of T5 that was fine-tuned to follow instructions across a large collection of tasks such as summarization, translation, and sentiment analysis.

Can dogs eat chocolate?

No

Very brief, but the correct answer.

If we expand the prompt to “Answer the question: Can dogs eat chocolate?” it generates:

no

Now for the neural network question:

Write a comprehensive, detailed, in-depth, clear answer to the following question: What could be the reason why a neural network predicts only one class for a multiclass problem, even though it has many classes in its training data set?\nANSWER:

The neural network is a generalization error.

Terrible.

So let’s compare the answers that multiple versions of GPT-3 (davinci-002, davinci-003, and ChatGPT) generate for these prompts.

GPT-3 davinci-002

GPT-3 is a large-scale language model developed by OpenAI.

The “davinci-002” part of the name refers to the specific version of the model that was improved over the original davinci model.

Can dogs eat chocolate?

No, dogs should not eat chocolate.

Brief but correct answer. With a better prompt and adjusted parameters, we should get a more complete answer, closer to what OPT and GPT-NeoX produced with Contrastive Search.

Write a comprehensive, detailed, in-depth, clear answer to the following question: What could be the reason why a neural network predicts only one class for a multiclass problem, even though it has many classes in its training data set?\nANSWER:

There could be several reasons why a neural network predicts only one class for a multiclass problem, even though it has many classes in its training data set. One possibility is that the neural network was not trained properly and is not able to learn the complex relationships between the input features and the output class labels. Another possibility is that the neural network is overfitting on the training data and is not able to generalize to new data. Finally, it could be that the data set itself is too small or too simple to allow the neural network to learn the necessary relationships.

This is the best answer compared to the other language models, although still not what I would consider satisfactory.

Still, it seems that we need bigger models like GPT-3 to have knowledge about more niche areas like neural networks.

GPT-3 davinci-003

This is the newest model by OpenAI.

It’s the most significant improvement that I have seen since I started using the API.

One of the improvements is the “context window” which allows the model to take longer prompts into account when generating text.

And it seems to have been fine-tuned with reinforcement learning to generate more coherent text.

Can dogs eat chocolate?

No, dogs should not eat chocolate. Chocolate contains a compound called theobromine, which is toxic to dogs and can cause vomiting, diarrhea, increased thirst, increased urination, hyperactivity, abnormal heart rhythm, and even seizures.

Write a comprehensive, detailed, in-depth, clear answer to the following question: What could be the reason why a neural network predicts only one class for a multiclass problem, even though it has many classes in its training data set?\nANSWER:

There could be several reasons why a neural network may predict only one class for a multiclass problem.

Firstly, if the training dataset is not balanced, i.e., it contains many more instances of one class than the other classes, then the neural network may be biased towards that one class.

Secondly, if the training dataset contains noisy or irrelevant data, then the neural network may become confused and end up predicting only one class.

Thirdly, if the data is not properly preprocessed, then the neural network may be unable to learn the patterns in the data and hence end up predicting only one class.

Finally, if the neural network is not properly trained, either due to insufficient training data or poor hyperparameter settings, then it may not be able to accurately learn the patterns in the data and hence may be limited in its ability to accurately predict multiple classes.

davinci-003 shows a clear improvement in both answers!

ChatGPT

As I was writing this article, OpenAI released the first version of ChatGPT, so I asked it the same questions:

Can dogs eat chocolate?

No, dogs should not eat chocolate. Chocolate is toxic to dogs and can cause serious health problems, including vomiting, diarrhea, and in severe cases, death. Chocolate contains theobromine and caffeine, which are toxic to dogs and can affect their heart and nervous system. If your dog has eaten chocolate, it is important to contact your veterinarian immediately for advice.

This time I asked the Quora question plainly, without instructions to write a detailed answer.

What could be the reason why a neural network predicts only one class for a multiclass problem, even though it has many classes in its training data set?

There could be several reasons why a neural network might predict only one class for a multiclass problem. Some possible reasons include:

The training data may be imbalanced, with a disproportionate number of examples belonging to a single class. This can cause the model to over-predict that class, as it will be more familiar with it.

The model may be overfitting to the training data, meaning that it has learned to predict the training examples perfectly, but does not generalize well to new examples. This can happen if the model is too complex for the amount of training data, or if the training process is stopped too early.

The model may not have been trained for long enough or with enough data to learn to distinguish between the different classes. Neural networks require a large amount of data and computational power to learn effectively, so it is important to make sure that the training process is properly set up.

The model may not be using the right architecture or hyperparameters for the problem. Different neural network architectures and hyperparameters can have a big impact on the model’s performance, so it is important to choose the right ones for the task at hand.

Overall, there are many possible reasons why a neural network might predict only one class for a multiclass problem. It is important to carefully analyze the data, the training process, and the model itself to identify the cause and find a solution.

No comments. Just 🤯.

GPT-3 Wins

I think it’s pretty clear that Contrastive Search is an improvement on the completions generated by smaller models, but GPT-3 still wins.

It’s a no-brainer if you consider the hassle of setting up all the libraries and code to run these models on your own machine.

HuggingFace saves a lot of work, but GPT-3 is a simple API call.

The cost to run GPT-3’s largest model is 0.02 USD per 1,000 tokens, which is much less than what you will spend running GPUs in the cloud.

That said, running your own model can be cost-effective if you have a large GPU lying around unused and only need simple completions.
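To put numbers on that trade-off, here is a minimal sketch of the arithmetic. The 0.02 USD per 1,000 tokens price is the one quoted above; the hourly GPU rate in the example is a made-up placeholder, so plug in what your own machine actually costs.

```python
def api_cost_usd(tokens: int, usd_per_1k_tokens: float = 0.02) -> float:
    """Cost of generating `tokens` tokens through the API."""
    return tokens / 1000 * usd_per_1k_tokens


def break_even_tokens_per_hour(gpu_usd_per_hour: float, usd_per_1k_tokens: float = 0.02) -> float:
    """Tokens per hour a rented GPU must produce to match the API price."""
    return gpu_usd_per_hour / usd_per_1k_tokens * 1000


# Hypothetical rate of 8 USD/hour for a large cloud GPU machine.
hypothetical_gpu_rate = 8.0
print(f"1M tokens via API: {api_cost_usd(1_000_000):.2f} USD")
print(f"Break-even: {break_even_tokens_per_hour(hypothetical_gpu_rate):,.0f} tokens/hour")
```

If your machine generates fewer tokens per hour than the break-even figure, the API is the cheaper option.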

There is the additional benefit that the OpenAI team is always studying and optimizing the models and API to generate cohesive and safe text, based on large amounts of data and client feedback.

ChatGPT is the latest iteration, and it’s mind-blowing!

Now, what if ChatGPT/GPT 3.x used Contrastive Search?

That would be very interesting.

Maybe the improvements we are seeing with ChatGPT are partly a result of better search strategies during text generation.

If you still want to run a smaller model locally, I would go with EleutherAI models and contrastive search.