In general, no.

Decision trees are not sensitive to feature scaling because their splits depend only on the order of the values within each feature, and any strictly increasing (monotonic) transformation preserves that order.

Normalization (rescaling each sample to unit norm) is not necessary either, but it can change your results because it does not preserve the order of the values within a feature, as we’ll see later.

That said, the numerical implementation of a specific library may make your decision tree predictions change slightly depending on whether you scale or normalize your data.

This is usually a very small change that you don’t need to worry about, but it’s good to know about if you ever need to explain why your predictions are different.

Other machine learning algorithms based on decision trees, like XGBoost, LightGBM, and Random Forests, follow the same rule.

Why Decision Trees Are Not Sensitive To Monotonic Transformations

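A monotonic transformation is a transformation that preserves the order of the values.

Examples are the rank transformation and, for non-negative values, the square transformation. (Squaring reverses the order of negative numbers, so it is only monotonic when all values are zero or greater.)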

Imagine we have the following temperature data:

import numpy as np

data = np.array([2.1, 2.3, 2.5, 2.7, 2.9, 3.1, 3.3, 3.5, 3.7, 3.9])

Imagine that a very simple, single-split decision tree decides that the best split is at 3.1.

Everything below 3.1 belongs to the negative class and everything at or above it belongs to the positive class.

The predictions would be:

predictions = np.array([0, 0, 0, 0, 0, 1, 1, 1, 1, 1])

Now if we apply a square transformation to the original data, we get:

data = data**2

np.array([ 4.41, 5.29, 6.25, 7.29, 8.41, 9.61, 10.89, 12.25, 13.69, 15.21])

The best split would be at 9.61. Although the split is numerically different, the predictions are the same:

predictions = np.array([0, 0, 0, 0, 0, 1, 1, 1, 1, 1])

It would be the same case with a rank transformation.

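# argsort alone returns the indices that sort the array, not the ranks;

# applying it twice gives the rank of each value (0 = smallest)

data = np.argsort(np.argsort(data))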

np.array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])

The best split would be at 5 but, again, the predictions would be the same:

predictions = np.array([0, 0, 0, 0, 0, 1, 1, 1, 1, 1])
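The invariance described above can be checked with a short sketch. It assumes scikit-learn's DecisionTreeClassifier limited to a single split, and the labels array is a hypothetical target chosen to match the split in the example. Note that scikit-learn places the threshold at the midpoint between two neighboring values, so the reported thresholds will not be exactly 3.1, 9.61, and 5, but the predictions should still agree.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier

data = np.array([2.1, 2.3, 2.5, 2.7, 2.9, 3.1, 3.3, 3.5, 3.7, 3.9])
# Hypothetical labels that match the split used in the text
labels = np.array([0, 0, 0, 0, 0, 1, 1, 1, 1, 1])


def stump_predictions(feature, target):
    """Fit a single-split tree on one feature and predict on the same values."""
    X = feature.reshape(-1, 1)
    stump = DecisionTreeClassifier(max_depth=1, random_state=42)
    stump.fit(X, target)
    # tree_.threshold[0] is the threshold learned at the root node
    return stump.predict(X), stump.tree_.threshold[0]


pred_raw, thr_raw = stump_predictions(data, labels)
pred_sq, thr_sq = stump_predictions(data ** 2, labels)

# argsort applied twice converts values into ranks
ranks = np.argsort(np.argsort(data)).astype(float)
pred_rank, thr_rank = stump_predictions(ranks, labels)

print("thresholds:", thr_raw, thr_sq, thr_rank)
print("same predictions (square):", np.array_equal(pred_raw, pred_sq))
print("same predictions (rank):", np.array_equal(pred_raw, pred_rank))
```

The thresholds differ because they live on different scales, while the partition of the samples stays the same.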

Comparing Decision Trees With And Without Feature Scaling

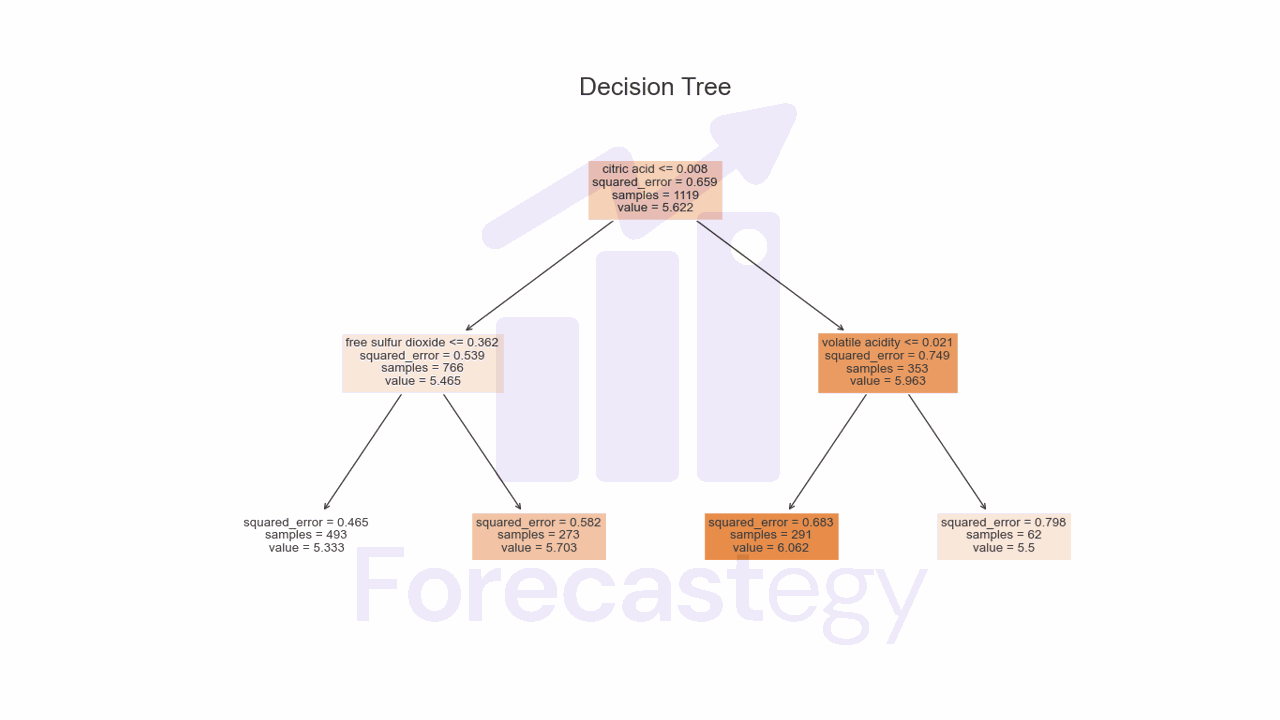

Let’s use the Red Wine Quality dataset to compare the predictions of a decision tree with and without feature scaling.

This dataset contains samples of chemical properties of red wines and their quality.

The idea is to predict the quality of a wine based on its chemical properties.

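import os

import pandas as pd

from sklearn.model_selection import train_test_split

# path is the directory that contains the CSV file; set it for your environment

data = pd.read_csv(os.path.join(path, "winequality-red.csv"))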

X = data.drop(columns=["quality"])

y = data["quality"]

X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.3, random_state=42)

| | fixed acidity | volatile acidity | citric acid | residual sugar | chlorides | free sulfur dioxide | total sulfur dioxide | density | pH | sulphates | alcohol | quality |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 7.4 | 0.7 | 0 | 1.9 | 0.076 | 11 | 34 | 0.9978 | 3.51 | 0.56 | 9.4 | 5 |
| 1 | 7.8 | 0.88 | 0 | 2.6 | 0.098 | 25 | 67 | 0.9968 | 3.2 | 0.68 | 9.8 | 5 |
| 2 | 7.8 | 0.76 | 0.04 | 2.3 | 0.092 | 15 | 54 | 0.997 | 3.26 | 0.65 | 9.8 | 5 |
| 3 | 11.2 | 0.28 | 0.56 | 1.9 | 0.075 | 17 | 60 | 0.998 | 3.16 | 0.58 | 9.8 | 6 |
| 4 | 7.4 | 0.7 | 0 | 1.9 | 0.076 | 11 | 34 | 0.9978 | 3.51 | 0.56 | 9.4 | 5 |

After splitting the data into training and validation sets, we train a decision tree.

We’ll create it using the DecisionTreeRegressor class from scikit-learn.

We’ll set the random_state parameter to 42 to make our results reproducible.

from sklearn.tree import DecisionTreeRegressor

tree = DecisionTreeRegressor(random_state=42)

tree.fit(X_train, y_train)

p = tree.predict(X_val)

The variable p stores the predictions of the tree trained without feature scaling.

Now we’ll scale the data using the StandardScaler class from scikit-learn.

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train)

X_val_scaled = scaler.transform(X_val)

tree = DecisionTreeRegressor(random_state=42)

tree.fit(X_train_scaled, y_train)

p_scaled = tree.predict(X_val_scaled)

The StandardScaler class scales the data by subtracting the mean from each feature and dividing by the standard deviation.
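As a quick sanity check of that description, the sketch below reproduces the transformation by hand with NumPy and compares it with StandardScaler. The small array reuses the fixed acidity, volatile acidity, and alcohol values from the first four rows of the table above. The variable names are illustrative only.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# fixed acidity, volatile acidity, alcohol for the first four wines
X_small = np.array([
    [7.4, 0.70, 9.4],
    [7.8, 0.88, 9.8],
    [7.8, 0.76, 9.8],
    [11.2, 0.28, 9.8],
])

# Manual standardization: subtract the column mean, divide by the column
# standard deviation (NumPy's default ddof=0, the population estimate)
manual = (X_small - X_small.mean(axis=0)) / X_small.std(axis=0)

scaled = StandardScaler().fit_transform(X_small)

print(np.allclose(manual, scaled))
```

Because every value in a column is shifted by the same amount and divided by the same positive number, the order of the values within each column cannot change.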

To see if the predictions are different, we can take their averages.

p.mean(), p_scaled.mean()

In this case, the first value is 5.71875 and the second is 5.72083.

So there is a tiny, insignificant difference between the predictions of the two trees. Standardization preserves the order of every feature, so the difference most likely comes from the way computers handle numbers: floating-point rounding can change which of two equally good splits is chosen.

This doesn’t mean you should always scale your data before training a decision tree. There are more important factors to worry about, like the quality of the features and the size of the dataset.
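Comparing means can hide where the predictions actually differ, so a per-sample comparison is often more informative. The helper below is a hypothetical sketch (prediction_diff is not part of any library), and the two demo arrays are made-up values rather than the wine predictions.

```python
import numpy as np


def prediction_diff(a, b):
    """Summarize how two prediction vectors differ, sample by sample."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    differs = ~np.isclose(a, b)
    return {
        "n_different": int(differs.sum()),
        "max_abs_diff": float(np.abs(a - b).max()),
        "mean_diff": float(a.mean() - b.mean()),
    }


# Made-up predictions for illustration only
p_demo = np.array([5.0, 6.0, 5.0, 7.0])
p_scaled_demo = np.array([5.0, 6.0, 6.0, 7.0])

summary = prediction_diff(p_demo, p_scaled_demo)
print(summary)
```

Calling prediction_diff(p, p_scaled) on the real predictions would show how many validation samples are responsible for the gap between the two means.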

Comparing Decision Trees With And Without Normalization

Normalization is a type of feature scaling that makes each sample have a unit norm.

It calculates the norm of each sample and divides each feature by it.
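A minimal sketch of that calculation, assuming the L2 (Euclidean) norm, which is the default of scikit-learn's Normalizer. The toy array is made up for illustration.

```python
import numpy as np
from sklearn.preprocessing import Normalizer

# Three made-up samples with two features each
X_toy = np.array([
    [3.0, 4.0],
    [6.0, 8.0],
    [1.0, 0.0],
])

# L2 norm of each row (sample), kept as a column so it broadcasts
row_norms = np.linalg.norm(X_toy, axis=1, keepdims=True)
manual = X_toy / row_norms

from_sklearn = Normalizer().fit_transform(X_toy)

print(manual)
print(np.allclose(manual, from_sklearn))
```

The first two samples end up identical after normalization: the operation keeps the direction of each sample but discards its magnitude, which is why it can change what a tree sees.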

Let’s use scikit-learn’s Normalizer class to check its effect on the predictions of a decision tree.

from sklearn.preprocessing import Normalizer

normalizer = Normalizer()

X_train_norm = normalizer.fit_transform(X_train)

X_val_norm = normalizer.transform(X_val)

tree = DecisionTreeRegressor(random_state=42)

tree.fit(X_train_norm, y_train)

p_norm = tree.predict(X_val_norm)

p_norm.mean()

The mean of the predictions is 5.69375, which is different from both of the previous values.

I would be more careful about this transformation, because it’s not monotonic: each value is divided by the norm of its own row, so two samples can swap places within a feature.

If you compare the ranks of the values within each feature before and after normalization, you’ll see that the order is different.

X_train_norm = pd.DataFrame(X_train_norm, columns=X_train.columns, index=X_train.index)

np.allclose(X_train.rank(), X_train_norm.rank())

False

Doing the same for the standardized data shows that the order of the values within each feature did not change:

X_train_scaled = pd.DataFrame(X_train_scaled, columns=X_train.columns, index=X_train.index)

np.allclose(X_train.rank(), X_train_scaled.rank())

True
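To see why normalization can reorder a feature, here is a toy sketch with two made-up samples. Each row is divided by its own L2 norm, so a row with one very large feature shrinks its other features much more.

```python
import numpy as np

# Two made-up samples; the second has a much larger second feature
X_pair = np.array([
    [1.0, 10.0],
    [2.0, 100.0],
])

# Row-wise L2 normalization, the same operation Normalizer applies by default
X_pair_norm = X_pair / np.linalg.norm(X_pair, axis=1, keepdims=True)

# Compare the order of the first feature before and after
before = bool(X_pair[0, 0] < X_pair[1, 0])
after = bool(X_pair_norm[0, 0] < X_pair_norm[1, 0])

print("first sample smaller before normalization:", before)
print("first sample smaller after normalization:", after)
```

A split on the first feature that separated these two samples one way before normalization would separate them the other way afterward, so the tree can end up with a different structure.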

My advice is to not worry about using feature scaling or normalization for decision trees.

It’s just another step you will have to implement and maintain in production, and for trees it doesn’t have a significant impact on the results.

It’s a different story if you are using a distance-based algorithm like k-nearest neighbors, or a model trained with gradient descent, such as a neural network or a linear regression fitted that way.