Feature scaling and normalization are not strictly required for Support Vector Machines (SVMs), but they are highly recommended, as they can significantly improve model performance and convergence speed.

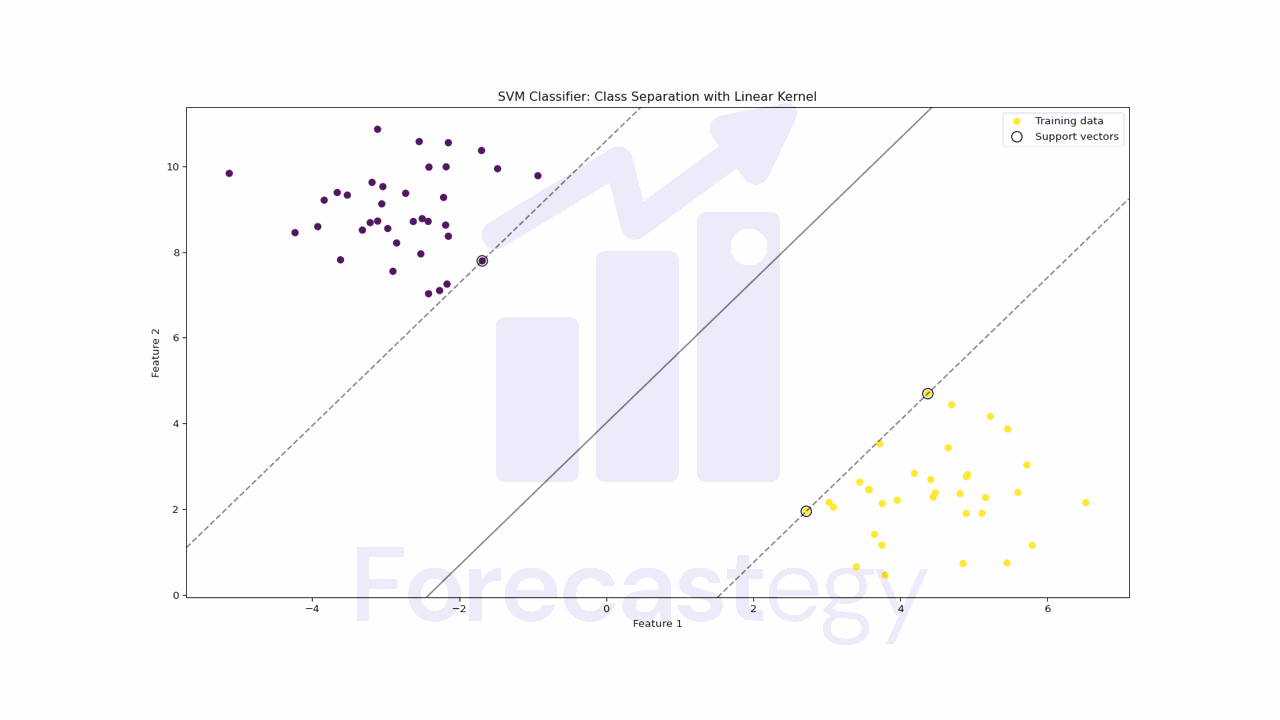

SVM tries to find the optimal hyperplane that separates the data points of different classes with the maximum margin.

If the features are on different scales, the features with larger values dominate the distance and dot-product calculations that the SVM relies on, so the hyperplane is heavily influenced by them, potentially leading to suboptimal results.
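To make this concrete, here is a minimal sketch using only two features, total sulfur dioxide and pH, with values taken from the first two rows of the Red Wine table shown later in this tutorial. It relies on the fact that SVC uses an RBF kernel by default, which depends on the squared Euclidean distance between samples. The variable names are illustrative only.

```python
import numpy as np

# Two wines described by [total sulfur dioxide, pH]
wine_a = np.array([34.0, 3.51])
wine_b = np.array([67.0, 3.20])

# Contribution of each feature to the squared Euclidean distance
squared_diff = (wine_a - wine_b) ** 2
share = squared_diff / squared_diff.sum()

print("Squared differences:", squared_diff)
print("Share of squared distance per feature:", share)
```

Almost all of the distance comes from total sulfur dioxide simply because its values are larger, so without scaling the pH difference has practically no influence on the kernel.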

In this tutorial, we will explore the impact of feature scaling and normalization on SVM’s performance using the Red Wine dataset as an example.

Loading the Dataset

The Red Wine dataset is a popular dataset used to study classification and regression tasks in machine learning.

It contains data about chemical properties of red wine, such as acidity, pH, alcohol content, and the quality of the wine.

The goal is to use these characteristics to predict the quality of each wine. We treat this as a classification problem in which each integer quality score is its own class.

import pandas as pd

url = "https://archive.ics.uci.edu/ml/machine-learning-databases/wine-quality/winequality-red.csv"

wine_data = pd.read_csv(url, sep=";")

| fixed acidity | volatile acidity | citric acid | residual sugar | chlorides | free sulfur dioxide | total sulfur dioxide | density | pH | sulphates | alcohol | quality |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 7.4 | 0.7 | 0 | 1.9 | 0.076 | 11 | 34 | 0.9978 | 3.51 | 0.56 | 9.4 | 5 |
| 7.8 | 0.88 | 0 | 2.6 | 0.098 | 25 | 67 | 0.9968 | 3.2 | 0.68 | 9.8 | 5 |
| 7.8 | 0.76 | 0.04 | 2.3 | 0.092 | 15 | 54 | 0.997 | 3.26 | 0.65 | 9.8 | 5 |
| 11.2 | 0.28 | 0.56 | 1.9 | 0.075 | 17 | 60 | 0.998 | 3.16 | 0.58 | 9.8 | 6 |
| 7.4 | 0.7 | 0 | 1.9 | 0.076 | 11 | 34 | 0.9978 | 3.51 | 0.56 | 9.4 | 5 |

We split the dataset into features and labels, and then split the data into training and test sets.

from sklearn.model_selection import train_test_split

X = wine_data.drop('quality', axis=1)

y = wine_data['quality']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)
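The split above is purely random. Since the quality classes are not equally common, one option worth considering is a stratified split, which keeps the class proportions the same in the training and test sets. The sketch below uses synthetic data (`X_demo`, `y_demo` are made-up stand-ins, not the wine data) to show the effect of the `stratify` argument.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the wine data: 200 samples, 3 imbalanced classes
rng = np.random.default_rng(42)
X_demo = rng.normal(size=(200, 4))
y_demo = np.array([5] * 120 + [6] * 60 + [7] * 20)

# stratify=y_demo keeps the class proportions the same in both splits
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.3, random_state=42, stratify=y_demo
)

for label in np.unique(y_demo):
    print(label,
          "train share:", round(float(np.mean(y_tr == label)), 2),
          "test share:", round(float(np.mean(y_te == label)), 2))
```

The accuracy figures reported in this tutorial come from the non-stratified split, so they would likely change if stratification were applied.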

SVM Without Feature Scaling

First, we train an SVM model without feature scaling to use as a baseline.

from sklearn.svm import SVC

from sklearn.metrics import accuracy_score

svm_clf = SVC(random_state=42)

svm_clf.fit(X_train, y_train)

y_pred_test_svm = svm_clf.predict(X_test)

accuracy_test_svm = accuracy_score(y_test, y_pred_test_svm)

print("SVM - without feature scaling")

print("Test accuracy:", accuracy_test_svm)

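The code above first imports the necessary classes and functions, then creates an instance of the SVC class with its default settings (an RBF kernel). The random_state argument only affects SVC when probability estimates are enabled, so here it is harmless but has no effect.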

It fits the model to the training data and makes predictions for the test set.

Finally, it calculates and prints the accuracy score to evaluate the model’s performance.

The accuracy is 50.42%, which is better than random but not great.

A logistic regression model gets about 56.25% accuracy on this dataset, so I would expect an SVM model to perform better.

So, let’s try to improve the performance by scaling the features.

SVM With Feature Scaling

Let’s use the StandardScaler class from scikit-learn to scale the features.

This class standardizes the features by subtracting the mean and dividing by the standard deviation, which makes the features have a mean of 0 and a standard deviation of 1.

It’s one of the most widely used methods for feature scaling.

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test)

Notice that we fit the scaler only on the training data and reuse its statistics to transform the test data, which avoids data leakage.
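As a minimal sketch of what happens under the hood, the example below reproduces the standardization formula by hand on tiny made-up matrices (`X_train_demo` and `X_test_demo` are illustrative names, not the wine data) and checks that the test rows are transformed with the training mean and standard deviation.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Tiny made-up feature matrices (rows = samples, columns = features)
X_train_demo = np.array([[1.0, 100.0], [2.0, 200.0], [3.0, 300.0]])
X_test_demo = np.array([[4.0, 400.0]])

demo_scaler = StandardScaler().fit(X_train_demo)

# fit() stores the per-column mean and standard deviation of the training data
train_mean = X_train_demo.mean(axis=0)
train_std = X_train_demo.std(axis=0)  # population std (ddof=0), as StandardScaler uses

# transform() applies (x - mean) / std with the *training* statistics
manual_test = (X_test_demo - train_mean) / train_std

print(np.allclose(demo_scaler.transform(X_test_demo), manual_test))
print(demo_scaler.transform(X_train_demo).mean(axis=0))
```

Because the test row lies outside the training range, its standardized values are well above zero. That is expected: the test set is never used to compute the statistics.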

Now let’s train the model again.

svm_clf_scaled = SVC(random_state=42)

svm_clf_scaled.fit(X_train_scaled, y_train)

y_pred_test_svm_scaled = svm_clf_scaled.predict(X_test_scaled)

accuracy_test_svm_scaled = accuracy_score(y_test, y_pred_test_svm_scaled)

print("SVM - with feature scaling")

print("Test accuracy:", accuracy_test_svm_scaled)

The code above creates another instance of the SVC class but now it fits the model to the scaled training data and makes predictions for the scaled test set.

Finally, it calculates and prints the accuracy score for the test set.

The accuracy is 60.63%, which is better than both the unscaled SVM (50.42%) and the logistic regression model (56.25%).

Scikit-learn has other classes for feature scaling, such as MinMaxScaler and RobustScaler, so we may do even better by using one of those.

Let’s try MinMaxScaler first.

It works by subtracting the minimum value and dividing by the range, which maps each feature to the interval from 0 to 1 on the training data.

from sklearn.preprocessing import MinMaxScaler

minmax_scaler = MinMaxScaler()

X_train_minmax = minmax_scaler.fit_transform(X_train)

X_test_minmax = minmax_scaler.transform(X_test)

svm_clf_minmax = SVC(random_state=42)

svm_clf_minmax.fit(X_train_minmax, y_train)

y_pred_test_svm_minmax = svm_clf_minmax.predict(X_test_minmax)

accuracy_test_svm_minmax = accuracy_score(y_test, y_pred_test_svm_minmax)

print("SVM - with MinMaxScaler")

print("Test accuracy:", accuracy_test_svm_minmax)

The accuracy of this model is 58.33%, which is better than no scaling but worse than standardization.
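The min-max formula described above can be checked by hand. The sketch below uses a made-up single feature (the `*_demo` names are illustrative) and also shows a detail that is easy to miss: because the minimum and range come from the training data, test values can land outside the 0 to 1 interval.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Made-up single-feature data; the test set has values beyond the training range
X_train_demo = np.array([[9.0], [10.0], [12.0], [13.0]])
X_test_demo = np.array([[8.0], [11.0], [14.0]])

demo_minmax = MinMaxScaler().fit(X_train_demo)

# Manual version of (x - min) / (max - min), using the training min and max
train_min = X_train_demo.min(axis=0)
train_range = X_train_demo.max(axis=0) - train_min
manual_test = (X_test_demo - train_min) / train_range

print("Scaled train:", demo_minmax.transform(X_train_demo).ravel())
print("Scaled test: ", demo_minmax.transform(X_test_demo).ravel())
```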

Let’s see if RobustScaler does better. It scales the features based on the median and interquartile range (IQR), making it robust to outliers.

from sklearn.preprocessing import RobustScaler

robust_scaler = RobustScaler()

X_train_robust = robust_scaler.fit_transform(X_train)

X_test_robust = robust_scaler.transform(X_test)

svm_clf_robust = SVC(random_state=42)

svm_clf_robust.fit(X_train_robust, y_train)

y_pred_test_svm_robust = svm_clf_robust.predict(X_test_robust)

accuracy_test_svm_robust = accuracy_score(y_test, y_pred_test_svm_robust)

print("SVM - with RobustScaler")

print("Test accuracy:", accuracy_test_svm_robust)

This method gets 59.79% accuracy, which beats MinMaxScaler but is still not as good as standardization.
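To illustrate what "robust to outliers" means here, the following sketch applies the median and IQR formula by hand to a made-up feature with one extreme value and compares the result with standardization. The data and variable names are assumptions for illustration only.

```python
import numpy as np
from sklearn.preprocessing import RobustScaler, StandardScaler

# Made-up single feature with one extreme outlier
X_demo = np.array([[1.0], [2.0], [3.0], [4.0], [100.0]])

# Manual version: subtract the median, divide by the interquartile range
median = np.median(X_demo, axis=0)
q1, q3 = np.percentile(X_demo, [25, 75], axis=0)
manual = (X_demo - median) / (q3 - q1)

robust = RobustScaler().fit_transform(X_demo)
standard = StandardScaler().fit_transform(X_demo)

print("RobustScaler:  ", robust.ravel())
print("StandardScaler:", standard.ravel())
```

With standardization, the outlier inflates the mean and standard deviation, so the four ordinary values end up squeezed close together. The median and IQR are barely affected by the outlier, so the ordinary values keep a usable spread.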

In conclusion, SVM can benefit from feature scaling, and different scalers have different effects on the model’s performance.

It is essential to test various scaling techniques and choose the one that works best for your specific dataset and problem.
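One way to run such a comparison is sketched below, assuming cross-validation is an acceptable substitute for the single train/test split used in this tutorial. It wraps each scaler and an SVC in a scikit-learn pipeline and scores them on synthetic data whose columns have been stretched to very different scales. The dataset and the `candidates` dictionary are illustrative assumptions, not the wine data.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler, RobustScaler, StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for the wine data, with columns on very different scales
X_demo, y_demo = make_classification(
    n_samples=300, n_features=6, n_informative=4, n_redundant=0, random_state=42
)
X_demo = X_demo * np.array([1.0, 10.0, 100.0, 1000.0, 0.1, 0.01])

candidates = {
    "no scaling": make_pipeline(SVC()),
    "StandardScaler": make_pipeline(StandardScaler(), SVC()),
    "MinMaxScaler": make_pipeline(MinMaxScaler(), SVC()),
    "RobustScaler": make_pipeline(RobustScaler(), SVC()),
}

# Each pipeline refits its scaler inside every fold,
# so validation rows are never seen by the scaler's fit step
results = {}
for name, model in candidates.items():
    scores = cross_val_score(model, X_demo, y_demo, cv=5, scoring="accuracy")
    results[name] = scores.mean()
    print(f"{name}: {scores.mean():.3f}")
```

Using a pipeline keeps the "fit on training data only" rule automatic, which matters once you start comparing several preprocessing options.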

SVM With Normalization

Normalization is a type of feature scaling where the goal is to rescale each sample's feature vector to have a unit norm. With the default L2 norm, this means the sum of the squares of the feature values in a row equals 1.

It is often used when the direction of a feature vector matters more than its magnitude, for example with cosine similarity on text data. Unlike the scalers above, it does not put the individual features on a common scale.

Normalization is applied to each row rather than each column, so normalizing one sample requires no information from any other sample. Nothing is learned from the training set, so this step cannot leak information from the test data.
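A minimal sketch of this row-wise behavior, using made-up samples (`X_demo` is illustrative): each row is divided by its own Euclidean length, and the result matches what the scikit-learn `Normalizer` produces with the L2 norm.

```python
import numpy as np
from sklearn.preprocessing import Normalizer

# Made-up samples; each row is normalized independently of the others
X_demo = np.array([[3.0, 4.0], [1.0, 0.0], [10.0, 10.0]])

# Manual L2 normalization: divide each row by its own Euclidean length
row_norms = np.linalg.norm(X_demo, axis=1, keepdims=True)
manual = X_demo / row_norms

normalized = Normalizer(norm="l2").fit_transform(X_demo)

print(normalized)
print("Row norms after normalization:", np.linalg.norm(normalized, axis=1))
```

Note that the magnitude of each sample is discarded: any two rows that point in the same direction become identical after normalization, which is one reason it may not suit every dataset.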

To apply normalization to the dataset, you can use the Normalizer class from scikit-learn:

from sklearn.preprocessing import Normalizer

normalizer = Normalizer()

X_train_normalized = normalizer.fit_transform(X_train)

X_test_normalized = normalizer.transform(X_test)

svm_clf_normalized = SVC(random_state=42)

svm_clf_normalized.fit(X_train_normalized, y_train)

y_pred_test_svm_normalized = svm_clf_normalized.predict(X_test_normalized)

accuracy_test_svm_normalized = accuracy_score(y_test, y_pred_test_svm_normalized)

print("SVM - with normalization")

print("Test accuracy:", accuracy_test_svm_normalized)

The accuracy of this model is 49.38%, which is worse than our SVM without feature scaling.

Keep in mind that, despite it not being the best method for this dataset, normalization is still useful in some cases.

So it’s important to add it to your toolbox and test it the next time you work with SVM on a new dataset.

Making a machine learning model perform better in practice sometimes resembles alchemy more than science.

Moving forward, what about XGBoost? Does it require feature scaling?